Merino

Merino is a service that provides address bar suggestions and curated recommendations to Firefox. Some of this content comes from third party providers. In this case, Merino serves as a privacy preserving buffer. User input in the address bar is handled by Merino and any clicked impression will be delegated to a Mozilla-controlled service which will then send an interaction ping if defined in the request and not to a provider directly. See API documentation for more details.

Table of Contents

api.md - API Documentation describes endpoints, query parameters, request and response headers, response objects and details on the suggestion objects.

firefox.md - Firefox and Merino Environments describes how to enable Merino in Firefox and lists the endpoints for the service in Production, State and Dev.

data.md - Data, Metrics, Logging describes all metrics and logs.

dev/index.md - Basic Developer Docs describes basics of working on Merino.

dev/dependencies.md - Development Dependencies describes the development dependencies required for Merino.

dev/logging-and-metrics.md - Logging and Metrics describes metrics, logging, and telemetry.

dev/release-process.md - Release Process describes the release process of Merino in detail.

dev/testing.md - Testing describes unit, integration and load tests for Merino.

dev/profiling.md - Profiling describes how to profile Merino to address performance issues.

operations/configs.md - Configuring Merino describes configuration management of the project, Dynaconf setup, and the configuration of the HTTP server, logging, metrics, Remote Settings, and Sentry.

operations/elasticsearch.md - Elasticsearch Operations describes some functionality and operations that we do on the Elasticsearch cluster.

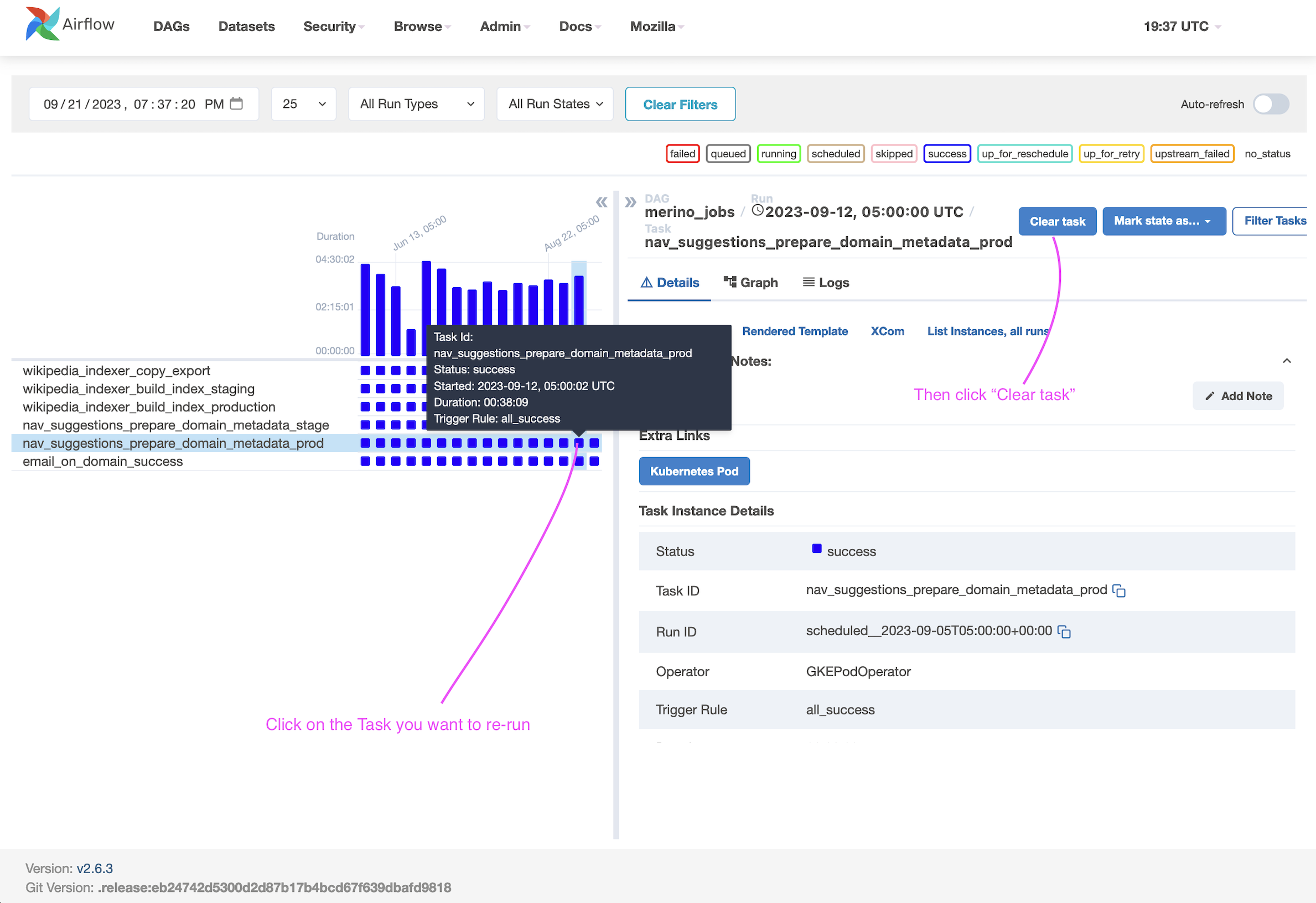

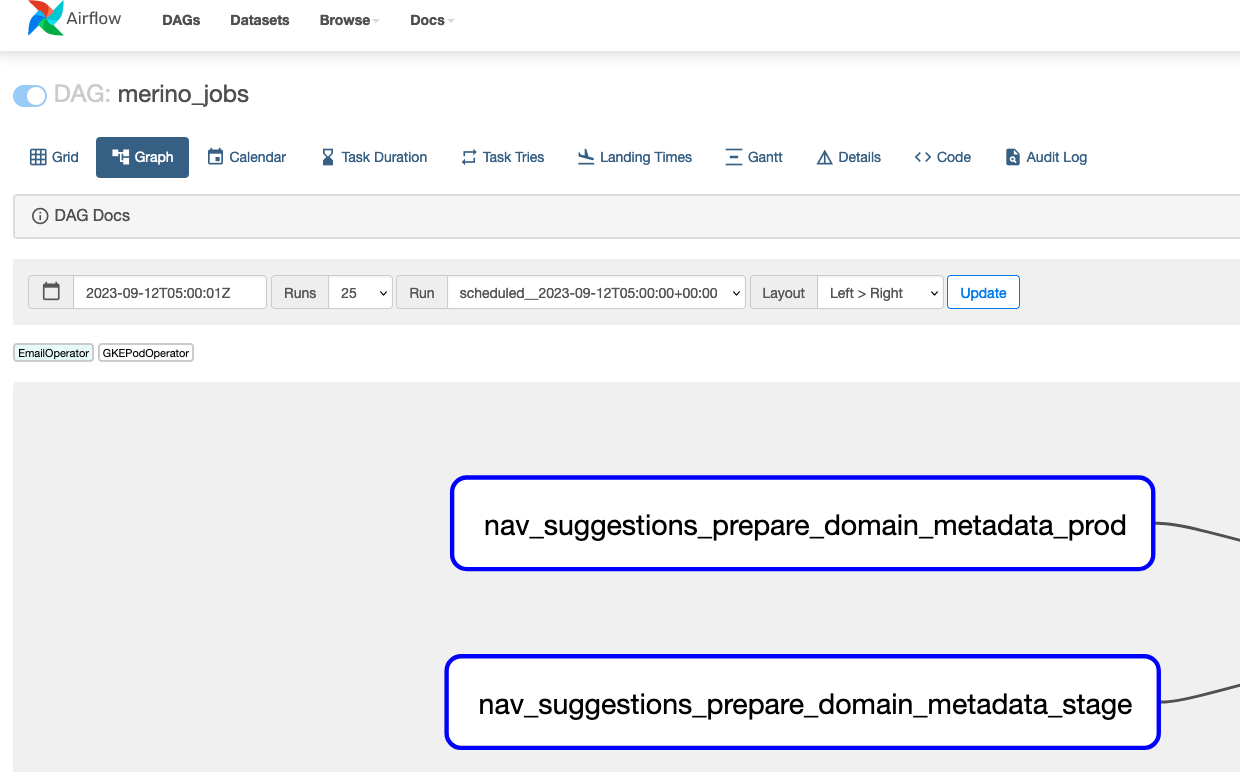

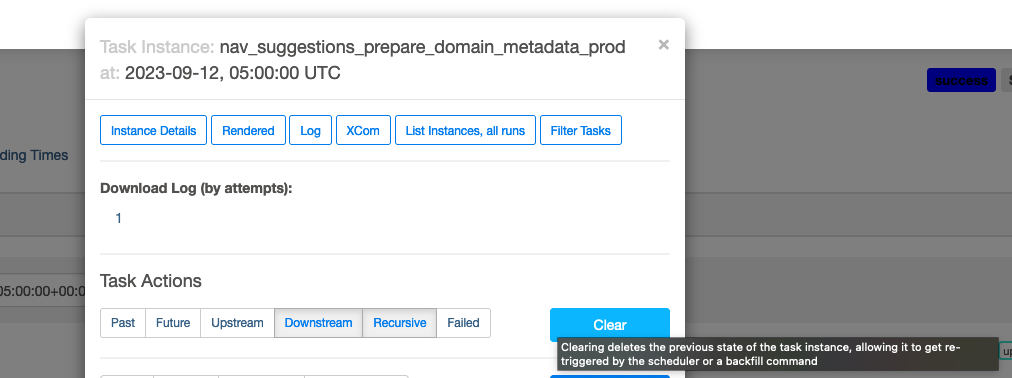

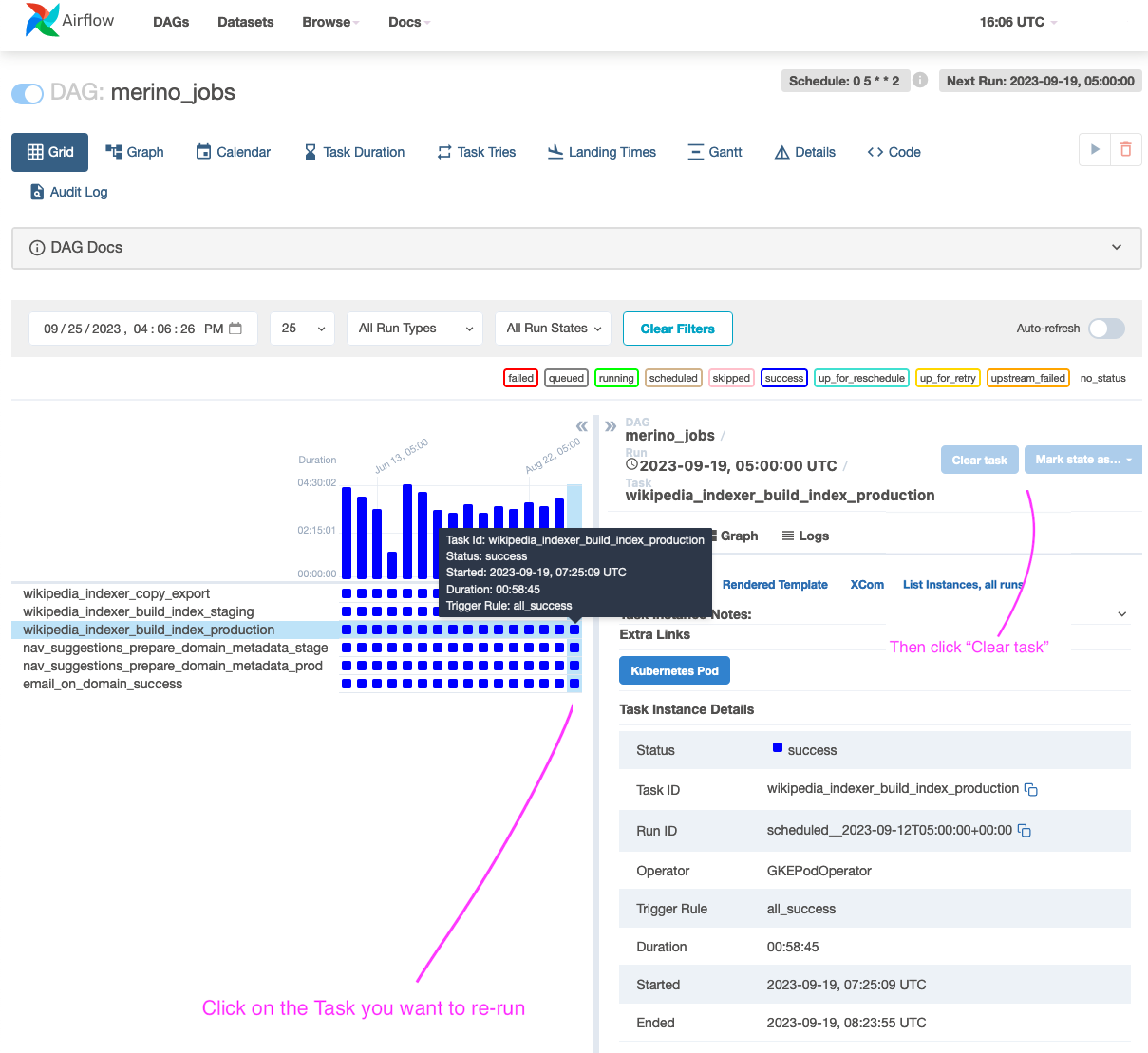

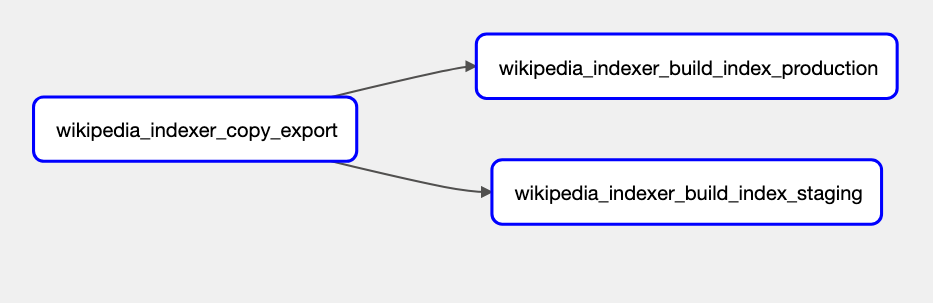

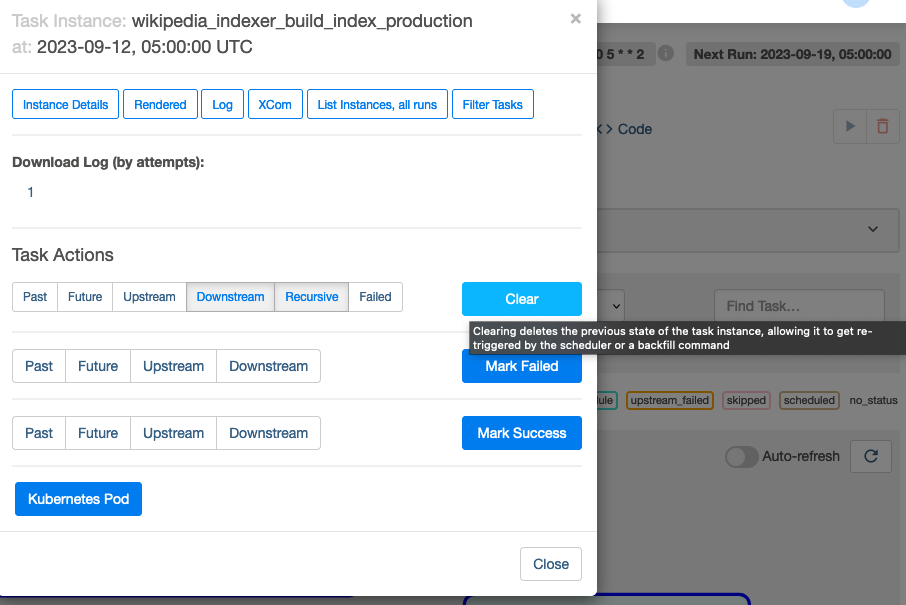

operations/jobs.md - Merino Jobs describes the jobs that are configured in Merino. Indicate where the jobs exist and link to the details for how the jobs are run.

About the Name

This project drives an important part of Firefox's "felt experience". That is, the feeling of using Firefox, hopefully in a delightful way. The word "felt" in this phrase refers to feeling, but it can be punned to refer to the textile. Felt is often made of wool, and Merino wool (from Merino sheep) produces exceptionally smooth felt.

Architecture

flowchart TD

subgraph Firefox["fa:fa-firefox-browser Firefox"]

NewTab

UrlBar

end

subgraph NewTab["fa:fa-plus New Tab"]

CuratedRecommendations("Curated Recommendations")

WeatherWidget("Weather Widget")

end

subgraph UrlBar["fa:fa-magnifying-glass Url Bar"]

online("Online Search and Suggest")

offline("Offline Search and Suggest<br>fetches adMarketplace, static Wikipedia, <br>and other suggestions.<br> Offline mode is fallback if Merino times out.")

end

subgraph middleware["fa:fa-layer-group Middleware"]

Geolocation["Geolocation"]

Logging["Logging"]

UserAgent["UserAgent"]

Metrics["Metrics"]

end

subgraph suggestProviders["fa:fa-truck Suggest Providers"]

admProvider("adm")

amoProvider("amo")

geolocationProvider("geolocation")

toppicksProvider("top-picks")

weatherProvider("weather")

wikipediaProvider("wikipedia")

financeProvider("finance")

end

subgraph suggestBackends["fa:fa-microchip Suggest Backends"]

remoteSettingsBackend("remote settings")

accuweatherBackend("accuweather")

elasticBackend("elastic")

toppicksBackend("top picks")

dynamicAmoBackend("dynamic addons")

polygonBackend("polygon")

end

subgraph curatedRecommendationsBackends["fa:fa-microchip Curated Recommendations Backends"]

corpusBackend("corpus")

extendedExpirationCorpusBackend("corpus extended expiration")

gcsEngagementBackend("gcs engagement")

fakespotBackend("fakespot")

gcsPriorBackend("gcs prior")

end

subgraph Merino["fa:fa-server Merino"]

srh("fa:fa-gears Suggest Request Handler")

crh("fa:fa-gears Curated Recommendations Handler")

mrh("fa:fa-gears Manifest Handler")

middleware

maxmind[("fa:fa-database MaxmindDB")]

suggestProviders

curatedRecommendationsProvider["fa:fa-truck Curated Recommendations Provider"]

manifestProvider["fa:fa-truck Manifest Provider"]

suggestBackends

curatedRecommendationsBackends

manifestBackend["Manifest Backend"]

end

subgraph Jobs["fa:fa-rotate Airflow (Merino Jobs)"]

wikipediaSyncJob("Wikipedia Sync")

toppicksSyncJob("Top Picks Sync")

end

User[\"fa:fa-user User"/] -- Accessing the Firefox URL bar --> Firefox

online -- /api/v1/suggest --> srh

CuratedRecommendations -- "/api/v1/curated-recommendations" --> crh

manifest["manifest"] -- /api/v1/manifest --> mrh

WeatherWidget --> srh

srh -..- middleware

crh -..- middleware

mrh -..- middleware

srh --> suggestProviders

crh --> curatedRecommendationsProvider

mrh --> manifestProvider

curatedRecommendationsProvider --> curatedRecommendationsBackends

manifestProvider --> manifestBackend

admProvider --> remoteSettingsBackend

amoProvider --> dynamicAmoBackend

toppicksProvider --> toppicksBackend

weatherProvider --> accuweatherBackend

wikipediaProvider --> elasticBackend

financeProvider --> polygonBackend

polygonBackend --> polygonApi("fa:fa-globe Polygon API")

Geolocation --> maxmind

dynamicAmoBackend --> addonsAPI("fa:fa-globe Addons API")

elasticBackend --> elasticSearch[("Elasticsearch")]

manifestBackend -..-> toppicksData[("fa:fa-database GCS Top Picks Data,<br>a list of Mozilla curated popular sites and metadata to be <br>displayed on browser")]

toppicksSyncJob -..-> toppicksData

accuweatherBackend -..-> accuweatherAPI("fa:fa-globe Accuweather API")

accuweatherAPI -. tries to query cache first ..-> redis[("fa:fa-memory Redis Cache")]

gcsEngagementBackend --> gcsMerinoAirflowData[("fa:fa-database GCS Merino Airflow Data")]

gcsPriorBackend --> gcsMerinoAirflowData

fakespotBackend --> gcsFakespotNewTabProducts[("fa:fa-database GCS Fakespot NewTab Products")]

corpusBackend -..-> curatedCorpusAPI("fa:fa-globe Curated Corpus API")

offline -..- kinto[("Remote Settings")]

remoteSettingsBackend --- merinoRustExtension("fa:fa-puzzle-piece Merino Rust Extension")

merinoRustExtension --> kinto

wikipediaSyncJob -. Syncs Wikipedia entries weekly ..- elasticSearch

Merino API documentation

This page describes the API endpoints available on Merino.

The autogenerated API documentation exists here.

Working with the Manifest endpoint

Overview

The /manifest endpoint returns a curated list of websites with associated metadata. This endpoint is designed to be used as part of your development process to maintain an up-to-date copy of website favicons.

Endpoint details

- URL:

https://merino.services.mozilla.com/api/v1/manifest - Method:

GET - Response:

JSON

{

"domains": [

{

"rank": 1,

"domain": "google",

"categories": [

"Search Engines"

],

"serp_categories": [

0

],

"url": "https://www.google.com/",

"title": "Google",

"icon": ""

},

{

"rank": 2,

"domain": "microsoft",

"categories": [

"Business",

"Information Technology"

],

"serp_categories": [

0

],

"url": "https://www.microsoft.com/",

"title": "Microsoft – AI, Cloud, Productivity, Computing, Gaming & Apps",

"icon": "https://merino-images.services.mozilla.com/favicons/90cdaf487716184e4034000935c605d1633926d348116d198f355a98b8c6cd21_17174.oct"

}

]

}

The icon field has the url of the Mozilla-hosted favicon of the website.

Usage

- You can save the JSON response as a

manifest.jsonfile:

curl https://merino.services.mozilla.com/api/v1/manifest -o manifest.json

Or, if you have jq installed on your system, you can pretty-print it:

curl -s https://merino.services.mozilla.com/api/v1/manifest | jq '.' > manifest.json

- Check it into your repository and ship it with the application you are building.

- Whenever you need to display a favicon for a website or URL, you can check the

Manifestfile and use theiconfield as a link to the favicon.

Add custom domains

You are also able to add custom domains to this endpoint. We currently run a weekly cron job to collect favicons from the Top 2000 websites. Adding custom domains is handled via this Python file in the Merino codebase: https://github.com/mozilla-services/merino-py/blob/main/merino/jobs/navigational_suggestions/custom_domains.py

To add yours:

git clone git@github.com:mozilla-services/merino-py.git- Add a new entry to the

CUSTOM_DOMAINSlist withurland at least onecategory: https://github.com/mozilla-services/merino-py/blob/main/merino/jobs/navigational_suggestions/custom_domains.py - Create a PR against the

merino-pyrepo with your changes

The custom domains will be picked up during the next run (every Wednesday). This job can also be run manually by following instructions here.

Add custom favicons

Some websites block automated scrapers or have unreliable favicon detection. For these domains, you can specify a direct favicon URL that will be used instead of attempting to scrape it.

What are custom favicons?

Custom favicons are pre-defined favicon URLs stored in merino/jobs/navigational_suggestions/enrichments/custom_favicons.py. When the job processes a domain, it checks this file first before attempting to scrape the favicon. This ensures reliable favicon delivery for domains that would otherwise fail.

Where is this file used?

The CUSTOM_FAVICONS dictionary is imported and used by the domain processor (processing/domain_processor.py) during the favicon extraction phase. If a domain is found in the custom favicons mapping, the job will:

- Download the specified favicon URL

- Upload it to the CDN

- Skip the web scraping step entirely for that domain's favicon

Adding custom favicons with the CLI (Recommended)

The easiest way to add custom favicons is using the probe-images command-line tool. This tool will automatically test a domain, find the best favicon, and save it to the CUSTOM_FAVICONS dictionary.

Basic usage:

# Test a domain and save its best favicon

probe-images example.com --save

With options:

# Specify minimum favicon width (default is 32px)

probe-images example.com --save --min-width 64

# Test multiple domains at once

probe-images example.com mozilla.org github.com --save

What the CLI does:

When you run probe-images with the --save flag, it will:

- Scrape the domain and extract all available favicons

- Apply the same selection logic used in production to find the best favicon

- Automatically update

merino/jobs/navigational_suggestions/enrichments/custom_favicons.py - Add the domain (without TLD) as the key and the best favicon URL as the value

Example output:

Testing domain: mozilla.org

✅ Success!

Title Internet for people, not profit — Mozilla Global

Best Icon https://www.mozilla.org/media/img/favicons/mozilla/favicon-196x196.png

Total Favicons 5

All favicons found:

- https://www.mozilla.org/media/img/favicons/mozilla/apple-touch-icon.png (rel=apple-touch-icon size=180x180)

- https://www.mozilla.org/media/img/favicons/mozilla/favicon-196x196.png (rel=icon size=196x196)

- https://www.mozilla.org/media/img/favicons/mozilla/favicon.ico (rel=shortcut,icon)

Save Results:

Saved Domain mozilla

Saved URL https://www.mozilla.org/media/img/favicons/mozilla/favicon-196x196.png

Save PATH merino/jobs/navigational_suggestions/enrichments/custom_favicons.py

Summary: 1/1 domains processed successfully

Adding custom favicons manually

You can also manually edit the CUSTOM_FAVICONS dictionary if you already know the favicon URL:

git clone git@github.com:mozilla-services/merino-py.git- Edit

merino/jobs/navigational_suggestions/enrichments/custom_favicons.py - Add a new entry to the

CUSTOM_FAVICONSdictionary:

CUSTOM_FAVICONS: dict[str, str] = {

"axios": "https://static.axios.com/icons/favicon.svg",

"espn": "https://a.espncdn.com/favicon.ico",

"yoursite": "https://yoursite.com/path/to/favicon.png", # Add your domain here

# ...

}

Important notes:

- Use the second-level domain name without the TLD (e.g., use

"mozilla"not"mozilla.org") - Use the direct URL to the favicon file

- Ensure the URL is publicly accessible and won't break over time

- Create a PR against the

merino-pyrepo with your changes

The custom favicons will be used during the next job run (every Wednesday), or can be triggered manually.

Configuring Firefox and Merino Environments

Merino has been enabled by default in Firefox. Though, you will need to enable

the data sharing for Firefox Suggest to fully enable the feature. To enable it,

type about:config in the URL bar set the Firefox preference

browser.urlbar.quicksuggest.dataCollection.enabled to true. By default,

Merino will connect to the production environments. This is controlled with the

browser.urlbar.merino.endpointURL preference. See below for other options.

You can also query any of the endpoint URLs below with something like:

curl 'https://merino.services.allizom.org/api/v1/suggest?q=your+query'

Environments

Production

Endpoint URL: https://merino.services.mozilla.com/api/v1/suggest

The primary environment for end users. Firefox is configured to use this by default.

Stage

Endpoint URL: https://merino.services.allizom.org/api/v1/suggest

This environment is used for manual and load testing of the server. It is not guaranteed to be stable or available. It is used as a part of the deploy process to verify new releases before they got to production.

Data collection

This page should list all metrics and logs that Merino is expected to emit in production, including what should be done about them, if anything.

Logs

This list does not include any DEBUG level events, since those are not logged

by default in production. The level and type of the log is listed.

Any log containing sensitive data must include a boolean field sensitive

that is set to true to exempt it from flowing to the generally accessible

log inspection interfaces.

Merino APIs

-

INFO web.suggest.request- A suggestion request is being processed. This event will include fields for all relevant details of the request. Fields:sensitive- Always set to true to ensure proper routing.query- If query logging is enabled, the text the user typed. Otherwise an empty string.country- The country the request came from.region- The first country subdivision the request came from.city- The city the request came from.dma- A US-only location description that is larger than city and smaller than states, but does not align to political borders.agent- The original user agent.os_family- Parsed from the user agent. One of "windows", "macos", "linux", "ios", "android", "chrome os", "blackberry", or "other".form_factor- Parsed from the user agent. One of "desktop", "phone", "tablet", or "other"browser- The browser and possibly version detected. Either "Firefox(XX)" where XX is the version, or "Other".rid- The request ID.- WIP

accepts_english- True if the user's Accept-Language header includes an English locale, false otherwise. requested_providers- A comma separated list of providers requested via the query string, or an empty string if none were requested (in which case the default values would be used).client_variants- Any client variants sent to Merino in the query string.session_id- A UUID generated by the client for each search session.sequence_no- A client-side event counter (0-based) that records the query sequence within each search session.

ERROR dockerflow.error_endpoint- The__error__endpoint of the server was called. This is used to test our error reporting system. It is not a cause for concern, unless we receive a large amount of these records, in which case some outside service is likely malicious or misconfigured.

Merino Middleware Logs

Geolocation

WARNING merino.middleware.geolocation- There was an error with a geolocation lookup.

Merino Cron Tasks

WARNING merino.cron- There was an error while executing a cron task.

Merino Feature Flags

ERROR merino.featureflags- There was an error while attempting to assign a feature flag for a suggest API request.

Curated Recommendations

ERROR merino.curated_recommendations.corpus_backends.corpus_api_backend- Failed to get timezone for scheduled surface.WARNING merino.curated_recommendations.corpus_backends.corpus_api_backend- Retrying CorpusApiBackend after an http client exception was raised.ERROR GcsEngagement failed to update cache: {e}- unexpected exception when updating engagement.ERROR Curated recommendations engagement size {blob.size} > {self.max_size}- Max engagement blob size is exceeded. The backend will gracefully fall back to cached data or 0's.INFO Curated recommendations engagement unchanged since {self.last_updated}.- The engagement blob was not updated since the last check.last_updatedis expected to be between 0 and 30 minutes.

Metrics

A note on timers: Statsd timers are measured in milliseconds, and are reported as integers (at least in Cadence). Milliseconds are often not precise enough for the tasks we want to measure in Merino. Instead, we use generic histograms to record microsecond times. Metrics recorded in this way should have

-usappended to their name, to mark the units used (since we shouldn't put the proper unit μs in metric names).

-

merino.providers.initialize- A timer to measure the overall initialization duration (in ms) for all providers. -

merino.providers.initialize.<provider>- A timer to measure the initialization duration (in ms) for the given<provider>.Example:

merino.providers.initialize.adm -

merino.<http_method>.<url_path>.status_codes.<status_code>- A counter to measure the status codes of an HTTP method for the<url_path>.Example:

merino.get.api.v1.suggest.status_codes.200 -

merino.<http_method>.<url_path>.timing- A timer to measure the duration (in ms) of an HTTP method for a URL path.Example:

merino.get.api.v1.suggest.timing -

merino.<provider_module>.query- A timer to measure the query duration (in ms) of a certain suggestion provider.Example:

merino.providers.suggest.adm.query -

merino.<provider_module>.query.timeout- A counter to measure the query timeouts of a certain suggestion provider.Example:

merino.providers.suggest.wikipedia.query.timeout -

merino.suggestions-per.request- A histogram metric to get the distribution of suggestions per request. -

merino.suggestions-per.provider.<provider_module>- A histogram metric to get the distribution of suggestions returned per provider (per request).Example:

merino.suggestions-per.provider.wikipedia

AccuWeather

The weather provider records additional metrics.

accuweather.upstream.request.<request_type>.get- A counter to measure the number of times an upstream request to Accuweather was made.accuweather.request.location.not_provided- A counter to measure the number of times a query was send without a location being provided, and therefore unable to process a weather request. Sampled at 75%.accuweather.request.location.dist_calculated.success- A counter to measure the number of successful lat long distance calculations used to find location.accuweather.request.location.dist_calculated.fail- A counter to measure the number of failed lat long distance calculations used to find location.merino.providers.accuweather.query.cache.fetch- A timer to measure the duration (in ms) of looking up a weather report in the cache. Sampled at 75%.merino.providers.accuweather.query.cache.fetch.miss.locations- A counter to measure the number of times weather location was not in the cache. Sampled at 75%.merino.providers.accuweather.query.cache.fetch.miss.currentconditions- A counter to measure the number of times a current conditions was not in the cache. Sampled at 75%.merino.providers.accuweather.query.cache.fetch.miss.forecasts- A counter to measure the number of times a forecast for a location was not in the cache. Sampled at 75%.merino.providers.accuweather.query.cache.fetch.hit.{locations | currentconditions | forecasts}- A counter to measure the number of times a requested value like a location or forecast is in the cache. We don't count TTL hits explicitly, just misses. Sampled at 75%.merino.providers.accuweather.query.backend.get- A timer to measure the duration (in ms) of a request for a weather report from the backend. This metric isn't recorded for cache hits. Sampled at 75%.merino.providers.accuweather.query.cache.store- A timer to measure the duration (in ms) of saving a weather report from the backend to the cache. This metric isn't recorded for cache hits. Sampled at 75%.merino.providers.accuweather.query.cache.error- A counter to measure the number of times the cache store returned an error when fetching or storing a weather report. This should be 0 in normal operation. In case of an error, the logs will include aWARNINGwith the full error message.merino.providers.accuweather.query.weather_report- A counter to measure the number of queries that are for weather, origin of the request is recorded in tags.merino.providers.accuweather.skip_cities_mapping.total.size- A counter to measure the total number of occurrences cities were skipped due to no locationmerino.providers.accuweather.skip_cities_mapping.unique.size- A counter to measure the number of unique cities that are skipped due to no location

Curated Recommendations

The following additional metrics are recorded when curated recommendations are requested.

corpus_api.{get_sections | scheduled_surface}.timing- A timer to measure the duration (in ms) of making a request to the Corpus API.corpus_api.{get_sections | scheduled_surface}.status_codes.{res.status_code}- A counter to measure the status codes of an HTTP request to the curated-corpus-api.corpus_api.{get_sections | scheduled_surface}.graphql_error- A counter to measure the number of GraphQL errors from the curated-corpus-api.recommendation.engagement.update.timing- A timer to measure the duration (in ms) of updating the engagement data from GCS.recommendation.engagement.size- A gauge to track the size of the engagement blob on GCS.recommendation.engagement.count- A gauge to measure the total number of engagement records.recommendation.engagement.{country}.count- A gauge to measure the number of scheduled corpus items with engagement data per country.recommendation.engagement.{country}.clicks- A gauge to measure the number of clicks per country in our GCS engagement blob.recommendation.engagement.{country}.impressions- A gauge to measure the number of impressions per country in our GCS engagement blob.recommendation.engagement.last_updated- A gauge for the staleness (in seconds) of the engagement data, measured between when the data was updated in GCS and the current time.recommendation.prior.update.timing- A timer to measure the duration (in ms) of updating the prior data from GCS.recommendation.prior.size- A gauge to track the size of the Thompson sampling priors blob on GCS.recommendation.prior.last_updated- A gauge for the staleness (in seconds) of the prior data, measured between when the data was updated in GCS and the current time.recommendation.ml.contextual.update.timing- A timer to measure the duration (in ms) of updating the engagement data from GCS.recommendation.ml.contextual.size- A gauge to track the size of the engagement blob on GCS.recommendation.ml.contextual.last_updated- A gauge for the staleness (in seconds) of the contextual engagement data, measured between when the data was updated in GCS and the current time.recommendation.ml.cohort_model.update.timing- A timer to measure the duration (in ms) of updating the cohort model data from GCS.recommendation.ml.cohort_model.size- A gauge to track the size of the cohort model blob on GCS.recommendation.ml.cohort_model.last_updated- A gauge for the staleness (in seconds) of the cohort model data, measured between when the data was updated in GCS and the current time. This value could get large as the model may be updated weekly.

Manifest

When requesting a manifest file, we record the following metrics.

manifest.request.get- A counter for how many requests against the/manifestendpoint where made.manifest.request.timing- A timer for how long it took the endpoint to fulfill the request.manifest.gcs.fetch_time- A timer for how long it took to download the latest manifest file from the Google Cloud bucket.manifest.request.no_manifest- A counter to measure how many times we didn't find the latest manifest file.manifest.request.error- A counter to measure how many times we could not provide a valid JSON manifest file.manifest.invalid_icon_url- A counter to measure how many requests the Manifest provider gets for domains where we don't have a valid icon Url (with tags).

Service Governance

The following metrics are recorded for service governance monitoring.

governance.circuits.<circuit-breaker-name>- A gauge to instrument the failure count for each "open" circuit breaker.

Merino Developer Guidelines and Social Contract

This is an additional contractual document on top of CONTRIBUTING.

Foster a Shared Ownership

Not only do Merino developers build the service together, they also share the ownership of the service. That ownership is embodied in the following responsibilities:

- Be responsible for the entire lifecycle of each change landed in the code base: from writing the PR and getting it merged; ensuring it goes through CI/CD and eventually deployed to production; setting up monitoring on metrics and ensuring its healthy status and the overall health of Merino.

- Be familiar with Merino’s operation. Conduct operational reviews on a regular basis. Identify and track operational issues. Coordinate with the team(s) to close action items and resolve the identified issues.

- Documentation. Make sure the code meets the documentation requirements (no linting errors). If a change adds/updates the API, logs or metrics, ensure the associated documentation is up to date.

We commit to sharing knowledge about Merino across the team, with the long-term goal that each team member is capable of resolving incidents of Merino. Merino developers should familiarize themselves with the Mozilla Incident Response Process and the Merino Runbooks. Each individual should be able to initiate an incident response, serve as the incident handling manager, and drive it to its resolution along with other incident responders. Any issues associated with an incident should be tracked in Jira in a way the team agrees upon. For example, assigned with an ‘incident-action-items’ label.

- Be aware of the infrastructure costs associated with new functionality. The team should have a good understanding of the cost to run the service including logging, computing, networking, and storage costs.

- Be mindful of work hours and the time zones of your fellow developers when scheduling meetings, deploying code, pairing on code, or collaborating in other ways. Set your work hours in Google Calendar and configure Slack to receive notifications only during those times. We encourage code deployments when there are fellow developers online to support. If you must deploy off-hours, ensure you have a peer available to approve any potential rollbacks.

We are not going to grow individual Merino developers in deployment, operation, documentation, and incident responding for Merino. Rather, we’d like to foster a shared ownership with shared knowledge in every aspect of the day-to-day job for Merino.

Use ADRs to Record Architectural Decisions

ADRs (Architectural Decision Record) are widely adopted by teams at Mozilla to capture important architecture decisions, including their context and consequences. Developers are encouraged to exercise the ADR process to facilitate the decision making on important subjects of the project. ADRs should be made easy to access and reference and therefore are normally checked into the source control and rendered as part of the project documentation.

Use SLO and Error Budget to Manage Service Risks

We strive to build highly available and reliable services while also emphasizing rapid iteration and continuous deployment as key aspects of product development. We opt to use SLOs (Service Level Objective) and error budget for risk management. SLOs can be co-determined by the product owner(s) and the service builders & operators. The error budget should be monitored and enforced by the monitoring infrastructure. Once the budget is reached, the service owners should be more reluctant or even reject to accept risky code artifacts until the budget gets reset.

Request RRA for New Content Integrations

RRA (Rapid Risk Assessment) is the recommended process for service builders to perform a standardized lightweight risk assessment for the service or the feature of interest. Since Merino is a user-facing consumer service, we shall take extra caution for user security and the related risks. We have agreed with the Security Assurance team that we’d request an RRA (by following the RRA instructions) for every new content integration (e.g. AccuWeather) or content storage (e.g. Elasticsearch) for Merino.

Testing for Productivity & Reliability

We value testing as a mechanism of establishing feedback loops for service development, design, and release. As developers add new changes to the project, thorough and effective testing reduces uncertainty and generates short feedback loops, accelerating development, release, and regression resolution. Testing also helps reduce the potential decrease in reliability from each change. To materialize those merits for Merino, we have designed the Merino Test Strategy and fulfilled it with adequate tests. We anticipate the cross-functional team to adhere to the strategy and evolve it to better support the project over time.

Aim for Simplicity

We prioritize simple and conventional solutions in all aspects of development, from system design, to API specs, to code. We prefer mature, battle-tested technologies over complex, cutting-edge alternatives. At the same time, we know that Merino can always get better, and we welcome ideas from everyone. If you’ve got a new approach in mind, share it with the team or propose an Architectural Decision Record (ADR).

Blame-free Culture

While we strive to make Merino a highly reliable service, things would still go wrong regardless of how much care we take. Code errors, misconfigurations, operational glitches, to name a few. We opt for a blame-free culture to ease the mental stress when individuals are encouraged to take on more activities & responsibilities, especially before they gain familiarity around the tasks. We believe that learning from mistakes and incorporating the learned experience into processes to avoid repeating the same mistakes is more constructive and useful than putting someone on center stage. With a blame-free culture and proper risk management processes in place, the average cost of failures should be more tolerable within the error budget boundary. Who would be afraid of making mistakes?

Have Fun

Last but not least. Let’s make Merino a fun project to work with!

Developer documentation for working on Merino

tl;dr

Here are some useful commands when working on Merino.

Run the main app

This project uses uv for dependency management. See dependencies for how to install uv on your machine.

Install all the dependencies:

$ uv sync --all-groups

Run Merino:

$ uv run fastapi run merino/main.py --reload

# Or you can use a shortcut

$ make run

# To run in hot reload mode

$ make dev

General commands

# List all available make commands with descriptions

$ make help

$ make install

# Run linter

$ make ruff-lint

# Run format checker

$ make ruff-fmt

# Run formatter

$ make ruff-format

# Run black

$ make black

# Run bandit

$ make bandit

# Run mypy

$ make mypy

# Run all linting checks

$ make -k lint

# Run all formatters

$ make format

# Run merino-py with the auto code reloading

$ make dev

# Run merino-py without the auto code reloading

$ make run

# Run unit and integration tests and evaluate combined coverage

$ make test

# Evaluate combined unit and integration test coverage

$ make test-coverage-check

# Run unit tests

$ make unit-tests

# List fixtures in use per unit test

$ make unit-test-fixtures

# Run integration tests

$ make integration-tests

# List fixtures in use per integration test

$ make integration-test-fixtures

# Build the docker image for Merino named "app:build"

$ make docker-build

# Run local execution of (Locust) load tests

$ make load-tests

# Stop and remove containers and networks for load tests

$ make load-tests-clean

# Generate documents

$ make doc

# Preview the generated documents

$ make doc-preview

# Profile Merino with Scalene

$ make profile

# Run the Wikipedia CLI job

$ make wikipedia-indexer job=$JOB

Documentation

You can generate documentation, both code level and book level, for Merino and

all related crates by running ./dev/make-all-docs.sh. You'll need mdbook

and mdbook-mermaid, which you can install via:

make doc-install-deps

If you haven't installed Rust and Cargo, you can reference the official Rust document.

Local configuration

The default configuration of Merino is development, which has human-oriented

pretty-print logging and debugging enabled. For settings that you wish to change in the

development configuration, you have two options, listed below.

For full details, make sure to check out the documentation for Merino's setting system (operations/configs.md).

Update the defaults

Dynaconf is used for all configuration management in Merino, where

values are specified in the merino/configs/ directory in .toml files. Environment variables

are set for each environment as well and can be set when using the cli to launch the

Merino service.

Environment variables take precedence over the values set in the .toml files, so

any environment variable set will automatically override defaults. By the same token,

any config file that is pointed to will override the merino/configs/default.toml file.

If the change you want to make makes the system better for most development

tasks, consider adding it to merino/configs/development.toml, so that other developers

can take advantage of it. If you do so, you likely want to add validation to those settings

which needs to be added in merino/config.py, where the Dynaconf instance exists along

with its validators. For examples of the various config settings, look at configs/default.toml

and merino/config.py to see an example of the structure.

It is not advisable to put secrets in configs/secrets.toml.

Create a local override

Dynaconf will use the specified values and environment variables in the

merino/configs/default.toml file. You can change the environment you

want to use as mentioned above, but for local changes to adapt to your

machine or tastes, you can put the configuration in merino/configs/development.local.toml.

This file doesn't exist by default, so you will have to create it.

Then simply copy from the other config files and make the adjustments

that you require. These files should however not be checked into source

control and are configured to be ignored, so long as they follow the *.local.toml

format. Please follow this convention and take extra care to not check them in

and only use them locally.

See the Dynaconf Documentation for more details.

Content Moderation and Blocklists

This summarizes the mechanisms that block sensitive or questionable content in Merino. Because Merino supports several providers that have a broad range of potential suggestions, often from different sources, we require the ability to remove certain suggestions from being displayed.

Blocklists in Merino filter content at two distinct phases:

-

Content that is filtered at the data creation and indexing phase. Provider backends serve suggestions to the client based on matching against searched terms. This ensures that data that could be sensitive is not available to search against since it is not indexed. For instance, the Wikipedia provider filters categories of articles that are tagged with a matching category term in the blocklist.

-

Content that is filtered at application runtime. There are instances where we want to quickly and dynamically add to block lists without re-indexing or running a job. In this case, suggestions are compared to a static list in the code that blocks out these suggestions.

Navigational Suggestions / Top Picks

In the Navigational Suggestions provider, a blocklist is used during data creation to block specific domains of websites that we do not want to suggest.

The blocklist, domain_blocklist.json, is referenced during data generation of the top_picks.json file, which is ingested by the provider backend. This ensures specific domains are not indexed for suggestions. The blocklist is loaded and an exact string comparison is made between all second-level domains and the second-level domains defined in the blocklist.

See nav-suggestions blocklist runbook for more information.

Wikipedia

The Wikipedia Provider does both title filtering and category filtering at the data indexing level.

Since the indexing jobs run periodically, we also implemented title filtering in the provider to get the blocking out sooner.

Indexer

The Wikipedia Indexer Job references a remote blocklist which contains sensitive categories. At job runtime, the indexer reads the remote blocklist and creates a set of article categories that are be excluded from indexing.

The article categories in the blocklist are chosen based off of analysis and best guesses of what could be considered objectionable content, based off of Mozilla's values and brand image. Any modifications to the file should be done with careful consideration.

The indexer also blocks titles that are defined in the WIKIPEDIA_TITLE_BLOCKLIST in the application, which is referenced below. Any title that matches this blocklist is excluded from indexing.

Provider

When queried, the Wikipedia provider reads the WIKIPEDIA_TITLE_BLOCKLIST when creating a WikipediaSuggestion and if the query matches a blocked title, the suggestion is not shown to the client.

We have this feature because the indexing job is not run daily. Therefore, we desire having an option to rapidly add to this list should we need to block a specific article.

See wikipedia blocklist runbook for more information.

Development Dependencies

Package Dependencies

This project uses uv for dependency management, virtual environment management and running scripts and commands.

While you can use the vanilla virtualenv to set up the dev environment, we highly recommend to check

out uv.

To install uv, run:

$ pipx install uv

Or install via your preferred method.

Feel free to browse the pyproject.toml file for a listing of dependencies and their versions.

First, lets make sure you have a virtual environment set up with the right Python version. This service uses Python 3.13.

$ uv venv

See more about setting up virtual envs and Python version with uv.

Once uv is installed, and a virtual environment is created with the correct Python version, install all the dependencies:

$ uv sync --all-groups

Add packages to project via uv

$ uv add <package_name>

After that you should be to run Merino as follows:

$ uv run fastapi run merino/main.py --reload

Moving from the Poetry & Pyenv Set up

If you had your environment previously set up via poetry and pyenv, here are the steps to move to uv. This would be a nice clean up and reset.

# Remove your previous virtual environment. If you set up using pyenv, then:

rm .python-version

pyenv uninstall merino-py

# Uninstall pyenv

rm -rf $(pyenv root)

# or if you installed it via your OS package manager

brew uninstall pyenv

Service Dependencies

Merino uses a Redis-based caching system, and so requires a Redis instance to

connect to. In addition, a GCS (GCP Cloud Storage) emulator, fake-gcs-server,

is also provided to facilitate local development and testing.

To make things simple, all these service dependencies can be started with Docker

Compose, using the docker-compose.yaml file in the dev/ directory.

Notably, this does not run any Merino components that have source

code in this repository.

# Run this at the Merino's project root

$ docker compose -f dev/docker-compose.yaml up

# Or run services in deamon mode

$ docker compose -f dev/docker-compose.yaml up -d

# Stop it

$ docker compose -f dev/docker-compose.yaml down

# Shortcuts are also provided

$ make docker-compose-up

$ make docker-compose-up-daemon

$ make docker-compose-down

Redis

Two Redis servers (primary & replica) are listening on ports 6379 and 6380,

and can be connected via redis://localhost:6379 and redis://localhost:6380,

respectively.

This Dockerized set up is optional. Feel free to run the dependent services by any other means as well.

GCS Emulator

The GCS emulator is listening on port 4443 and ready for both read and write

operations. Make sure you set a environment variable STORAGE_EMULATOR_HOST=http://localhost:4443

so that Merino's GCS clients can connect to it. For example,

$ STORAGE_EMULATOR_HOST=http://localhost:4443 make run

Optionally, you can create a GCS bucket and preload data into it. The preloaded

data is located in dev/local_data/gcs_emulator/. Say if you want to preload

a JSON file top_picks_latest.json into a bucket merino-images-prodpy, you

can create a new sub-directory merino-images-prody in gcs_emulator and then

create or copy top_picks_latest.json into it. Then you can set Merino's

configurations to use those artifacts in the GCS emulator.

# File layout of the preloaded GCS data

dev/local_data

└── gcs_emulator

└── merino-images-prodpy <- GCS Bucket ID

└── top_picks_latest.json <- A preloaded GCS blob

Note that dev/local_data will not be checked into the source control nor the

docker image of Merino.

Dev Helpers

The docker-compose setup also includes some services that can help during development.

- Redis Commander, http://localhost:8081 - Explore the Redis database started above.

Logging and Metrics

To get data out of Merino and into observable systems, we use metrics and logging. Each has a unique use case. Note that in general, because of the scale we work at, adding a metric or log event in production is not free, and if we are careless can end up costing quite a bit. Record what is needed, but don't go over board.

All data collection that happens in production (logging at INFO, WARN, or ERROR

levels; and metrics) should be documented in docs/data.md.

Logging

Merino uses MozLog for structured logging. Logs can be recorded through the

standard Python logging module. Merino can output logs in various formats,

including a JSON format (MozLog) for production. A pretty, human readable format

is also provided for development and other use cases.

Types

MozLog requires that all messages have a type value. By convention, we use

the name of the Python module, where the log record get issued, to populate this

field. For example:

import logging

logger = logging.getLogger(__name__)

# The `type` field of the log record will be the same as `__name__`.

logger.info("A new log message", data=extra_fields)

In general, the log message ("An empty MultiProvider was created") and the log type should both tell the reader what has happened. The difference is that the message is for humans and the type is for machines.

Levels

Tracing provides five log levels that should be familiar. This is what we mean by them in Merino:

-

CRITICAL- There was a serious error indicating that the program itself may be unable to continue running. -

ERROR- There was a problem, and the task was not completable. This usually results in a 500 being sent to the user. All error logs encountered in production are reported to Sentry and should be considered a bug. If it isn't a bug, it shouldn't be logged as an error. -

WARNING- There was a problem, but the task was able to recover. This doesn't usually affect what the user sees. Warnings are suitable for unexpected but "in-spec" issues, like a sync job not returning an empty set or using a deprecated function. These are not reported to Sentry. -

INFO- This is the default level of the production service. Use for logging that something happened that isn't a problem and we care about in production. This is the level that Merino uses for it's one-per-request logs and sync status messages. Be careful adding new per-request logs at this level, as they can be expensive. -

DEBUG- This is the default level for developers running code locally. Use this to give insight into how the system is working, but keep in mind that this will be on by default, so don't be too noisy. Generally this should summarize what's happening, but not give the small details like a log line for every iteration of a loop. Since this is off in production, there are no cost concerns.

Metrics

Merino metrics are reported as Statsd metrics.

Unlike logging, the primary way that metrics reporting can cost a lot is in cardinality. The number of metric IDs we have and the combination of tag values that we supply. Often the number of individual events doesn't matter as much, since multiple events are aggregated together.

Middlwares

Merino leverages middleware for various functionalities such as logging, metrics,

parsing for geolocation & user agent, feature flags etc. Middleware is defined

in the merino/middleware directory.

Caveat

We currently don't implement middleware using the middleware facilities provided by FastAPI/Starlette as they've shown significant performance overhead, preventing Merino from achieving the SLOs required by Firefox Suggest.

Before those performance issues get resolved in the upstream, we will be implementing

middleware for Merino through the ASGI protocol. You can also reference this

tutorial to learn more about ASGI. See Starlette's middleware document

for more details about how to write pure ASGI middlewares. Specifically, we can reuse

Starlette's data structures (Request, Headers, QueryParams etc.) to facilitate

the implementation.

Feature Flags

Usage

Do you plan to release code behind a feature flag? Great! 😃

Your feature flag needs to be defined first. If it's already defined, go ahead. Otherwise check the configuration section below before you continue.

Use the following line in API endpoint code to gain access to the feature flags object:

feature_flags: FeatureFlags = request.scope[ScopeKey.FEATURE_FLAGS]

Then check whether a certain feature flag, such as example, is enabled by calling:

if feature_flags.is_enabled("example"):

print("feature flag 'example' is enabled! 🚀")

When you do that, the decision (whether the feature flag is enabled or not) is

recorded and stored in a dict on the decisions attribute of the feature

flags object.

Implementation

The feature flags system in Merino consists of three components:

| Description | Location |

|---|---|

A FastAPI middleware that reads the query parameter sid sent by the client application and sets a session ID for the current request based on that. | merino/middleware/featureflags.py |

A FeatureFlags class which you can use to check if a certain feature flag is enabled. | merino/featureflags.py |

| A local directory containing static files that define and configure feature flags for Merino. | merino/configs/flags/ |

Configuration

Currently two bucketing schemes are supported: random and session.

Random

Random does what it says on the tin. It generates a random bucketing ID for every flag check.

Session

Session bucketing uses the session ID of the request as the bucketing key so that feature checks within a given search session would be consistent.

Fields

Each flag defines the following fields:

[default.flags.<flag_name>]

scheme = 'session'

enabled = 0.5

| Field | Description |

|---|---|

scheme | This is the bucketing scheme for the flag. Allowed values are 'random' and 'session' |

enabled | This represents the % enabled for the flag and must be a float between 0 and 1 |

Metrics

When submitting application metrics, feature flag decisions that were made while processing the current request up to this point are automatically added as tags to the emitted metrics.

The format of these tags is:

feature_flag.<feature_flag_name>

For more information about this see the ClientMeta meta class and the

add_feature_flags decorator in merino/metrics.py.

Monitoring in Grafana

Because feature flag decisions are automatically added as tags to emitted metrics, you can use them in your queries in Grafana. 📈

For example, if you want to group by decisions for a feature flag with name

hello_world, you can use tag(feature_flag.hello_world) in GROUP BY in

Grafana. You can also use [[tag_feature_flag.hello_world]] in the ALIAS for

panel legends.

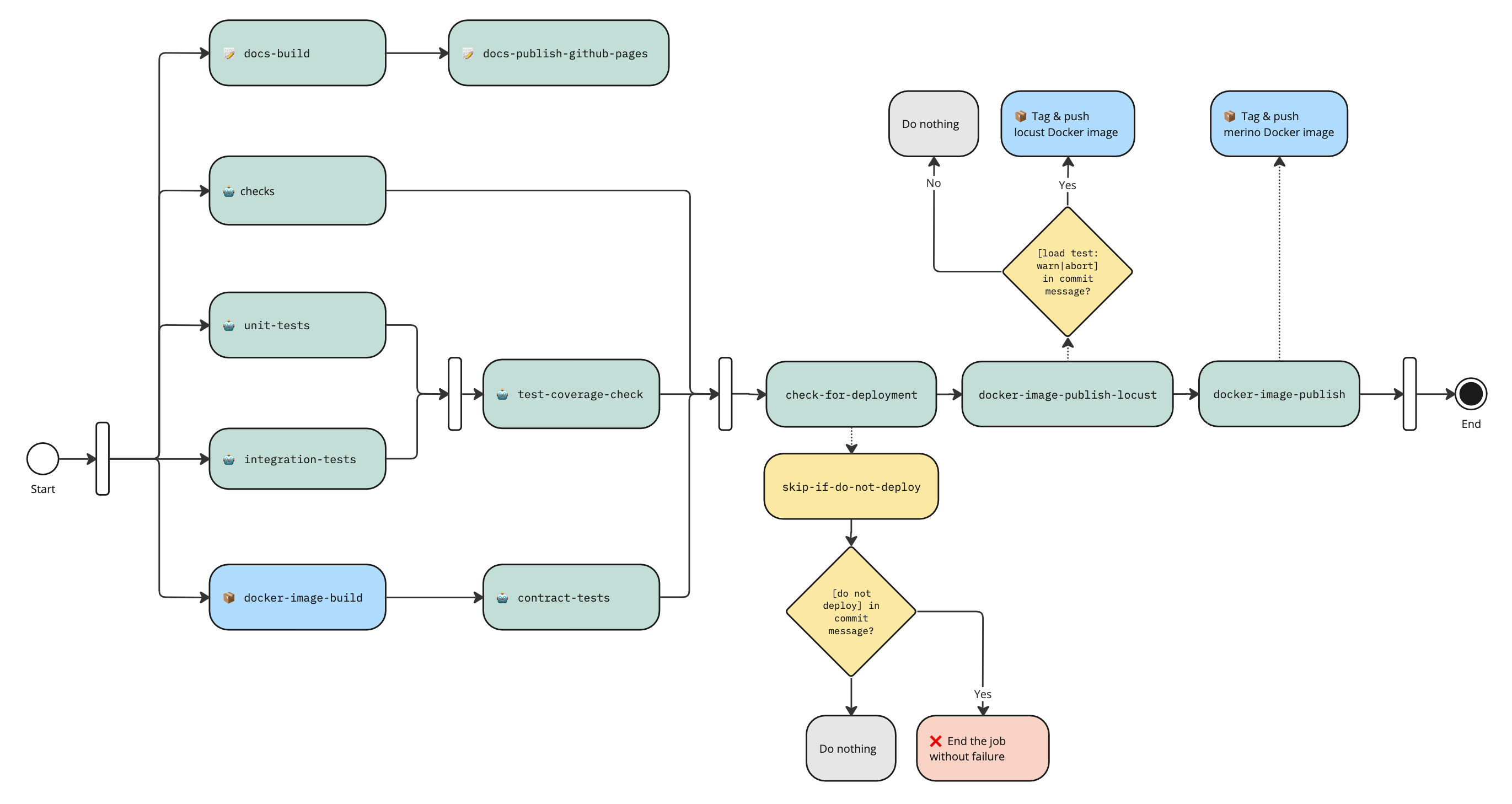

The Release Process

This project currently follows a Continuous Deployment process.

Whenever a commit is pushed to this repository's main branch, a CircleCI workflow is triggered

which performs code checks and runs automated tests. The workflow additionally builds a new Docker

image of the service and pushes that Docker image to the Docker Hub registry (this requires all

previous jobs to pass).

Pushing a new Docker image to the Docker Hub registry triggers a webhook that starts the Jenkins

deployment pipeline (the Docker image tag determines the target environment). The deployment

pipeline first deploys to the stage environment and subsequently to the

production environment.

After the deployment is complete, accessing the __version__ endpoint will show

the commit hash of the deployed version, which will eventually match to the one of the latest commit

on the main branch (a node with an older version might still serve the request before it is shut

down).

Release Best Practices

The expectation is that the author of the change will:

- merge pull requests during hours when the majority of contributors are online

- monitor the [Merino Application & Infrastructure][merino_app_info] dashboard for any anomaly

Versioning

The commit hash of the deployed code is considered its version identifier. The commit hash can be

retrieved locally via git rev-parse HEAD.

Load Testing

Load testing can be performed either locally or during the deployment process. During deployment, load tests are run against the staging environment before Merino-py is promoted to production.

Load tests in continuous deployment are controlled by adding a specific label to the commit message

being deployed. The format for the label is [load test: (abort|skip|warn)]. Typically, this label

is added to the merge commit created when a GitHub pull request is integrated.

abort: Stops the deployment if the load test fails.skip: Skips load testing entirely during deployment.warn: Proceeds with the deployment even if the load test fails, but sends a warning notification through Slack.

If no label is included in the commit message, the default behavior is to run the load test and issue a warning if it fails.

For more detailed information about load testing procedures and conventions, please refer to the Load Test README.

Logs from load tests executed in continuous deployment are available in the /data volume of the

Locust master kubernetes pod.

What to do if production breaks?

If your latest release causes problems and needs to be rolled back: don't panic and follow the instructions in the Rollback Runbook.

What to do if tests fail during deployment?

Please refer to What to do with Test Failures in CI?

Profiling

As Merino runs as a single-threaded application using the asyncio-based framework, it would be useful for engineers to get a good understanding about how Merino performs and where it spends time and memory doing what tasks to serve the requests. Local profiling offers us a way to look into those low-level details.

We use Scalene as the profiler to conduct the profiling for Merino. It's very easy to use, offers extremely detailed (at the line level) insights with much lower overhead compared to other profilers.

Usage

To start the profiling, you can run the following to start Merino with Scalene:

$ make profile

# or you can run it directly

$ python -m scalene merino/main.py

Then you can send requests to Merino manually or through using other load testing tools. Once that's done, you can terminate the Merino application. It will automatically collect profiling outputs (CPU & Memory) and open it in your browser.

Understand the outputs

Out of the box, Scalene provides a very intuitive web interface to display the profiling outputs. It's organized at the file (module) level. For each file, it shows the CPU time and average memory usage for both the line profile and the function profile of that module. You can also click on specific columns to sort the lines or functions accordingly.

For more details of how to read the outputs, you can reference Scalene's documents.

Equipped with those insights, you can have a good understanding about the application, identify hotspots, bottlenecks, or other findings that are not easy to uncover by only reading the source code. And then, you can tweak or fix those issues, test or profile it again to verify if the fix is working.

Merino Testing

Test Strategy

Merino is tested using a combination of functional and performance tests.

Test code resides in the tests directory.

Merino's test strategy requires that we do not go below a minimum test coverage percentage for unit and integration tests. Load tests cannot go below a minimum performance threshold.

Functional tests push test artifacts, in the form of JUnit XMLs and Coverage JSONs to the ETE Test Metric Pipeline for trending and monitoring. Results can be found on the Merino-py Test Metrics Looker Dashboard.

Test documentation resides in the /docs/testing/ directory.

The test strategy is three-tiered, composed of:

See documentation and repositories in each given test area for specific details on running and maintaining tests.

Unit Tests

The unit layer is suitable for testing complex behavior at a small scale, with fine-grained control over the inputs. Due to their narrow scope, unit tests are fundamental to thorough test coverage.

To execute unit tests, use: make unit-tests

Unit tests are written and executed with pytest and are located in the tests/unit directory,

using the same organizational structure as the source code of the merino service.

Type aliases dedicated for test should be stored in the types.py module.

The conftest.py modules contain common utilities in fixtures.

For a breakdown of fixtures in use per test, use: make unit-test-fixtures

Fixtures

Available fixtures include:

FilterCaplogFixture

Useful when verifying log messages, this fixture filters log records captured with

pytest's caplog by a given logger_name.

Usage:

def test_with_filter_caplog(

caplog: LogCaptureFixture, filter_caplog: FilterCaplogFixture

) -> None:

records: list[LogRecord] = filter_caplog(caplog.records, "merino.providers.suggest.adm")

Note: This fixture is shared with integration tests.

SuggestionRequestFixture

For use when querying providers, this fixture creates a SuggestionRequest object with

a given query

Usage:

def test_with_suggestion_request(srequest: SuggestionRequestFixture) -> None:

request: SuggestionRequest = srequest("example")

result: list[BaseSuggestion] = await provider.query(request)

ScopeFixture, ReceiveMockFixture & SendMockFixture

For use when testing middleware, these fixtures initialize or mock the common Scope, Receive and Send object dependencies.

Usage:

def test_middleware(scope: Scope, receive_mock: Receive, send_mock: Send) -> None:

pass

Integration Tests

The integration layer of testing allows for verification of interactions between service components, with lower development, maintenance and execution costs compared with higher level tests.

To execute integration tests, make sure you have Docker installed and a docker daemon running. Then use: make integration-tests

Integration tests are located in the tests/integration directory.

They use pytest and the FastAPI TestClient to send requests to specific merino endpoints and verify responses as well as other outputs, such as logs.

Tests are organized according to the API path under test.

Type aliases dedicated for test should be stored in the types.py module.

Fake providers created for test should be stored in the fake_providers.py module.

The conftest.py modules contain common utilities in fixtures.

We have also added integration tests that use Docker via the testcontainers library. See fixture example below.

For a breakdown of fixtures in use per test, use: make integration-test-fixtures

Fixtures

Available fixtures include:

FilterCaplogFixture

Details available in Unit Tests section

TestClientFixture

This fixture creates an instance of the TestClient to be used in testing API calls.

Usage:

def test_with_test_client(client: TestClient):

response: Response = client.get("/api/v1/endpoint")

TestClientWithEventsFixture

This fixture creates an instance of the TestClient, that will trigger event handlers

(i.e. startup and shutdown) to be used in testing API calls.

Usage:

def test_with_test_client_with_event(client_with_events: TestClient):

response: Response = client_with_events.get("/api/v1/endpoint")

InjectProvidersFixture & ProvidersFixture

These fixture will setup and teardown given providers.

Usage:

If specifying providers for a module:

@pytest.fixture(name="providers")

def fixture_providers() -> Providers:

return {"test-provider": TestProvider()}

If specifying providers for a test:

@pytest.mark.parametrize("providers", [{"test-provider": TestProvider()}])

def test_with_provider() -> None:

pass

SetupProvidersFixture

This fixture sets application provider dependency overrides.

Usage:

def test_with_setup_providers(setup_providers: SetupProvidersFixture):

providers: dict[str, BaseProvider] = {"test-provider": TestProvider()}

setup_providers(providers)

TeardownProvidersFixture

This fixture resets application provider dependency overrides and is often used in teardown fixtures.

Usage:

@pytest.fixture(autouse=True)

def teardown(teardown_providers: TeardownProvidersFixture):

yield

teardown_providers()

TestcontainersFixture

See tests/integration/jobs/navigational_suggestions/test_domain_metadata_uploader.py for a detailed example.

This is a lightweight example on how to set up a docker container for your integration tests.

Usage:

@pytest.fixture(scope="module")

def your_docker_container() -> DockerContainer:

os.environ.setdefault("STORAGE_EMULATOR_HOST", "http://localhost:4443")

container = (

DockerContainer("your-docker-image")

.with_command("-scheme http")

.with_bind_ports(4443, 4443)

).start()

# wait for the container to start and emit logs

delay = wait_for_logs(container, "server started at")

port = container.get_exposed_port(4443)

yield container

container.stop()

Merino Load (Locust) Tests

This documentation describes the load tests for Merino. This test framework uses IP2Location LITE data available from https://lite.ip2location.com

Overview

The tests in the tests/load directory spawn multiple HTTP clients that consume Merino's API,

in order to simulate real-world load on the Merino infrastructure.

These tests use the Locust framework and are triggered at the discretion of the Merino Engineering Team.

Related Documentation

Local Execution

Note that if you make changes to the load test code, you must stop and remove the Docker containers and networks for changes to reflect.

Do this by running make load-tests-clean.

Follow the steps bellow to execute the load tests locally:

Setup Environment

1. Configure Environment Variables

The following environment variables as well as

Locust environment variables can be set in

tests\load\docker-compose.yml.

Make sure any required API key is added but then not checked into source control.

WARNING: if the WIKIPEDIA__ES_API_KEY is missing, the load tests will not execute.

| Environment Variable | Node(s) | Description |

|---|---|---|

| LOAD_TESTS__LOGGING_LEVEL | master & worker | Level for the logger in the load tests as an int (10 for DEBUG, 20 for INFO etc.) |

| MERINO_REMOTE_SETTINGS__SERVER | master & worker | Server URL of the Kinto instance containing suggestions |

| MERINO_REMOTE_SETTINGS__BUCKET | master & worker | Kinto bucket with the suggestions |

| MERINO_REMOTE_SETTINGS__COLLECTION | master & worker | Kinto collection with the suggestions |

| MERINO_PROVIDERS__TOP_PICKS__TOP_PICKS_FILE_PATH | master & worker | The minimum character limit set for long domain suggestion indexing |

| MERINO_PROVIDERS__TOP_PICKS__QUERY_CHAR_LIMIT | master & worker | The minimum character limit set for short domain suggestion indexing |

| MERINO_PROVIDERS__TOP_PICKS__FIREFOX_CHAR_LIMIT | master & worker | File path to the json file of domains |

| MERINO_PROVIDERS__WIKIPEDIA__ES_API_KEY | master & worker | The base64 key used to authenticate on the Elasticsearch cluster specified by es_cloud_id |

| MERINO_PROVIDERS__WIKIPEDIA__ES_URL | master & worker | The Cloud ID of the Elasticsearch cluster |

| MERINO_PROVIDERS__WIKIPEDIA__ES_INDEX | master & worker | The index identifier of Wikipedia in Elasticsearch |

2. Host Locust via Docker

Execute the following from the repository root:

make load-tests

3. (Optional) Host Merino Locally

Use one of the following commands to host Merino locally. Execute the following from the repository root:

- Option 1: Use the local development instance

make dev - Option 2: Use the profiler instance

make profile - Option 3: Use the Docker instance

make docker-build && docker run -p 8000:8000 app:build

Run Test Session

1. Start Load Test

- In a browser navigate to

http://localhost:8089/ - Set up the load test parameters:

- Option 1: Select the

MerinoSmokeLoadTestShapeorMerinoAverageLoadTestShape- These options have pre-defined settings

- Option 2: Select the

Defaultload test shape with the following recommended settings:- Number of users: 25

- Spawn rate: 1

- Host: 'https://merino.services.allizom.org'

- Set host to 'http://host.docker.internal:8000' to test against a local instance of Merino

- Duration (Optional): 10m

- Option 1: Select the

- Select "Start Swarming"

2. Stop Load Test

Select the 'Stop' button in the top right hand corner of the Locust UI, after the desired test duration has elapsed. If the 'Run time' is set in step 1, the load test will stop automatically.

3. Analyse Results

- See Distributed GCP Execution (Manual Trigger) - Analyse Results

- Only client-side measures, provided by Locust, are available when executing against a local instance of Merino.

Clean-up Environment

1. Remove Load Test Docker Containers

Execute the following from the repository root:

make load-tests-clean

Distributed GCP Execution - Manual Trigger

Follow the steps bellow to execute the distributed load tests on GCP with a manual trigger:

Setup Environment

1. Start a GCP Cloud Shell

The load tests can be executed from the contextual-services-test-eng cloud shell.

2. Configure the Bash Script

- The

setup_k8s.shfile, located in thetests\loaddirectory, contains shell commands to create a GKE cluster, setup an existing GKE cluster or delete a GKE cluster- Modify the script to include the MERINO_PROVIDERS__WIKIPEDIA__ES_API_KEY environment variables

- Execute the following from the root directory, to make the file executable:

chmod +x tests/load/setup_k8s.sh

3. Create the GCP Cluster

- Execute the

setup_k8s.shfile and select the create option, in order to initiate the process of creating a cluster, setting up the env variables and building the docker image. Choose smoke or average depending on the type of load test required../tests/load/setup_k8s.sh create [smoke|average]- Smoke - The smoke load test verifies the system's performance under minimal load. The test is run for a short period, possibly in CD, to ensure the system is working correctly.

- Average - The average load test measures the system's performance under standard operational conditions. The test is meant to reflect an ordinary day in production.

- The cluster creation process will take some time. It is considered complete, once

an external IP is assigned to the

locust_masternode. Monitor the assignment via a watch loop:kubectl get svc locust-master --watch - The number of workers is defaulted to 5, but can be modified with the

kubectl scalecommand. Example (10 workers):kubectl scale deployment/locust-worker --replicas=10 - To apply new changes to an existing GCP Cluster, execute the

setup_k8s.shfile and select the setup option.- This option will consider the local commit history, creating new containers and deploying them (see Artifact Registry)

Run Test Session

1. Start Load Test

-

In a browser navigate to

http://$EXTERNAL_IP:8089This url can be generated via command

EXTERNAL_IP=$(kubectl get svc locust-master -o jsonpath="{.status.loadBalancer.ingress[0].ip}") echo http://$EXTERNAL_IP:8089 -

Select the

MerinoSmokeLoadTestShape, this option has pre-defined settings and will last 5 minutes -

Select "Start Swarming"

2. Stop Load Test

Select the 'Stop' button in the top right hand corner of the Locust UI, after the desired test duration has elapsed. If the 'Run time' is set in step 1, the load test will stop automatically.

3. Analyse Results

RPS

- The request-per-second load target for Merino is

1500 - Locust reports client-side RPS via the "merino_stats.csv" file and the UI (under the "Statistics" tab or the "Charts" tab)

- Grafana reports the server-side RPS via the "HTTP requests per second per country" chart

HTTP Request Failures

- The number of responses with errors (5xx response codes) should be

0 - Locust reports Failures via the "merino_failures.csv" file and the UI (under the "Failures" tab or the "Charts" tab)

- Grafana reports Failures via the "HTTP Response codes" chart and the "HTTP 5xx error rate" chart

Exceptions

- The number of exceptions raised by the test framework should be

0 - Locust reports Exceptions via the "merino_exceptions.csv" file and the UI (under the "Exceptions" tab)

Latency

- The HTTP client-side response time (aka request duration) for 95 percent of users

is required to be 200ms or less (

p95 <= 200ms), excluding weather requests - Locust reports client-side latency via the "merino_stats.csv" file and the UI

(under the "Statistics" tab or the "Charts" tab)

- Warning! A Locust worker with too many users will bottleneck RPS and inflate client-side latency measures. Locust reports worker CPU and memory usage metrics via the UI (under the "Workers" tab)

- Grafana reports server-side latency via the "p95 latency" chart

Resource Consumption

- To conserve costs, resource allocation must be kept to a minimum. It is expected that container, CPU and memory usage should trend consistently between load test runs.

- Grafana reports metrics on resources via the "Container Count", "CPU usage time sum" and "Memory usage sum" charts

4. Report Results

- Results should be recorded in the Merino Load Test Spreadsheet

- Optionally, the Locust reports can be saved and linked in the spreadsheet:

- Download the results via the Locust UI or via command:

Thekubectl cp <master-pod-name>:/home/locust/merino_stats.csv merino_stats.csv kubectl cp <master-pod-name>:/home/locust/merino_exceptions.csv merino_exceptions.csv kubectl cp <master-pod-name>:/home/locust/merino_failures.csv merino_failures.csvmaster-pod-namecan be found at the top of the pod list:kubectl get pods -o wide - Upload the files to the ConServ drive and record the links in the spreadsheet

- Download the results via the Locust UI or via command:

Clean-up Environment

1. Delete the GCP Cluster

Execute the setup_k8s.sh file and select the delete option

./tests/load/setup_k8s.sh

Distributed GCP Execution - CI Trigger

The load tests are triggered in CI via Jenkins, which has a command overriding the load test Dockerfile entrypoint.

Follow the steps below to execute the distributed load tests on GCP with a CI trigger:

Run Test Session

1. Execute Load Test

To modify the load testing behavior, you must include a label in your Git commit. This must be the

merge commit on the main branch, since only the most recent commit is checked for the label. The

label format is: [load test: (abort|skip|warn)]. Take careful note of correct syntax and spacing

within the label. There are three options for load tests: abort, skip, and warn:

- The

abortlabel will prevent a prod deployment if the load test fails

Ex.feat: Add feature ABC [load test: abort]. - The

skiplabel will bypass load testing entirely during deployment

Ex.feat: Add feature LMN [load test: skip]. - The

warnlabel will output a Slack warning if the load test fails but still allow for the production deployment

Ex.feat: Add feature XYZ [load test: warn].

If no label is included in the commit message, the load test will be executed with the warn

action.

The commit tag signals load test instructions to Jenkins by modifying the Docker image tag. The

Jenkins deployment workflow first deploys to stage and then runs load tests if requested. The

Docker image tag passed to Jenkins appears as follows:

^(?P<environment>stage|prod)(?:-(?P<task>\w+)-(?P<action>abort|skip|warn))?-(?P<commit>[a-z0-9]+)$

2. Analyse Results

See Distributed GCP Execution (Manual Trigger) - Analyse Results

3. Report Results

- Optionally, results can be recorded in the Merino Load Test Spreadsheet. It is recommended to do so if unusual behavior is observed during load test execution or if the load tests fail.

- The Locust reports can be saved and linked in the spreadsheet. The results are persisted in the

/datadirectory of thelocust-master-0pod in thelocust-masterk8s cluster in the GCP project ofmerino-nonprod. To access the Locust logs:- Open a cloud shell in the Merino stage environment

- Authenticate by executing the following command:

gcloud container clusters get-credentials merino-nonprod-v1 \ --region us-west1 --project moz-fx-merino-nonprod-ee93 - Identify the log files needed in the Kubernetes pod by executing the following command, which

lists the log files along with file creation timestamp when the test was performed. The

{run-id}uniquely identifies each load test run:kubectl exec -n locust-merino locust-master-0 -- ls -al /data/ - Download the results via the Locust UI or via command:

kubectl -n locust-merino cp locust-master-0:/data/{run-id}-merino_stats.csv merino_stats.csv kubectl -n locust-merino cp locust-master-0:/data/{run-id}-merino_exceptions.csv merino_exceptions.csv kubectl -n locust-merino cp locust-master-0:/data/{run-id}-merino_failures.csv merino_failures.csv - Upload the files to the ConServ drive and record the links in the spreadsheet

Calibration

Following the addition of new features, such as a Locust Task or Locust User, or environmental changes, such as node size or the upgrade of a major dependency like the python version image, it may be necessary to re-establish the recommended parameters of the performance test.

| Parameter | Description |

|---|---|

WAIT TIME | - Changing this cadence will increase or decrease the number of channel subscriptions and notifications sent by a MerinoUser. - The default is currently in use for the MerinoUser class. |

TASK WEIGHT | - Changing this weight impacts the probability of a task being chosen for execution. - This value is hardcoded in the task decorators of the MerinoUser class. |

USERS_PER_WORKER | - This value should be set to the maximum number of users a Locust worker can support given CPU and memory constraints. - This value is hardcoded in the LoadTestShape classes. |

WORKER_COUNT | - This value is derived by dividing the total number of users needed for the performance test by the USERS_PER_WORKER. - This value is hardcoded in the LoadTestShape classes and the setup_k8s.sh script. |

- Locust documentation is available for [WAIT TIME][13] and [TASK WEIGHT][14]

Calibrating for USERS_PER_WORKER

This process is used to determine the number of users that a Locust worker can support.

Setup Environment

1. Start a GCP Cloud Shell

The load tests can be executed from the contextual-services-test-eng cloud shell. If executing a load test for the first time, the git merino-py repository will need to be cloned locally.

2. Configure the Bash Script

- The

setup_k8s.shfile, located in thetests\loaddirectory, contains shell commands to create a GKE cluster, setup an existing GKE cluster or delete a GKE cluster- Execute the following from the root directory, to make the file executable:

chmod +x tests/load/setup_k8s.sh

- Execute the following from the root directory, to make the file executable:

3. Create the GCP Cluster

- In the

setup_k8s.shscript, modify theWORKER_COUNTvariable to equal1 - Execute the

setup_k8s.shfile from the root directory and select the create option, in order to initiate the process of creating a cluster, setting up the env variables and building the docker image. Choose smoke or average depending on the type of load test required../tests/load/setup_k8s.sh create [smoke|average] - The cluster creation process will take some time. It is considered complete, once

an external IP is assigned to the

locust_masternode. Monitor the assignment via a watch loop:kubectl get svc locust-master --watch

Calibrate

Repeat steps 1 to 3, using a process of elimination, such as the bisection method, to

determine the maximum USERS_PER_WORKER. The load tests are considered optimized when

CPU and memory resources are maximally utilized. This step is meant to determine the

maximum user count that a node can accommodate by observing CPU and memory usage while

steadily increasing or decreasing the user count. You can monitor the CPU percentage in

the Locust UI but also in the Kubernetes engine Workloads tab where both memory and CPU

are visualized on charts.

1. Start Load Test

- In a browser navigate to

http://$EXTERNAL_IP:8089This url can be generated via commandEXTERNAL_IP=$(kubectl get svc locust-master -o jsonpath="{.status.loadBalancer.ingress[0].ip}") echo http://$EXTERNAL_IP:8089 - Set up the load test parameters:

- ShapeClass: Default

- UserClasses: MerinoUser

- Number of users: USERS_PER_WORKER (Consult the Merino_spreadsheet to determine a starting point)

- Ramp up: RAMP_UP (RAMP_UP = 5/USERS_PER_WORKER)

- Host: 'https://merino.services.allizom.org'

- Duration (Optional): 600s

- Select "Start Swarm"

2. Stop Load Test

Select the 'Stop' button in the top right hand corner of the Locust UI, after the desired test duration has elapsed. If the 'Run time' or 'Duration' is set in step 1, the load test will stop automatically.

3. Analyse Results

CPU and Memory Resource Graphs

- CPU and Memory usage should be less than 90% of the available capacity

- CPU and Memory Resources can be observed in Google Cloud > Kubernetes Engine > Workloads

Log Errors or Warnings

- Locust will emit errors or warnings if high CPU or memory usage occurs during the

execution of a load test. The presence of these logs is a strong indication that the