Merino Jobs Operations

Navigational Suggestions

This document provides instructions and documentation on the navigational suggestions job.

This job creates a file that is ingested by the Top Picks/Navigational Suggestions provider.

The provider indexes a collection of the top 1000 searched domains and generates the top_picks.json file. The provider backend can then serve suggestions by matching the query terms typed by the client against second-level domains.

If you need to run the navigational suggestions job ad hoc, the quickest recommended approach is to run it in Airflow, download the top_picks.json file sent by email, and then replace the existing file in the Merino repo with the newly generated one.

If you need to update the blocklist to prevent certain domains and suggestions from being displayed, see the navigational suggestions blocklist runbook.

Running the job in Airflow

Normally, the job runs on a cron schedule as a DAG in Airflow. There may be instances in which you need to manually re-run the job from the Airflow dashboard.

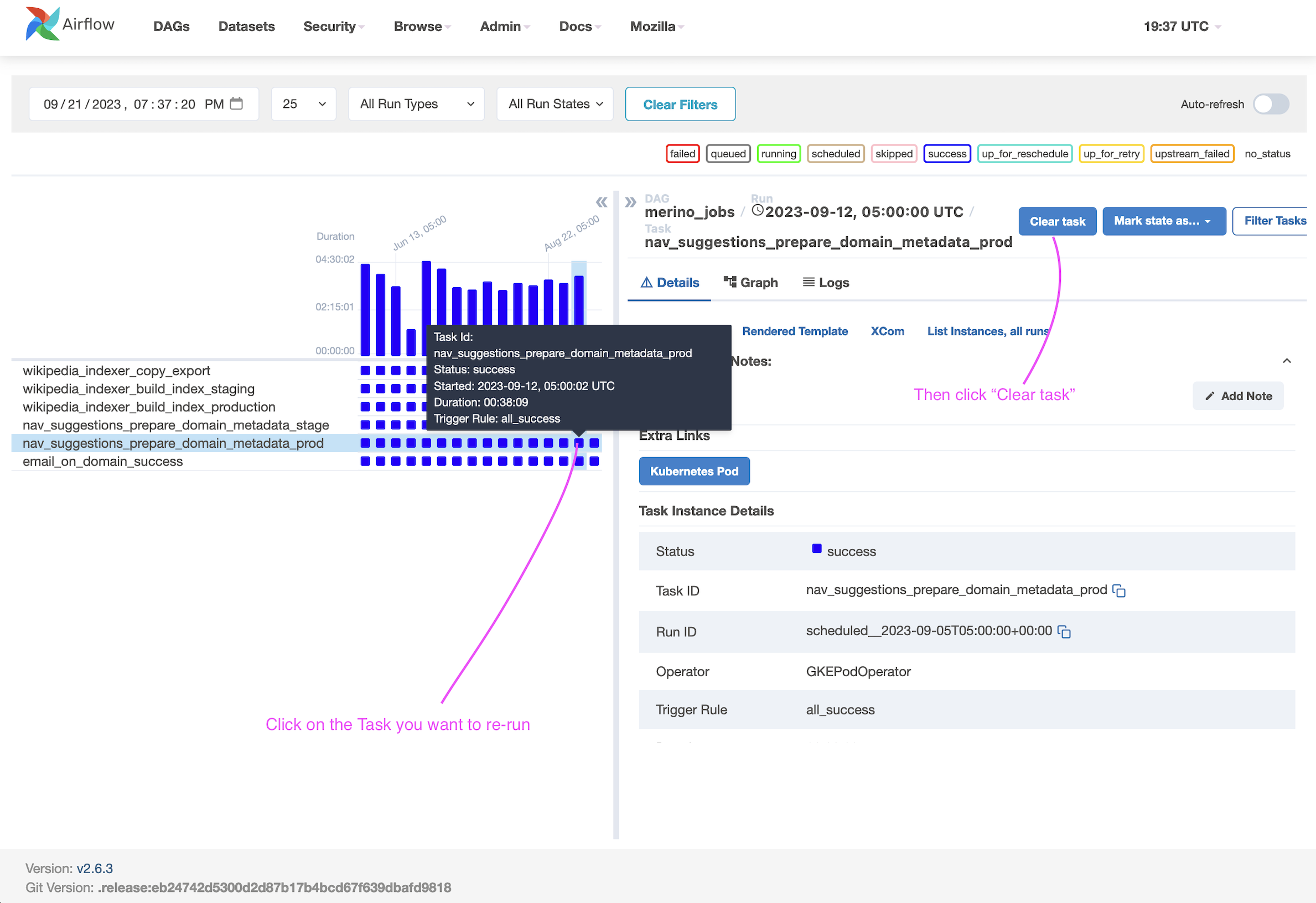

Grid View Tab (Airflow UI)

- Visit the Airflow dashboard for merino_jobs.

- In the Grid View Tab, select the task you want to re-run.

- Click on 'Clear Task' and the executor will re-run the job.

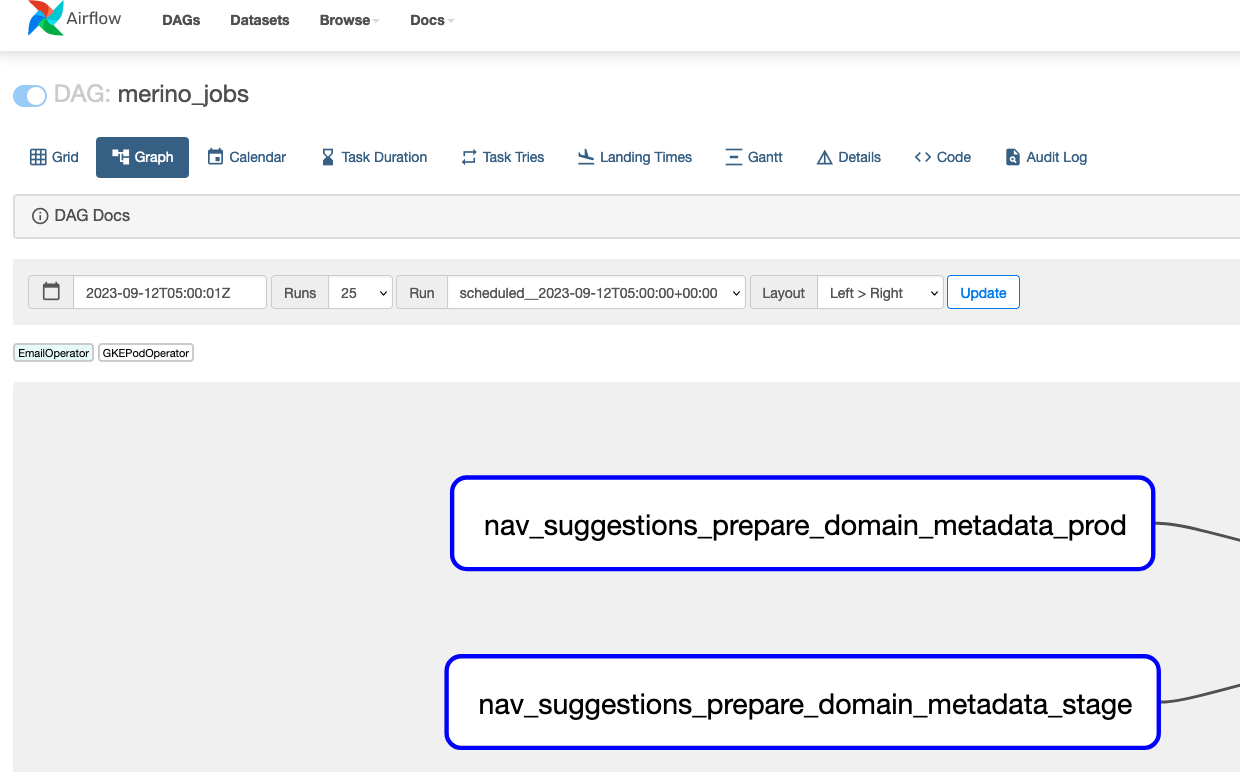

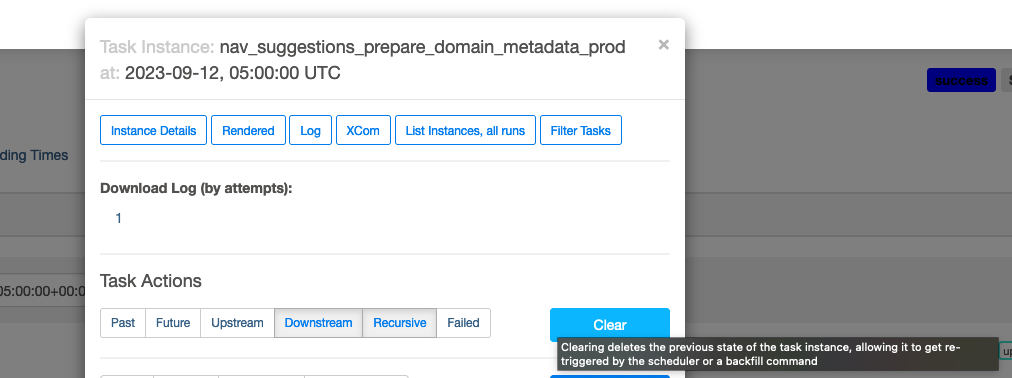

Graph View Tab (Airflow UI) - Alternative

- Visit the Airflow dashboard for merino_jobs.

- From the Graph View Tab, click on the nav_suggestions_prepare_domain_metadata_prod task.

- Click on 'Clear' and the job will re-run.

At the conclusion of the job, you should receive an email with a link to the newly generated file. Ensure you are a member of the disco-team email distro group to receive the email.

Note: You can also re-run the stage job, but the changes won't be reflected in production. After an error, re-run stage first to verify the fix before running in prod.

See Airflow's documentation on re-running DAGs for more information and implementation details.

To see the code for the merino_jobs DAG, visit the telemetry-airflow repo. The source for the job is also in the 'code' tab in the Airflow console.

To see the navigational suggestions code that is run when the job is invoked, visit Merino jobs/navigational_suggestions.

Running the favicon extractor locally

$ uv run probe-images mozilla.org wikipedia.org

There is a Python script (domain_tester.py) which imports the DomainProcessor, WebScraper and AsyncFaviconDownloader and runs them locally, without saving the results to the cloud.

This is meant to troubleshoot domains locally and iterate over the functionality in a contained environment.

Example output:

$ uv run probe-images mozilla.org wikipedia.org

Testing domain: mozilla.org

✅ Success!

Title Mozilla - Internet for people, not profit (UK)

Best Icon https://dummy-cdn.example.com/favicons/bd67680f7da3564bace91b2be87feab16d5e9e6266355b8f082e21f8159…

Total Favicons 4

All favicons found:

- https://www.mozilla.org/media/img/favicons/mozilla/apple-touch-icon.05aa000f6748.png (rel=apple-touch-icon size=180x180 type=image/png)

- https://www.mozilla.org/media/img/favicons/mozilla/favicon-196x196.e143075360ea.png (rel=icon size=196x196 type=image/png)

- https://www.mozilla.org/media/img/favicons/mozilla/favicon.d0be64e474b1.ico (rel=shortcut,icon)

Testing domain: wikipedia.org

✅ Success!

Title Wikipedia

Best Icon https://dummy-cdn.example.com/favicons/4c8bf96d667fa2e9f072bdd8e9f25c8ba6ba2ad55df1af7d9ea0dd575c1…

Total Favicons 3

All favicons found:

- https://www.wikipedia.org/static/apple-touch/wikipedia.png (rel=apple-touch-icon)

- https://www.wikipedia.org/static/favicon/wikipedia.ico (rel=shortcut,icon)

Summary: 2/2 domains processed successfully

Adding new domains

- You can add new domains to the job by adding them to the /merino/jobs/navigational_suggestions/custom_domains.py file.

- You can do this manually, or use a script inside the /scripts folder.

- The script is called import_domains.sh. It works with a CSV file that has a REGISTERED_DOMAIN header; starting from the second row, the first column is the domain name (e.g. getpocket.com).

This step was introduced to give the HNT team an easy way of importing their updated domains.

Execute the script like this:

$ ./scripts/import_domains.sh DOWNLOADED_FILE.csv

For each domain in the CSV file, the script checks whether it already exists in the custom_domains.py file and adds it if it does not. Afterwards, all domains are sorted alphabetically.

Note

- Subdomains are supported and treated as distinct domains. For example, sub.example.com is different from example.com and can be added separately.

- Duplicate checks are done by exact domain string, not by apex/normalized form. If a custom domain exactly matches an existing domain, it will be skipped and logged as:

Skipped duplicate domains: <domain>.
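The import behavior described above (read the CSV, skip exact-string duplicates, sort alphabetically) can be sketched in Python roughly as follows. This is an illustration only, not the contents of import_domains.sh: `merge_domains` is a hypothetical helper, the existing domains are assumed to be available as a plain list of strings, and the REGISTERED_DOMAIN header is assumed to appear in the first row.

```python
import csv
import io


def merge_domains(existing: list[str], csv_text: str) -> tuple[list[str], list[str]]:
    """Merge domains from CSV text into the existing list.

    Returns (alphabetically sorted merged domains, skipped duplicate domains).
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if not header or "REGISTERED_DOMAIN" not in (cell.strip() for cell in header):
        raise ValueError("expected a REGISTERED_DOMAIN header row")

    known = set(existing)
    skipped: list[str] = []
    for row in reader:
        # Ignore blank lines and rows with an empty first column.
        if not row or not row[0].strip():
            continue
        domain = row[0].strip()
        # Exact string comparison: sub.example.com and example.com are distinct.
        if domain in known:
            skipped.append(domain)
        else:
            known.add(domain)
    return sorted(known), skipped


merged, skipped = merge_domains(
    ["example.com", "getpocket.com"],
    "REGISTERED_DOMAIN\ngetpocket.com\nsub.example.com\nmozilla.org\n",
)
print(merged)
print("Skipped duplicate domains:", ", ".join(skipped))
```

Because the comparison is on the exact string, sub.example.com is added even though example.com is already present, while the repeated getpocket.com is skipped.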

Running the Navigational Suggestions job locally

The Navigational Suggestions job can be run locally for development and testing purposes without requiring access to Google Cloud. This is useful for testing changes to the favicon extraction and domain processing logic.

Prerequisites

- Docker installed and running

- Merino repository cloned locally

Running the job locally

There is one Make command which starts the Docker container for GCS, queries 20 domains, and stops the container afterwards.

$ make nav-suggestions

This will:

- Use a sample of domains from custom_domains.py instead of querying BigQuery

- Process these domains through the same extraction pipeline used in production

- Upload favicons and domain metadata to the local GCS emulator

- Generate a local metrics report in the local_data directory

Examples

# With monitoring enabled

make nav-suggestions ENABLE_MONITORING=true

# With custom sample size

make nav-suggestions SAMPLE_SIZE=50

# With custom metrics directory

make nav-suggestions METRICS_DIR=./test_data

# With all options combined

make nav-suggestions SAMPLE_SIZE=30 METRICS_DIR=./test_data ENABLE_MONITORING=true

# Add any other options as needed

make nav-suggestions NAV_OPTS="--min-favicon-width=32"