Merino Jobs Operations

Dynamic Wikipedia Indexer Job

Merino currently builds the Elasticsearch indexing job that runs in Airflow.

Airflow uses the most recently built Merino image as the base image for the job.

The reasons to keep the job code close to the application code are:

- Data models can be shared more easily between the indexing job and the application, which makes data migrations simpler.

- All the logic regarding Merino functionality can be found in one place.

- It eliminates unintended differences in functionality caused by dependency mismatches.

If you are re-running the job to update the blocklist so that certain suggestions are not displayed, see the Wikipedia blocklist runbook.

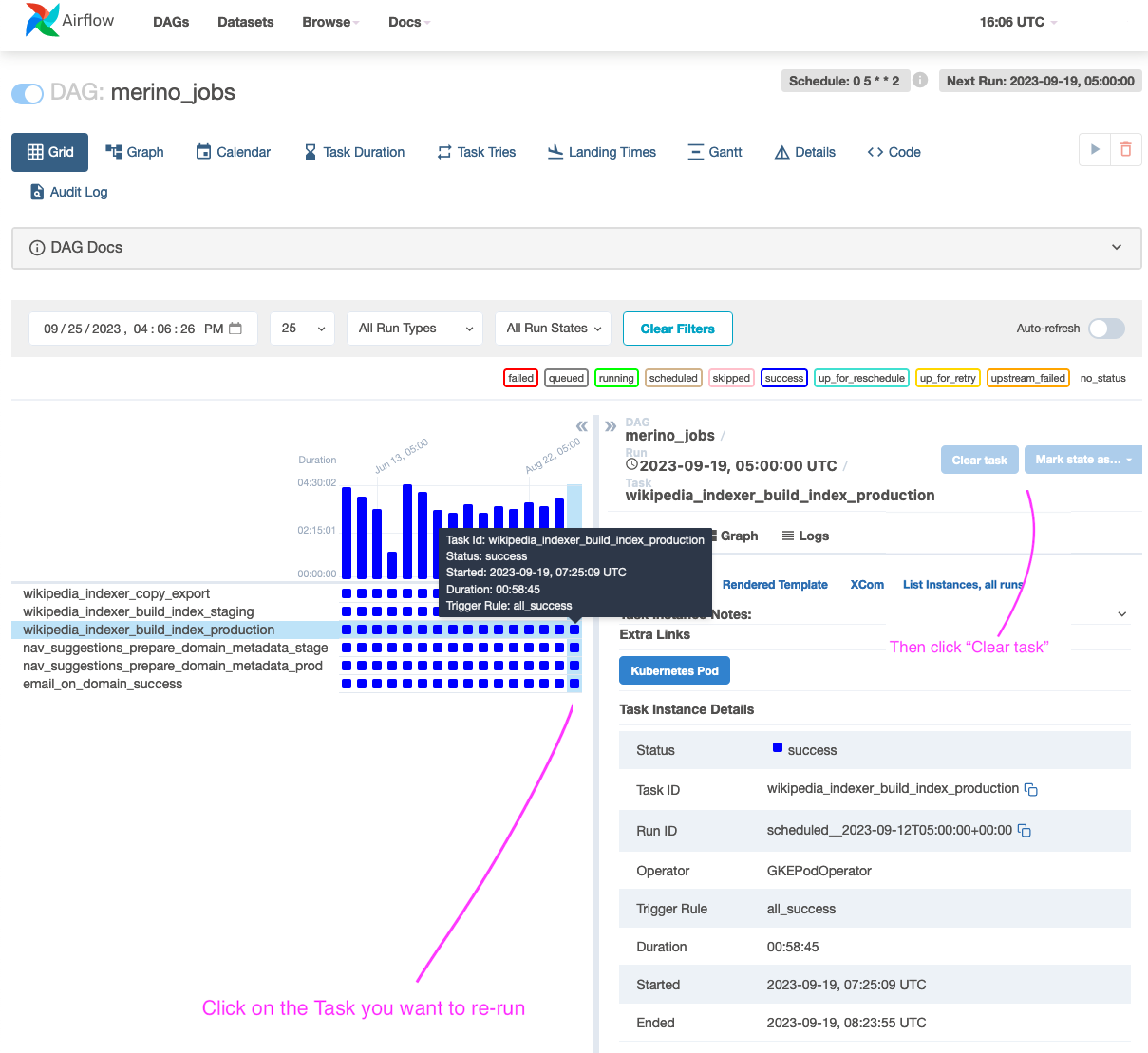

Running the job in Airflow

Normally, the job runs on a cron schedule as a DAG in Airflow. In some cases you may need to re-run the job manually from the Airflow dashboard.

Grid View Tab (Airflow UI)

- Visit the Airflow dashboard for

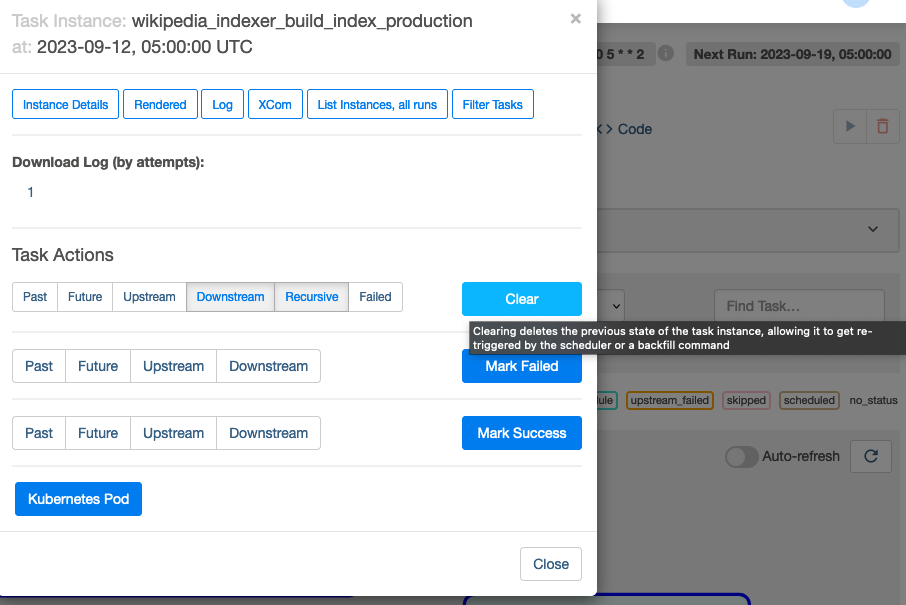

merino_jobs. - In the Grid View Tab, select the task you want to re-run.

- Click on 'Clear Task' and the executor will re-run the job.

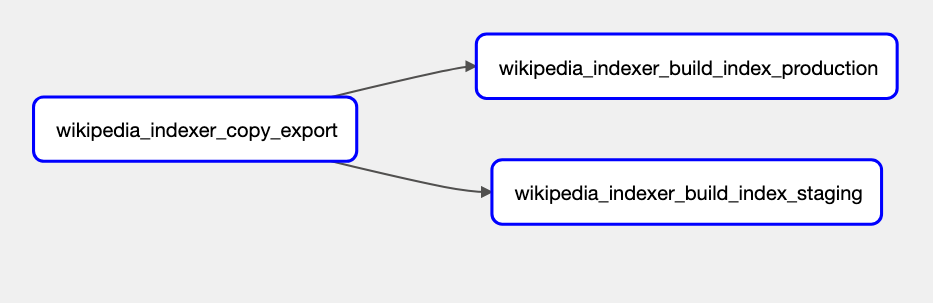
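If you have Airflow CLI access, clearing a task from the Grid View roughly corresponds to `airflow tasks clear`. The sketch below only builds the argument list so it can be reviewed before running. It assumes Airflow 2.x CLI flags (`-t` task regex, `-s`/`-e` logical-date bounds, `-y` to skip the confirmation prompt) and that you can run the CLI against the deployment hosting merino_jobs, which may not be true for telemetry-airflow. `build_clear_command` is a hypothetical helper.

```python
import re
import shlex
from datetime import date


def build_clear_command(dag_id: str, task_id: str, run_date: date) -> list[str]:
    """Build an Airflow 2.x `airflow tasks clear` command (hypothetical helper).

    Clearing a task instance lets the scheduler run it again, which is the
    effect of the 'Clear Task' button in the Grid View.
    """
    # Anchor the regex so only this exact task id matches, not similarly named tasks.
    task_regex = f"^{re.escape(task_id)}$"
    # run_date is the DAG run's logical date, not necessarily the day it executed.
    day = run_date.isoformat()
    return [
        "airflow", "tasks", "clear",
        "-t", task_regex,  # --task-regex
        "-s", day,         # --start-date
        "-e", day,         # --end-date
        "-y",              # --yes: skip the interactive confirmation
        dag_id,
    ]


if __name__ == "__main__":
    cmd = build_clear_command(
        "merino_jobs", "wikipedia_indexer_build_index_production", date(2024, 1, 15)
    )
    # Print a shell-quoted command for review instead of executing it.
    print(shlex.join(cmd))
```

Printing the command rather than running it keeps a human in the loop, which matches the manual nature of this runbook.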

Graph View Tab (Airflow UI) - Alternative

- Visit the Airflow dashboard for merino_jobs.
- From the Graph View tab, click on the wikipedia_indexer_build_index_production task.

- Click on 'Clear' and the job will re-run.
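The Graph View steps can also be approximated through Airflow 2.x's stable REST API endpoint `POST /api/v1/dags/{dag_id}/clearTaskInstances`. The sketch below builds, but does not send, such a request for the production task. The function name, base URL, and DAG run id are placeholders; authentication is omitted because it depends on how the Airflow deployment is configured; and whether the merino_jobs Airflow instance exposes this API to you is an assumption. The `dag_run_id` field is only accepted by recent Airflow 2.x releases.

```python
import json
import urllib.request


def build_clear_request(
    base_url: str, dag_id: str, task_id: str, dag_run_id: str, dry_run: bool = True
) -> urllib.request.Request:
    """Build (but do not send) a clearTaskInstances request (hypothetical helper).

    Authentication headers are intentionally left out; add whatever your
    Airflow deployment requires before sending.
    """
    body = {
        "dry_run": dry_run,         # True only lists what would be cleared
        "task_ids": [task_id],
        "dag_run_id": dag_run_id,   # restrict clearing to a single DAG run
        "only_failed": False,
        "reset_dag_runs": True,     # set the DAG run back to running
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/clearTaskInstances",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_clear_request(
        "https://airflow.example.com",  # placeholder host
        "merino_jobs",
        "wikipedia_indexer_build_index_production",
        "scheduled__2024-01-15T00:00:00+00:00",  # placeholder run id
    )
    print(req.get_method(), req.full_url)
```

Defaulting to `dry_run=True` means a first call only previews the affected task instances; pass `dry_run=False` once the preview looks right.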

Note: You can also re-run the stage job, but its changes won't be reflected in production. If the job failed, re-run it in stage first to verify that the error is fixed, then re-run it in production.
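The stage-before-production order from the note can be expressed as a small gate. This is a hypothetical sketch: `rerun_stage_then_prod` is not part of Merino or Airflow, the stage task id is a guess (check the merino_jobs DAG for the real name), and `rerun_and_wait` stands in for whatever mechanism you use to clear a task and wait for it to finish.

```python
from typing import Callable

# Hypothetical: the stage task id is assumed; confirm it in the merino_jobs DAG.
STAGE_TASK = "wikipedia_indexer_build_index_stage"
PROD_TASK = "wikipedia_indexer_build_index_production"


def rerun_stage_then_prod(rerun_and_wait: Callable[[str], bool]) -> list[str]:
    """Re-run stage first and re-run production only if stage succeeded.

    `rerun_and_wait` is supplied by the caller: it clears the given task,
    waits for it to finish, and returns True on success.
    Returns the task ids that were re-run, in order.
    """
    attempted = [STAGE_TASK]
    if not rerun_and_wait(STAGE_TASK):
        # The fix did not hold in stage, so production is left untouched.
        return attempted
    attempted.append(PROD_TASK)
    if not rerun_and_wait(PROD_TASK):
        raise RuntimeError(f"{PROD_TASK} failed even though {STAGE_TASK} succeeded")
    return attempted
```

Returning early when stage fails, rather than raising, reflects that a failed stage run is an expected outcome of verification, while a production failure after a passing stage run is unexpected and worth surfacing loudly.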

See Airflow's documentation on re-running DAGs for more information and implementation details.

To see the code for the merino_jobs DAG, visit the telemetry-airflow repo. The DAG source is also available in the 'Code' tab of the Airflow console.

To see the Wikipedia Indexer code that is run when the job is invoked, visit Merino jobs/wikipedia_indexer.