Syncstorage-rs

Mozilla Sync Storage built with Rust. Our documentation is generated using mdBook and published to GitHub Pages.

- System Requirements

- Local Setup

- Logging

- Tests

- Creating Releases

- Troubleshooting

- Related Documentation

System Requirements

- cmake (>= 3.5 and < 3.30)

- gcc

- golang

- libcurl4-openssl-dev

- libssl-dev

- make

- pkg-config

- Rust stable

- Python 3.9+

- MySQL 8.0 (or compatible)

- libmysqlclient (brew install mysql on macOS, apt install libmysqlclient-dev on Ubuntu, apt install libmariadb-dev-compat on Debian)

Depending on your OS, you may also need to install libgrpcdev and protobuf-compiler-grpc. Note: if the code compiles cleanly but generates a Segmentation Fault within Sentry init, you are probably missing libcurl4-openssl-dev.

Local Setup

- Follow the instructions below to use either MySQL or Spanner as your DB.

- Now run cp config/local.example.toml config/local.toml. Open config/local.toml and make sure you have the desired settings configured. For a complete list of available configuration options, check out docs/config.md.

- To start a local server in debug mode, run either make run_mysql if using MySQL, or make run_spanner if using Spanner. This starts the server in debug mode, using your new local.toml file for config options. Alternatively, simply run cargo run with your own config options provided as env vars.

- Visit http://localhost:8000/__heartbeat__ to make sure the server is running.
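For a scripted version of that last check, here is a minimal std-only Rust sketch. It assumes the server speaks plain HTTP on localhost:8000 and treats a 200 status on /__heartbeat__ as healthy; heartbeat_request, is_ok_status and check_heartbeat are hypothetical helpers, not part of syncstorage-rs.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;
use std::time::Duration;

/// Build a minimal HTTP/1.1 GET request for the heartbeat endpoint.
/// `Connection: close` asks the server to close the socket after replying.
fn heartbeat_request(host: &str) -> String {
    format!(
        "GET /__heartbeat__ HTTP/1.1\r\nHost: {}\r\nConnection: close\r\n\r\n",
        host
    )
}

/// Return true if the status line of a raw HTTP response reports 200.
fn is_ok_status(response: &str) -> bool {
    response
        .lines()
        .next()
        .and_then(|status_line| status_line.split_whitespace().nth(1))
        == Some("200")
}

/// Connect to `addr` (e.g. "localhost:8000"), send the request, and check the status.
fn check_heartbeat(addr: &str) -> std::io::Result<bool> {
    let mut stream = TcpStream::connect(addr)?;
    stream.set_read_timeout(Some(Duration::from_secs(5)))?;
    stream.write_all(heartbeat_request(addr).as_bytes())?;
    // Read until the server closes the connection.
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(is_ok_status(&response))
}
```

Calling check_heartbeat("localhost:8000") returns Ok(true) when the server answers with 200, and an I/O error if nothing is listening on that port.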

MySQL

Durable sync needs only a valid MySQL DSN to set up connections to a MySQL database. The database can be local and is usually specified with a DSN like:

mysql://_user_:_password_@_host_/_database_
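To make the parts of that DSN concrete, the following std-only Rust sketch splits one apart. It is illustrative only: it assumes the simple form shown above (optional password, a host that may carry a :port which is kept as-is, no query string or percent-encoding), and MysqlDsn and parse_mysql_dsn are hypothetical names rather than syncstorage-rs APIs.

```rust
/// Components of a DSN of the form mysql://user[:password]@host/database.
#[derive(Debug, PartialEq)]
struct MysqlDsn {
    user: String,
    password: Option<String>,
    host: String,
    database: String,
}

fn parse_mysql_dsn(dsn: &str) -> Option<MysqlDsn> {
    // Require the mysql:// scheme.
    let rest = dsn.strip_prefix("mysql://")?;
    // Credentials end at the last '@'.
    let (credentials, location) = rest.rsplit_once('@')?;
    // The database name follows the first '/' after the host.
    let (host, database) = location.split_once('/')?;
    // The password is optional, e.g. mysql://root@127.0.0.1/syncstorage.
    let (user, password) = match credentials.split_once(':') {
        Some((user, password)) => (user, Some(password.to_string())),
        None => (credentials, None),
    };
    if user.is_empty() || host.is_empty() || database.is_empty() {
        return None;
    }
    Some(MysqlDsn {
        user: user.to_string(),
        password,
        host: host.to_string(),
        database: database.to_string(),
    })
}
```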

To set up a fresh MySQL DB and user:

- First, make sure that you have a MySQL server running. To do that, run:

mysqld

- Then, run the following to launch a mysql shell:

mysql -u root

- Finally, run each of the following SQL statements:

CREATE USER "sample_user"@"localhost" IDENTIFIED BY "sample_password";

CREATE DATABASE syncstorage_rs;

CREATE DATABASE tokenserver_rs;

GRANT ALL PRIVILEGES on syncstorage_rs.* to sample_user@localhost;

GRANT ALL PRIVILEGES on tokenserver_rs.* to sample_user@localhost;

Note that if you are running MySQL with Docker and encounter a socket connection error, change the host in the MySQL DSN from localhost to 127.0.0.1 to use a TCP connection.

Spanner

Authenticating via OAuth

The correct way to authenticate with Spanner is by generating an OAuth token and pointing your local application server to the token. In order for this to work, your Google Cloud account must have the correct permissions; contact the Ops team to ensure the correct permissions are added to your account.

First, install the Google Cloud command-line interface by following the instructions for your operating system here. Next, run the following to log in with your Google account (this should be the Google account associated with your Mozilla LDAP credentials):

gcloud auth application-default login

The above command will prompt you to visit a webpage in your browser to complete the login process. Once completed, ensure that a file called application_default_credentials.json has been created in the appropriate directory (on Linux, this directory is $HOME/.config/gcloud/). The Google Cloud SDK knows to check this location for your credentials, so no further configuration is needed.

Key Revocation

Accidents happen, and you may need to revoke the access of a set of credentials if they have been publicly leaked. To do this, run:

gcloud auth application-default revoke

This will revoke the access of the credentials currently stored in the application_default_credentials.json file. If the file in that location does not contain the leaked credentials, you will need to copy the file containing the leaked credentials to that location and re-run the above command. You can ensure that the leaked credentials are no longer active by attempting to connect to Spanner using the credentials. If access has been revoked, your application server should print an error saying that the token has expired or has been revoked.

Authenticating via Service Account

An alternative to authentication via application default credentials is authentication via a service account. Note that this method of authentication is not recommended. Service accounts are intended to be used by other applications or virtual machines and not people. See this article for more information.

Your system administrator will be able to tell you which service account keys have access to the Spanner instance to which you are trying to connect. Once you are given the email identifier of an active key, log into the Google Cloud Console Service Accounts page. Be sure to select the correct project.

- Locate the email identifier of the access key and pick the vertical dot menu at the far right of the row.

- Select “Create Key” from the pop-up menu.

- Select “JSON” from the Dialog Box.

A proper key file will be downloaded to your local directory. It’s important to safeguard that key file. For this example, we’re going to name the file service-account.json.

The proper key file is in JSON format. An example file is provided below, with private information replaced by “...”

{

"type": "service_account",

"project_id": "...",

"private_key_id": "...",

"private_key": "...",

"client_email": "...",

"client_id": "...",

"auth_uri": "https://accounts.google.com/o/oauth2/auth",

"token_uri": "https://oauth2.googleapis.com/token",

"auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

"client_x509_cert_url": "..."

}

Note that the file must be named exactly service-account.json for .gitignore to ignore it.

Connecting to Spanner

To point to a GCP-hosted Spanner instance from your local machine, follow these steps:

- Authenticate via either of the two methods outlined above.

- Open local.toml and replace database_url with a link to your Spanner instance.

- Open the Makefile and ensure you’ve correctly set your PATH_TO_GRPC_CERT.

- Run make run_spanner.

- Visit http://localhost:8000/__heartbeat__ to make sure the server is running.

Note that, unlike MySQL, Spanner has no automatic migration facility. Currently, the Spanner schema must be edited by hand.

Emulator

Google supports an in-memory Spanner emulator, which can run on your local machine for development purposes. You can install the emulator via the gcloud CLI or Docker by following the instructions here. Once the emulator is running, you’ll need to create a new instance and a new database.

Quick Setup Using prepare-spanner.sh

The easiest way to set up a Spanner emulator database is to use the prepare-spanner.sh script:

SYNC_SYNCSTORAGE__SPANNER_EMULATOR_HOST=localhost:9020 ./scripts/prepare-spanner.sh

This script will automatically:

- Create a test instance (test-instance) on a test project (test-project)

- Create a test database (test-database) with the schema from schema.ddl

- Apply all DDL statements to set up the database structure

The script looks for schema.ddl in either the current directory or in syncstorage-spanner/src/. Make sure the SYNC_SYNCSTORAGE__SPANNER_EMULATOR_HOST environment variable points to your emulator’s REST API endpoint (typically localhost:9020).

After running the script, make sure that the database_url config variable in your local.toml file reflects the created database (i.e. spanner://projects/test-project/instances/test-instance/databases/test-database).
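Since the URL is just the three IDs slotted into a fixed pattern, a one-function Rust sketch shows its shape. spanner_database_url is a hypothetical helper, and the IDs in the usage below are the defaults created by prepare-spanner.sh.

```rust
/// Build a Spanner database URL in the form used for database_url:
/// spanner://projects/<project>/instances/<instance>/databases/<database>
fn spanner_database_url(project_id: &str, instance_id: &str, database_id: &str) -> String {
    format!(
        "spanner://projects/{}/instances/{}/databases/{}",
        project_id, instance_id, database_id
    )
}
```

For example, spanner_database_url("test-project", "test-instance", "test-database") yields the URL given above.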

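To run an application server that points to the local Spanner emulator (note that the server connects to the emulator’s gRPC endpoint, typically localhost:9010, rather than the REST endpoint used by the script):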

SYNC_SYNCSTORAGE__SPANNER_EMULATOR_HOST=localhost:9010 make run_spanner

Manual Setup Using curl

If you prefer to manually create the instance and database, or need custom project/instance/database names, you can use the REST API directly. The Spanner emulator exposes a REST API on port 9020. To create an instance, use curl:

curl --request POST \

"localhost:9020/v1/projects/$PROJECT_ID/instances" \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

--data "{\"instance\":{\"config\":\"emulator-test-config\",\"nodeCount\":1,\"displayName\":\"Test Instance\"},\"instanceId\":\"$INSTANCE_ID\"}"
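The --data argument is a small JSON document with the instance ID substituted in. The following Rust sketch builds the same URL and body; create_instance_url and create_instance_body are hypothetical helpers, and rather than implementing JSON escaping, the sketch simply rejects IDs containing quotes, backslashes or control characters. It writes http:// explicitly, which is the protocol curl assumes for the scheme-less URL above.

```rust
/// Build the emulator REST URL for creating an instance in `project_id`.
fn create_instance_url(rest_host: &str, project_id: &str) -> String {
    format!("http://{}/v1/projects/{}/instances", rest_host, project_id)
}

/// Build the JSON body sent with the instance-creation request.
/// Returns None for IDs that would need JSON escaping.
fn create_instance_body(instance_id: &str) -> Option<String> {
    if instance_id.is_empty()
        || instance_id.chars().any(|c| c == '"' || c == '\\' || c.is_control())
    {
        return None;
    }
    // `{{` and `}}` are literal braces in a format string.
    Some(format!(
        "{{\"instance\":{{\"config\":\"emulator-test-config\",\"nodeCount\":1,\"displayName\":\"Test Instance\"}},\"instanceId\":\"{}\"}}",
        instance_id
    ))
}
```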

Note that you may set PROJECT_ID and INSTANCE_ID to your liking. To create a new database on this instance, you’ll need to include information about the database schema. Since we don’t have migrations for Spanner, we keep an up-to-date schema in src/db/spanner/schema.ddl. The jq utility allows us to parse this file for use in the JSON body of an HTTP POST request:

DDL_STATEMENTS=$(

grep -v ^-- schema.ddl \

| sed -n 's/ \+/ /gp' \

| tr -d '\n' \

| sed 's/\(.*\);/\1/' \

| jq -R -s -c 'split(";")'

)

This command:

- Filters out SQL comments (lines starting with --)

- Normalizes whitespace

- Removes newlines to create a single line

- Removes the trailing semicolon from the concatenated string

- Splits the DDL statements back into an array using jq
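For readers who would rather not depend on grep, sed, tr and jq, here is a std-only Rust sketch that approximates the same transformation step by step. It is not byte-for-byte identical: sed -n ... p only prints lines where a substitution happened, so the pipeline drops lines that contain no spaces, whereas this sketch keeps them. Like the pipeline, it assumes semicolons appear only as statement terminators in schema.ddl. json_escape and ddl_to_json_array are hypothetical names.

```rust
/// Escape a string for inclusion inside a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Turn the contents of a .ddl file into a compact JSON array of statements.
fn ddl_to_json_array(ddl: &str) -> String {
    let mut joined = String::new();
    // Drop lines beginning with "--" (like `grep -v ^--`).
    for line in ddl.lines().filter(|l| !l.starts_with("--")) {
        // Collapse runs of spaces (like `sed 's/ \+/ /g'`) and append the
        // line with no separator (like `tr -d '\n'`).
        let mut prev_space = false;
        for c in line.chars() {
            if c == ' ' {
                if !prev_space {
                    joined.push(' ');
                }
                prev_space = true;
            } else {
                joined.push(c);
                prev_space = false;
            }
        }
    }
    // Remove the last semicolon (like `sed 's/\(.*\);/\1/'`).
    if let Some(idx) = joined.rfind(';') {
        joined.remove(idx);
    }
    // Split on ';' and emit a JSON array (like `jq -R -s -c 'split(";")'`).
    let items: Vec<String> = joined
        .split(';')
        .map(|stmt| format!("\"{}\"", json_escape(stmt)))
        .collect();
    format!("[{}]", items.join(","))
}
```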

Finally, to create the database:

curl -sS --request POST \

"localhost:9020/v1/projects/$PROJECT_ID/instances/$INSTANCE_ID/databases" \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

--data "{\"createStatement\":\"CREATE DATABASE \`$DATABASE_ID\`\",\"extraStatements\":$DDL_STATEMENTS}"
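As a Rust sketch of the same request body, validating the database ID up front also guarantees that it can be embedded in the JSON and in the backtick-quoted CREATE DATABASE statement without escaping. The helpers are hypothetical, and the ID rules encoded in is_valid_database_id are an assumption based on Cloud Spanner's naming rules, so check them against the current Spanner documentation.

```rust
/// Assumed Spanner database ID rules: 2 to 30 characters, starting with a
/// lowercase letter, containing only lowercase letters, digits, '_' or '-',
/// and not ending in '_' or '-'.
fn is_valid_database_id(id: &str) -> bool {
    let chars: Vec<char> = id.chars().collect();
    (2..=30).contains(&chars.len())
        && chars[0].is_ascii_lowercase()
        && chars
            .iter()
            .all(|c| c.is_ascii_lowercase() || c.is_ascii_digit() || *c == '_' || *c == '-')
        && !matches!(chars[chars.len() - 1], '_' | '-')
}

/// Build the JSON body for POST .../instances/<instance>/databases.
/// `ddl_json_array` must already be a JSON array of strings, such as $DDL_STATEMENTS.
fn create_database_body(database_id: &str, ddl_json_array: &str) -> Option<String> {
    if !is_valid_database_id(database_id) {
        return None;
    }
    Some(format!(
        "{{\"createStatement\":\"CREATE DATABASE `{}`\",\"extraStatements\":{}}}",
        database_id, ddl_json_array
    ))
}
```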

Note that, again, you may set DATABASE_ID to your liking. Make sure that the database_url config variable in your local.toml file reflects your choice of project name, instance name, and database name (i.e. it should be of the format spanner://projects/<your project ID here>/instances/<your instance ID here>/databases/<your database ID here>).

To run the application server that points to the local Spanner emulator:

SYNC_SYNCSTORAGE__SPANNER_EMULATOR_HOST=localhost:9010 make run_spanner

Running via Docker

This requires access to the Google Cloud Rust (raw) crate. Please note that, due to interdependencies, you will need to ensure that grpcio and protobuf match the versions used by google-cloud-rust-raw.

- Make sure you have Docker installed locally.

- Copy the contents of mozilla-rust-sdk into the top-level root directory here.

- Comment out the image value under syncserver in either docker-compose.mysql.yml or docker-compose.spanner.yml (depending on which database backend you want to run), and add this instead:

build:
  context: .

- If you are using MySQL, adjust the MySQL DB credentials in docker-compose.mysql.yml to match your local setup.

- Run make docker_start_mysql or make docker_start_spanner.

- You can verify it’s working by visiting localhost:8000/__heartbeat__.

Connecting to Firefox

This will walk you through the steps to connect this project to your local copy of Firefox.

- Follow the steps outlined above for running this project using MySQL or Spanner.

- In Firefox, go to about:config. Change identity.sync.tokenserver.uri to http://localhost:8000/1.0/sync/1.5.

- Restart Firefox. Now, try syncing. You should see new BSOs in your MySQL or Spanner instance.
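The preference value follows the Tokenserver route GET /1.0/{application}/{version} listed under Tokenserver Endpoints, with sync as the application and 1.5 as the version. A tiny Rust sketch of how the value is composed (tokenserver_uri is a hypothetical helper):

```rust
/// Compose a Tokenserver token URL: <base>/1.0/<application>/<version>.
fn tokenserver_uri(base: &str, application: &str, version: &str) -> String {
    // Trim a trailing slash so "http://localhost:8000/" also works.
    format!("{}/1.0/{}/{}", base.trim_end_matches('/'), application, version)
}
```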

Logging

Sentry:

- If you want to connect to the existing Sentry project for local development, log in to Sentry and go to the page with API keys. Copy the DSN value.

- Comment out the human_logs line in your config/local.toml file.

- You can force an error to appear in Sentry by adding a panic! into main.rs, just before the final Ok(()).

- Now, run SENTRY_DSN={INSERT_DSN_FROM_STEP_1_HERE} make run.

- You may need to stop the local server after it hits the panic! before errors will appear in Sentry.

RUST_LOG

We use env_logger: set the RUST_LOG env var.

The logging of non-Spanner SQL queries is supported in non-optimized builds via RUST_LOG=syncserver=debug.
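RUST_LOG takes a comma-separated list of directives, each a bare level (applied to every module), a module=level pair such as syncserver=debug, or a bare module path. The simplified Rust sketch below parses that shape only to illustrate the syntax; it is not env_logger's parser and ignores features such as its optional /regex filter. Directive and parse_rust_log are hypothetical names.

```rust
/// One RUST_LOG directive: an optional module path and an optional level.
#[derive(Debug, PartialEq)]
struct Directive {
    module: Option<String>,
    level: Option<String>,
}

const LEVELS: [&str; 6] = ["off", "error", "warn", "info", "debug", "trace"];

/// Parse a RUST_LOG-style string such as "info,syncserver=debug".
fn parse_rust_log(spec: &str) -> Vec<Directive> {
    spec.split(',')
        .map(str::trim)
        .filter(|d| !d.is_empty())
        .map(|d| match d.split_once('=') {
            // "module=level"
            Some((module, level)) => Directive {
                module: Some(module.to_string()),
                level: Some(level.to_lowercase()),
            },
            // A bare level applies to every module.
            None if LEVELS.contains(&d.to_lowercase().as_str()) => Directive {
                module: None,
                level: Some(d.to_lowercase()),
            },
            // A bare module path enables all levels for that module.
            None => Directive {
                module: Some(d.to_string()),
                level: None,
            },
        })
        .collect()
}
```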

Tests

Unit tests

You’ll need nextest and llvm-cov installed for full unit testing and test coverage.

$ cargo install cargo-nextest --locked

$ cargo install cargo-llvm-cov --locked

- make test - Runs all tests

- make test_with_coverage - Uses llvm-cov to run tests and generate source-based code coverage

If you need to override SYNC_SYNCSTORAGE__DATABASE_URL or SYNC_TOKENSERVER__DATABASE_URL variables, you can modify them in the Makefile or by setting them in your shell:

$ echo 'export SYNC_SYNCSTORAGE__DATABASE_URL="mysql://sample_user:sample_password@localhost/syncstorage_rs"' >> ~/.zshrc

$ echo 'export SYNC_TOKENSERVER__DATABASE_URL="mysql://sample_user:sample_password@localhost/tokenserver_rs"' >> ~/.zshrc

Debugging unit test state

In some cases, it is useful to inspect the mysql state of a failed test. By default, we use the diesel test_transaction functionality to ensure test data is not committed to the database. Therefore, there is an environment variable which can be used to turn off test_transaction.

SYNC_SYNCSTORAGE__DATABASE_USE_TEST_TRANSACTIONS=false make test ARGS="[testname]"

Note that you will almost certainly want to pass a single test name. When running the entire test suite, data from previous tests will cause future tests to fail.

To reset the database state between test runs, drop and recreate the database in the mysql client:

drop database syncstorage_rs; create database syncstorage_rs; use syncstorage_rs;

End-to-End tests

Functional tests live in server-syncstorage and can be run against a local server, e.g.:

- If you haven’t already followed the instructions here to get all the dependencies for the server-syncstorage repo, you should start there.

- Install (Python) server-syncstorage:

$ git clone https://github.com/mozilla-services/server-syncstorage/

$ cd server-syncstorage

$ make build

- Run an instance of syncstorage-rs (cargo run in this repo).

- To run all tests:

$ ./local/bin/python syncstorage/tests/functional/test_storage.py http://localhost:8000#<SOMESECRET>

- Individual tests can be specified via the SYNC_TEST_PREFIX env var:

$ SYNC_TEST_PREFIX=test_get_collection \

./local/bin/python syncstorage/tests/functional/test_storage.py http://localhost:8000#<SOMESECRET>

Creating Releases

- Switch to the master branch of syncstorage-rs.

- git pull to ensure that the local copy is up-to-date.

- git pull origin master to make sure that you’ve incorporated any changes to the master branch.

- git diff origin/master to ensure that there are no local staged or uncommitted changes.

- Bump the version number in Cargo.toml (this new version number will be designated as <version> in this checklist).

- Create a git branch for the new version: git checkout -b release/<version>

- cargo build --release - Build in release mode (using the release profile).

- clog -C CHANGELOG.md - Generate release notes. We’re using clog for release notes. Add a -M, -m or -p flag to denote a major, minor or patch version, respectively, e.g. clog -C CHANGELOG.md -p.

- Review the CHANGELOG.md file and ensure all relevant changes since the last tag are included.

- Create a new release in Sentry: VERSION={release-version-here} bash scripts/sentry-release.sh. If you’re doing this for the first time, check out the tips below for troubleshooting sentry-cli access.

- git commit -am "chore: tag <version>" to commit the new version and changes.

- git tag -s -m "chore: tag <version>" <version> to create a signed tag of the current HEAD commit for release.

- git push origin release/<version> to push the commits to a new origin release branch.

- git push --tags origin release/<version> to push the tags to the release branch.

- Submit a Pull Request (PR) on GitHub to merge the release branch to master.

- Go to the GitHub release page; you should see the new tag with no release information.

- Click the Draft a new release button.

- Enter the <version> number for Tag version.

- Copy and paste the most recent change set from CHANGELOG.md into the release description, omitting the top 2 lines (the name and version).

- Once your PR merges, click [Publish Release] on the GitHub release page.
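The Cargo.toml version bump in the checklist follows the usual major/minor/patch convention. As an illustration only, here is a Rust sketch of the three kinds of bump; Bump and bump_version are hypothetical, and the sketch handles plain MAJOR.MINOR.PATCH strings only, returning None for pre-release or build suffixes.

```rust
#[derive(Debug, Clone, Copy)]
enum Bump {
    Major,
    Minor,
    Patch,
}

/// Bump a plain "MAJOR.MINOR.PATCH" version string.
/// Returns None for anything else (e.g. "1.2" or "1.2.3-rc1").
fn bump_version(version: &str, bump: Bump) -> Option<String> {
    let parts: Vec<u64> = version
        .split('.')
        .map(|p| p.parse::<u64>().ok())
        .collect::<Option<Vec<u64>>>()?;
    if parts.len() != 3 {
        return None;
    }
    let (major, minor, patch) = (parts[0], parts[1], parts[2]);
    let next = match bump {
        // A major bump resets minor and patch.
        Bump::Major => (major + 1, 0, 0),
        // A minor bump resets patch.
        Bump::Minor => (major, minor + 1, 0),
        Bump::Patch => (major, minor, patch + 1),
    };
    Some(format!("{}.{}.{}", next.0, next.1, next.2))
}
```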

Sync server is automatically deployed to STAGE; however, QA may need to be notified if testing is required. Once QA signs off, a bug should be filed to promote the server to PRODUCTION.

Troubleshooting

- rm Cargo.lock; cargo clean; - Try this if you’re having problems compiling.

- Some versions of OpenSSL 1.1.1 can conflict with grpcio’s built-in BoringSSL. These errors can cause syncstorage to fail to run or compile. If you see a problem related to libssl, you may need to specify the cargo option --features grpcio/openssl to force grpcio to use OpenSSL.

Sentry

- If you’re having trouble working with Sentry to create releases, try authenticating using their self-hosted server option that’s outlined here, e.g. sentry-cli --url https://selfhosted.url.com/ login. It’s also recommended to create a .sentryclirc config file. See this example for the config values you’ll need.

Related Documentation

Configuration

Rust uses environment variables for a number of configuration options. Some of these include:

| variable | value | description |

|---|---|---|

| RUST_LOG | debug, info, warn, error | minimum Rust error logging level |

| RUST_TEST_THREADS | 1 | maximum number of concurrent threads for testing. |

In addition, Sync server configuration options can either be specified as environment variables (prefixed with SYNC_*) or in a configuration file using the --config option.

For example the following are equivalent:

$ SYNC_HOST=0.0.0.0 SYNC_MASTER_SECRET="SuperSikkr3t" SYNC_SYNCSTORAGE__DATABASE_URL=mysql://scott:tiger@localhost/syncstorage cargo run

$ cat syncstorage.local.toml

host = "0.0.0.0"

master_secret = "SuperSikkr3t"

[syncstorage]

database_url = "mysql://scott:tiger@localhost/syncstorage"

$ cargo run -- --config syncstorage.local.toml

Options can be mixed between environment variables and configuration. Environment variables have higher precedence.
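The two forms in the example above line up in a predictable way: drop the SYNC_ prefix, lower-case the rest, and read a double underscore as a section separator, so SYNC_SYNCSTORAGE__DATABASE_URL corresponds to database_url under [syncstorage]. The std-only Rust sketch below models that mapping and the precedence rule with a flat map of dotted keys. It is an illustration inferred from the examples in this document, not the configuration loader syncstorage-rs actually uses; env_var_to_key and merge_settings are hypothetical names.

```rust
use std::collections::HashMap;

/// Map an env var name to a dotted config key, e.g.
/// SYNC_SYNCSTORAGE__DATABASE_URL -> "syncstorage.database_url".
/// Returns None for variables without the SYNC_ prefix.
fn env_var_to_key(name: &str) -> Option<String> {
    let rest = name.strip_prefix("SYNC_")?;
    Some(rest.to_lowercase().replace("__", "."))
}

/// Merge config-file settings with SYNC_* environment variables;
/// environment values win on conflicts.
fn merge_settings(
    file: &HashMap<String, String>,
    env: &[(&str, &str)],
) -> HashMap<String, String> {
    let mut merged = file.clone();
    for (name, value) in env {
        if let Some(key) = env_var_to_key(name) {
            merged.insert(key, value.to_string());
        }
    }
    merged
}
```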

Options

The following configuration options are available.

Server Settings

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_HOST | 127.0.0.1 | Host address to bind the server to |

| SYNC_PORT | 8000 | Server port to bind to |

| SYNC_MASTER_SECRET | None, required | Secret used to derive auth secrets |

| SYNC_ENVIRONMENT | dev | Environment name (“dev”, “stage”, “prod”) |

| SYNC_HUMAN_LOGS | false | Enable human-readable logs |

| SYNC_ACTIX_KEEP_ALIVE | None | HTTP keep-alive header value in seconds |

| SYNC_WORKER_MAX_BLOCKING_THREADS | 512 | The maximum number of blocking threads in the worker threadpool. This threadpool is used by Actix-web to handle blocking operations. |

CORS

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_CORS_ALLOWED_ORIGIN | * | Allowed origins for CORS requests |

| SYNC_CORS_MAX_AGE | 1728000 | CORS preflight cache seconds (20 days) |

| SYNC_CORS_ALLOWED_METHODS | [“DELETE”, “GET”, “POST”, “PUT”] | Allowed methods |

Syncstorage Database

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_SYNCSTORAGE__DATABASE_URL | mysql://root@127.0.0.1/syncstorage | Database connection URL |

| SYNC_SYNCSTORAGE__DATABASE_POOL_MAX_SIZE | 10 | Max database connections |

| SYNC_SYNCSTORAGE__DATABASE_POOL_CONNECTION_TIMEOUT | 30 | Pool timeout in seconds |

| SYNC_SYNCSTORAGE__DATABASE_POOL_CONNECTION_LIFESPAN | None | Max connection age in seconds |

| SYNC_SYNCSTORAGE__DATABASE_POOL_CONNECTION_MAX_IDLE | None | Max idle time in seconds |

| SYNC_SYNCSTORAGE__DATABASE_POOL_SWEEPER_TASK_INTERVAL | 30 | How often, in seconds, a background task runs to evict idle database connections (Spanner only) |

| SYNC_SYNCSTORAGE__DATABASE_SPANNER_ROUTE_TO_LEADER | false | Send leader-aware headers to Spanner |

| SYNC_SYNCSTORAGE__SPANNER_EMULATOR_HOST | None | Spanner emulator host (e.g., localhost:9010) |

Syncstorage Limits

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_SYNCSTORAGE__LIMITS__MAX_POST_BYTES | 2,621,440 | Max BSO payload size per request |

| SYNC_SYNCSTORAGE__LIMITS__MAX_POST_RECORDS | 100 | Max BSO count per request |

| SYNC_SYNCSTORAGE__LIMITS__MAX_RECORD_PAYLOAD_BYTES | 2,621,440 | Max individual BSO payload size |

| SYNC_SYNCSTORAGE__LIMITS__MAX_REQUEST_BYTES | 2,625,536 | Max Content-Length for requests |

| SYNC_SYNCSTORAGE__LIMITS__MAX_TOTAL_BYTES | 262,144,000 | Max BSO payload size per batch |

| SYNC_SYNCSTORAGE__LIMITS__MAX_TOTAL_RECORDS | 10,000 | Max BSO count per batch |

| SYNC_SYNCSTORAGE__LIMITS__MAX_QUOTA_LIMIT | 2,147,483,648 | Max storage quota per user (2 GiB) |
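Several of these defaults are round binary sizes, which is easier to see when they are written as arithmetic. The Rust constants below restate the table's values; the names mirror the env vars but are illustrative, not identifiers from the codebase.

```rust
const KIB: u64 = 1024;
const MIB: u64 = 1024 * KIB;
const GIB: u64 = 1024 * MIB;

// 2.5 MiB per POST request and per individual record payload.
const MAX_POST_BYTES: u64 = 5 * MIB / 2; // 2,621,440
const MAX_RECORD_PAYLOAD_BYTES: u64 = MAX_POST_BYTES;
// The Content-Length limit is exactly 4 KiB larger than MAX_POST_BYTES.
const MAX_REQUEST_BYTES: u64 = MAX_POST_BYTES + 4 * KIB; // 2,625,536
// 250 MiB per batch.
const MAX_TOTAL_BYTES: u64 = 250 * MIB; // 262,144,000
// 2 GiB per-user quota.
const MAX_QUOTA_LIMIT: u64 = 2 * GIB; // 2,147,483,648
```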

Syncstorage Features

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_SYNCSTORAGE__ENABLED | true | Enable syncstorage service |

| SYNC_SYNCSTORAGE__ENABLE_QUOTA | false | Enable quota tracking (Spanner only) |

| SYNC_SYNCSTORAGE__ENFORCE_QUOTA | false | Enforce quota limits (Spanner only) |

| SYNC_SYNCSTORAGE__GLEAN_ENABLED | true | Enable Glean telemetry |

| SYNC_SYNCSTORAGE__STATSD_LABEL | syncstorage | StatsD metrics label prefix |

Tokenserver Database

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_TOKENSERVER__DATABASE_URL | mysql://root@127.0.0.1/tokenserver | Tokenserver database URL |

| SYNC_TOKENSERVER__DATABASE_POOL_MAX_SIZE | 10 | Max tokenserver DB connections |

| SYNC_TOKENSERVER__DATABASE_POOL_CONNECTION_TIMEOUT | 30 | Pool timeout in seconds |

Tokenserver Features

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_TOKENSERVER__ENABLED | false | Enable tokenserver service |

| SYNC_TOKENSERVER__RUN_MIGRATIONS | false | Run DB migrations on startup |

| SYNC_TOKENSERVER__TOKEN_DURATION | 3600 | Token TTL (1 hour) |

Tokenserver+FxA Integration

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_TOKENSERVER__FXA_EMAIL_DOMAIN | api-accounts.stage.mozaws.net | FxA email domain |

| SYNC_TOKENSERVER__FXA_OAUTH_SERVER_URL | https://oauth.stage.mozaws.net | FxA OAuth server URL |

| SYNC_TOKENSERVER__FXA_OAUTH_REQUEST_TIMEOUT | 10 | OAuth request timeout in seconds |

| SYNC_TOKENSERVER__FXA_METRICS_HASH_SECRET | secret | Secret for hashing metrics to maintain anonymity |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__KTY | None | Primary JWK key type (e.g., “RSA”) |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__ALG | None | Primary JWK algorithm (e.g., “RS256”) |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__KID | None | Primary JWK key ID |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__FXA_CREATED_AT | None | Primary JWK creation timestamp |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__USE | None | Primary JWK use (e.g., “sig”) |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__N | None | Primary JWK modulus (RSA public key component) |

| SYNC_TOKENSERVER__FXA_OAUTH_PRIMARY_JWK__E | None | Primary JWK exponent (RSA public key component) |

| SYNC_TOKENSERVER__FXA_OAUTH_SECONDARY_JWK__* | None | Secondary JWK (same structure as primary) |

StatsD Metrics

| Env Var | Default Value | Description |

|---|---|---|

| SYNC_STATSD_HOST | localhost | StatsD server hostname |

| SYNC_STATSD_PORT | 8125 | StatsD server port |

| SYNC_INCLUDE_HOSTNAME_TAG | false | Include hostname in metrics tags |

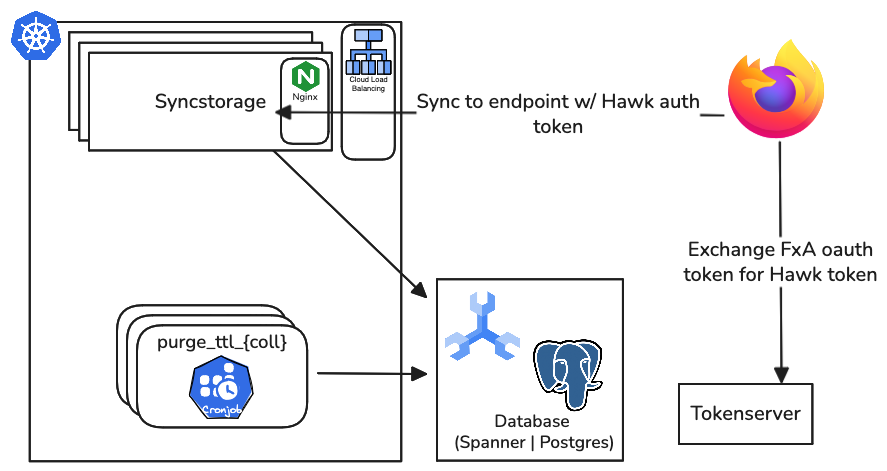

Architecture

A high-level architectural overview of the Sync Service, which includes Sync and Tokenserver.

Syncstorage

Below is an illustration of a highly-simplified Sync flow:

graph LR

SignIn["Sign in to FxA"]

FxA[("FxA")]

OAuth["Sync client gets OAuth token"]

PresentToken["OAuth Token presented to Tokenserver"]

Tokenserver[("Tokenserver")]

AssignNode["Tokenserver assigns storage node"]

InfoCollections["info/collections: Do we even need to sync?"]

MetaGlobal["meta/global: Do we need to start over?"]

CryptoKeys["crypto/keys: Get keys"]

GetStorage["GET storage/<collection>: Fetch new data"]

PostStorage["POST storage/<collection>: Upload new data"]

%% Main flow

SignIn --> FxA

FxA --> OAuth

OAuth --> PresentToken

PresentToken --> Tokenserver

Tokenserver --> AssignNode

AssignNode --> InfoCollections

%% Decision / metadata path

InfoCollections --> MetaGlobal

MetaGlobal --> CryptoKeys

%% Sync operations

CryptoKeys --> GetStorage

CryptoKeys --> PostStorage

Storage-Client Relationship

This high-level diagram illustrates the standard Sync collections and their relationships.

graph TD

%% ===== Storage =====

DB[("DB")]

BookmarksMirror[("Bookmarks Mirror")]

LoginStorage[("Login Manager Storage")]

AutofillStorage[("Form Autofill Storage")]

XPIDB[("XPI Database")]

CredentialStorage[("Credential Storage")]

%% ===== Client components =====

Places["Places"]

LoginManager["Login Manager"]

TabbedBrowser["Tabbed Browser"]

AddonManager["Add-on Manager"]

ExtensionBridge["Extension Storage Bridge"]

%% ===== Sync engines =====

Bookmarks["Bookmarks"]

History["History"]

Passwords["Passwords"]

CreditCards["Credit cards"]

Addresses["Addresses"]

OpenTabs["Open tabs"]

Addons["Add-ons"]

Clients["Clients"]

%% ===== Sync internals =====

subgraph Sync["Sync"]

HTTPClient["HTTP Client"]

TokenClient["Tokenserver Client"]

end

%% ===== Storage =====

SyncStorage[("Sync Storage Server")]

TokenServer[("Tokenserver")]

PushService["Push Service"]

subgraph FirefoxAccounts["Firefox Accounts Service"]

PushIntegration["Push Integration"]

FxAHTTP["HTTP Clients"]

end

subgraph Accounts

MozillaPush[("Mozilla Push Server")]

FxAAuth[("FxA Auth Server")]

FxAOAuth[("FxA OAuth Server")]

FxAProfile[("FxA Profile Server")]

end

%% ===== Relationships =====

DB --> Places

BookmarksMirror --> Places

Places --> Bookmarks

Places --> History

LoginStorage <--> LoginManager

AutofillStorage --> CreditCards

AutofillStorage --> Addresses

TabbedBrowser --> OpenTabs

AddonManager --> Addons

XPIDB --> AddonManager

ExtensionBridge --> Clients

%% ===== Sync engine / Collections =====

Bookmarks --> Sync

History --> Sync

Passwords --> Sync

CreditCards --> Sync

Addresses --> Sync

OpenTabs --> Sync

Addons --> Sync

Clients --> Sync

HTTPClient --> Sync

TokenClient <--> TokenServer

SyncStorage <--> HTTPClient

%% ===== Push & Accounts =====

FirefoxAccounts --> PushIntegration

FirefoxAccounts --> FxAHTTP

FxAAuth <--> MozillaPush

PushIntegration --> PushService

FxAHTTP --> FxAAuth

FxAHTTP --> FxAOAuth

FxAHTTP --> FxAProfile

CredentialStorage --> FirefoxAccounts

Tokenserver

The intent of this file is inspired by a very sensible blog post many developers are familiar with regarding the necessity to illustrate systems with clarity. Given Sync’s complexity and interrelationships with other architectures, this

Open API Documentation

OpenAPI / Swagger UI

This project uses utoipa and utoipa-swagger-ui to provide interactive API documentation.

Accessing and Working With the Documentation

It is suggested to use the stage instance of Sync and/or Tokenserver when playing with the API, though you may also interact with your data in the production instance.

Please take care to select the URL for Tokenserver for Tokenserver requests and the Syncstorage URL for Syncstorage requests.

The Prod and Stage environments below will be available as a drop-down in the SwaggerUI:

- Sync Stage: https://sync-us-west1-g.sync.services.allizom.org

- Sync Prod: https://sync-1-us-west1-g.sync.services.mozilla.com

- Tokenserver Stage: https://stage-tokenserver.sync.nonprod.webservices.mozgcp.net

- Tokenserver Prod: https://prod-tokenserver.sync.prod.webservices.mozgcp.net

On GitHub Pages (Static Documentation):

The project automatically publishes API documentation to GitHub Pages:

- Main Documentation: https://mozilla-services.github.io/syncstorage-rs/

- Rust API Docs (cargo doc): https://mozilla-services.github.io/syncstorage-rs/api/

- OpenAPI/Swagger UI: https://mozilla-services.github.io/syncstorage-rs/swagger-ui/

When the service is running (live deployment):

URLs for Swagger and OpenAPI Spec:

- Swagger UI (Interactive): https://<your-deployment-url>/swagger-ui/

- OpenAPI Spec (JSON): https://<your-deployment-url>/api-doc/openapi.json

Replace <your-deployment-url> with:

- Production/Stage: [Add your prod/stage URL here]

- Local Development: http://localhost:8000 (or your configured port)

API Endpoints

The API is organized into three main categories:

Syncstorage Endpoints

Endpoints for Firefox Sync data storage operations:

- GET /1.5/{uid}/info/collections - Get collection timestamps

- GET /1.5/{uid}/info/collection_counts - Get collection counts

- GET /1.5/{uid}/info/collection_usage - Get collection usage

- GET /1.5/{uid}/info/configuration - Get server configuration

- GET /1.5/{uid}/info/quota - Get quota information

- DELETE /1.5/{uid}/storage - Delete all user data

- GET /1.5/{uid}/storage/{collection} - Get BSOs from a collection

- POST /1.5/{uid}/storage/{collection} - Add or update BSOs

- DELETE /1.5/{uid}/storage/{collection} - Delete a collection or BSOs

- GET /1.5/{uid}/storage/{collection}/{bso} - Get a specific BSO

- PUT /1.5/{uid}/storage/{collection}/{bso} - Create or update a BSO

- DELETE /1.5/{uid}/storage/{collection}/{bso} - Delete a specific BSO
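All of the Syncstorage routes share the /1.5/{uid} prefix. The Rust sketch below renders the storage and info paths; storage_path and info_path are hypothetical helpers, the uid is assumed to be numeric, and no percent-encoding is done, so collection names and BSO ids are assumed to be URL-safe already (as 12-character base64-urlsafe BSO ids are).

```rust
/// Render a Syncstorage 1.5 storage path:
/// /1.5/{uid}/storage, /1.5/{uid}/storage/{collection}, or
/// /1.5/{uid}/storage/{collection}/{bso}.
fn storage_path(uid: u64, collection: Option<&str>, bso: Option<&str>) -> Option<String> {
    match (collection, bso) {
        (None, None) => Some(format!("/1.5/{}/storage", uid)),
        (Some(c), None) => Some(format!("/1.5/{}/storage/{}", uid, c)),
        (Some(c), Some(b)) => Some(format!("/1.5/{}/storage/{}/{}", uid, c, b)),
        // A BSO id without a collection is not a valid route.
        (None, Some(_)) => None,
    }
}

/// Render one of the /1.5/{uid}/info/... paths, e.g. "collections" or "quota".
fn info_path(uid: u64, what: &str) -> String {
    format!("/1.5/{}/info/{}", uid, what)
}
```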

Tokenserver Endpoints

Endpoints for Sync node allocation and authentication:

- GET /1.0/{application}/{version} - Get sync token

- GET /__heartbeat__ - Tokenserver health check

Dockerflow Endpoints

Service health and monitoring endpoints:

- GET /__heartbeat__ - Service health check

- GET /__lbheartbeat__ - Load balancer health check

- GET /__version__ - Service version information

Exploring the Sync API

To aid in exploring your own Sync API with Swagger, you may want to acquire your UID and other details about your Sync account. The easiest way to do so is to use the About Sync Extension. Note that this extension only works on Desktop.

- Firefox Extensions Page for About Sync

- GitHub Repository for About Sync

Maintenance

When adding new endpoints:

- Add a #[utoipa::path(...)] annotation to the handler function.

- Add the handler path to ApiDoc in syncserver/src/server/mod.rs.

- If using custom types, derive ToSchema on request/response structs.

- Run cargo run --example generate_openapi_spec to verify the spec generates correctly. Follow the instructions below.

Generating the OpenAPI Spec Locally

If you don’t want to run the full Sync server on your machine to view the API docs, follow these instructions:

Use make api-prev

We created a handy Makefile command called make api-prev that automatically generates the specification file, runs Swagger in Docker and opens your browser to localhost:8080. See the steps below to understand this process. Note this attempts to be platform agnostic, but might require some adaptation depending on your operating system.

Commands to generate the OpenAPI specification without running the server:

# Generate the spec to stdout

cargo run --example generate_openapi_spec

# Save to a file

cargo run --example generate_openapi_spec > openapi.json

Other options:

- Use Docker (simplest; used in make api-prev): this option requires you to have run cargo run --example generate_openapi_spec > openapi.json first. Start Swagger UI with:

docker run -p 8080:8080 -e SWAGGER_JSON=/openapi.json -v $(pwd)/openapi.json:/openapi.json swaggerapi/swagger-ui

and then open http://localhost:8080

- Use online Swagger Editor:

  - Go to https://editor.swagger.io/

  - Copy the contents of openapi.json

  - Paste into the editor

  - View the interactive documentation

- Use VS Code extension:

  - Install “OpenAPI (Swagger) Editor” extension

  - Open openapi.json in VS Code

  - Click “Preview Swagger” to view interactive docs

Publishing to GitHub Pages

The .github/workflows/publish-docs.yaml workflow automatically publishes these docs:

- Generates the OpenAPI spec using the generate_openapi_spec example file.

- Downloads Swagger UI from the official GitHub releases.

- Replaces the default example Swagger API with your Sync API:

  - The default Swagger UI comes configured to display a demo “Pet Store” API

  - We use sed to replace https://petstore.swagger.io/v2/swagger.json with our openapi.json

- Deploys everything to GitHub Pages at:

  - https://mozilla-services.github.io/syncstorage-rs/swagger-ui/

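The workflow is split into the following jobs; the two build jobs run in parallel, and their outputs are then combined and deployed:

- build-mdbook job: Builds mdBook docs + Rust cargo docs

- build-openapi job: Generates OpenAPI spec + sets up Swagger UI

- combine-and-prepare job: Combines both outputs

- deploy job: Deploys to GitHub Pages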

Syncstorage API

The following is comprehensive API documentation.

Legacy API docs are stored here for reference.

SyncStorage API v1.5

The SyncStorage API defines an HTTP web service used to store and retrieve simple objects called Basic Storage Objects (BSOs), which are organized into named collections.

Concepts

Basic Storage Object

A Basic Storage Object (BSO) is the generic JSON wrapper around all items passed into and out of the SyncStorage server. Like all JSON documents, BSOs are composed of Unicode character data rather than raw bytes and must be encoded for transmission over the network. The SyncStorage service always encodes BSOs in UTF-8.

Basic Storage Objects have the following fields:

| Parameter | Default | Type/Max | Description |
|---|---|---|---|
| id | required | string (64) | An identifying string. For a user, the id must be unique for a BSO within a collection, though objects in different collections may have the same ID. BSO ids must only contain printable ASCII characters. They should be exactly 12 base64-urlsafe characters; while this isn’t enforced by the server, the Firefox client expects it in most cases. |
| modified | none | float (2 decimals) | The timestamp at which this object was last modified, in seconds since UNIX epoch (1970-01-01 00:00:00 UTC). Set automatically by the server according to its own clock; any client-supplied value is ignored. |
| sortindex | none | integer (9 digits) | An integer indicating the relative importance of this item in the collection. |
| payload | empty string | string (at least 256KiB) | A string containing the data of the record. The structure of this string is defined separately for each BSO type. This spec makes no requirements for its format; JSON objects are common in practice. Servers must support payloads up to 256KiB. They may accept larger payloads and advertise their maximum payload size via /info/configuration. |
| ttl | none | integer (positive, 9 digits) | The number of seconds to keep this record. After that time this item will no longer be returned in response to any request, and it may be pruned from the database. If not specified or null, the record will not expire. This field may be set on write, but is not returned by the server. |
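The field constraints above can be checked on the client before uploading. The following is a minimal sketch, not the server's actual validation logic: validate_bso is a hypothetical helper, it assumes "printable ASCII" means code points 0x20 to 0x7E, and it takes the payload limit as a parameter because a server may advertise a larger max_record_payload_bytes.

```python
from typing import List

MAX_ID_LENGTH = 64
MAX_NINE_DIGITS = 999_999_999
DEFAULT_MAX_PAYLOAD_BYTES = 256 * 1024  # servers must support at least 256KiB


def _is_printable_ascii(text: str) -> bool:
    # Assumption: "printable ASCII" means 0x20 (space) through 0x7E (~).
    return all(0x20 <= ord(ch) <= 0x7E for ch in text)


def _is_int(value) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def validate_bso(bso: dict, max_payload_bytes: int = DEFAULT_MAX_PAYLOAD_BYTES) -> List[str]:
    """Return human-readable problems with a BSO dict; an empty list means it looks valid."""
    problems = []
    bso_id = bso.get("id")
    if not isinstance(bso_id, str) or not bso_id:
        problems.append("id is required")
    elif len(bso_id) > MAX_ID_LENGTH or not _is_printable_ascii(bso_id):
        problems.append("id must be at most 64 printable ASCII characters")
    sortindex = bso.get("sortindex")
    if sortindex is not None and not (_is_int(sortindex) and abs(sortindex) <= MAX_NINE_DIGITS):
        problems.append("sortindex must be an integer of at most 9 digits")
    ttl = bso.get("ttl")
    if ttl is not None and not (_is_int(ttl) and 0 < ttl <= MAX_NINE_DIGITS):
        problems.append("ttl must be a positive integer of at most 9 digits")
    payload = bso.get("payload")  # None (absent or null) means "use the default"
    if payload is not None:
        if not isinstance(payload, str):
            problems.append("payload must be a string")
        elif len(payload.encode("utf-8")) > max_payload_bytes:
            problems.append("payload is larger than the server accepts")
    # "modified" is deliberately not checked: the server ignores client-supplied values.
    return problems
```

The server remains the authority; a check like this only saves a round trip for records that would certainly be rejected.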

Example:

{

"id": "-F_Szdjg3GzX",

"modified": 1388635807.41,

"sortindex": 140,

"payload": "{ \"this is\": \"an example\" }"

}

Collections

Each BSO is assigned to a collection with other related BSOs. Collection names may be up to 32 characters long, and must contain only characters from the urlsafe-base64 alphabet (alphanumeric characters, underscore and hyphen) and the period.

Collections are created implicitly when a BSO is stored in them for the first time. They continue to exist until explicitly deleted, even if they no longer contain any BSOs.
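The naming rule can be expressed as a single regular expression. This is a sketch, assuming a name must be at least one character long; is_valid_collection_name is a hypothetical helper, and the server remains free to apply further checks (it reports invalid names with response code 13).

```python
import re

# Assumption: 1 to 32 characters from the urlsafe-base64 alphabet
# (A-Z, a-z, 0-9, "-", "_") plus the period.
COLLECTION_NAME_RE = re.compile(r"[A-Za-z0-9_.-]{1,32}")


def is_valid_collection_name(name: str) -> bool:
    """Return True if `name` matches the documented collection-name rule."""
    # fullmatch (unlike a "$" anchor) also rejects a trailing newline.
    return COLLECTION_NAME_RE.fullmatch(name) is not None
```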

The default collections used by Firefox to store sync data are:

- bookmarks

- history

- forms

- prefs

- tabs

- passwords

The following additional collections are used for internal management purposes by the storage client:

- clients

- crypto

- keys

- meta

Timestamps

In order to allow multiple clients to coordinate their changes, the SyncStorage server associates a last-modified time with the data stored for each user. This is a server-assigned decimal value, precise to two decimal places, that is updated from the server’s clock with every modification made to the user’s data.

The last-modified time is tracked at three levels of nesting:

- The store as a whole has a last-modified time that is updated whenever any change is made to the user’s data.

- Each collection has a last-modified time that is updated whenever an item in that collection is modified or deleted. It will always be less than or equal to the overall last-modified time.

- Each BSO has a last-modified time that is updated whenever that specific item is modified. It will always be less than or equal to the last-modified time of the containing collection.

The last-modified time is guaranteed to be monotonically increasing and can be used for coordination and conflict management as described in Syncstorage Concurrency.

Note that the last-modified time of a collection may be larger than that of any item within it. For example, if all items are deleted from the collection, its last-modified time will be the timestamp of the last deletion.
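Because these timestamps always have exactly two decimal places, it can be convenient to compare them exactly rather than as binary floats. The sketch below assumes the timestamps arrive as decimal strings (for example, from the X-Last-Modified header) and converts them to integer hundredths of a second; to_hundredths and nesting_holds are hypothetical helpers that express the nesting rule described above.

```python
from decimal import Decimal


def to_hundredths(timestamp: str) -> int:
    """Convert a timestamp string such as "1388635807.41" to an integer
    number of hundredths of a second, with no floating-point rounding."""
    return int(Decimal(timestamp).scaleb(2))


def nesting_holds(storage_ts: str, collection_ts: str, bso_ts: str) -> bool:
    """Check the documented invariant: BSO <= containing collection <= whole store."""
    return to_hundredths(bso_ts) <= to_hundredths(collection_ts) <= to_hundredths(storage_ts)
```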

API Instructions

The SyncStorage data for a given user may be accessed via authenticated HTTP requests to their SyncStorage API endpoint. Request and response bodies are all UTF-8-encoded JSON unless otherwise specified. All requests are to URLs of the form:

https://<endpoint-url>/<api-instruction>

The user’s SyncStorage endpoint URL can be obtained via the tokenserver authentication flow. All requests must be signed using HAWK Authentication credentials obtained from the tokenserver.

Error responses generated by the SyncStorage server will, wherever possible, conform to the respcodes defined for the User API. The format of a successful response is defined in the appropriate section below.
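For illustration, the core of a Hawk "header" request signature can be sketched with the Python standard library. This is a simplified sketch under stated assumptions, not a substitute for an established Hawk library: it assumes the tokenserver supplies an id and a key for SHA-256, that the key string is used directly (UTF-8 encoded) as the HMAC key, and it omits the optional payload hash and ext fields, whose lines in the normalized string are left empty. hawk_header is a hypothetical name.

```python
import base64
import hashlib
import hmac
import os
import time
from typing import Optional
from urllib.parse import urlsplit


def hawk_header(method: str, url: str, hawk_id: str, hawk_key: str,
                ts: Optional[int] = None, nonce: Optional[str] = None) -> str:
    """Build an Authorization header value (Hawk 1 header scheme, no payload hash)."""
    parts = urlsplit(url)
    resource = (parts.path or "/") + ("?" + parts.query if parts.query else "")
    port = parts.port or (443 if parts.scheme == "https" else 80)
    ts = int(time.time()) if ts is None else ts
    nonce = nonce or base64.urlsafe_b64encode(os.urandom(6)).decode("ascii")
    # Normalized request string: one field per line; the hash and ext lines are empty.
    normalized = "\n".join([
        "hawk.1.header", str(ts), nonce, method.upper(), resource,
        parts.hostname.lower(), str(port), "", "",
    ]) + "\n"
    digest = hmac.new(hawk_key.encode("utf-8"), normalized.encode("utf-8"), hashlib.sha256).digest()
    mac = base64.b64encode(digest).decode("ascii")
    return f'Hawk id="{hawk_id}", ts="{ts}", nonce="{nonce}", mac="{mac}"'
```

A client would send the result as the Authorization header, e.g. headers = {"Authorization": hawk_header("GET", url, token["id"], token["key"])}, where token stands for the (hypothetical) decoded tokenserver response.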

General Info

APIs in this section provide high-level interactions with the user’s data store as a whole.

GET https://<endpoint-url>/info/collections

Returns an object mapping collection names associated with the account to the last-modified time for each collection.

The server may allow requests to this endpoint to be authenticated with an expired token, so that clients can check for server-side changes before fetching an updated token from the tokenserver.
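A typical use of this endpoint is deciding which collections need to be fetched. The sketch below assumes the response body has been decoded into a dict and that the client keeps its own (hypothetical) cache of previously seen timestamps; it does not report collections that disappeared from the server response, which a real client would treat as deleted.

```python
from typing import Dict, List


def collections_to_sync(server_info: Dict[str, float], last_synced: Dict[str, float]) -> List[str]:
    """Return collections whose server last-modified time is newer than the cached one."""
    return sorted(
        name for name, modified in server_info.items()
        if modified > last_synced.get(name, 0)
    )
```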

GET https://<endpoint-url>/info/quota

Returns a two-item list giving the user’s current usage and quota (in KB). The second item will be null if the server does not enforce quotas.

Note that usage numbers may be approximate.

GET https://<endpoint-url>/info/collection_usage

Returns an object mapping collection names associated with the account to the data volume used for each collection (in KB).

Note that this request may be very expensive, as it calculates more detailed and accurate usage information than the request to /info/quota.

GET https://<endpoint-url>/info/collection_counts

Returns an object mapping collection names associated with the account to the total number of items in each collection.

GET https://<endpoint-url>/info/configuration

Provides information about the configuration of this storage server with respect to various protocol and size limits. Returns an object mapping configuration item names to their values as enforced by this server. The following configuration items may be present:

- max_request_bytes: maximum size in bytes of the overall HTTP request body.

- max_post_records: maximum number of records in a single POST.

- max_post_bytes: maximum combined payload size in bytes for a single POST.

- max_total_records: maximum total number of records in a batched upload.

- max_total_bytes: maximum total combined payload size, in bytes, of a batched upload.

- max_record_payload_bytes: maximum size of an individual BSO payload, in bytes.
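A client can use these values to split its uploads. The sketch below is one possible approach, not the Firefox client's algorithm: chunk_for_post is hypothetical, it falls back to 100 records per POST (the default limit documented for POST requests) when max_post_records is absent, treats other missing limits as unlimited, and ignores max_request_bytes (JSON framing overhead) and the batch-wide totals.

```python
from typing import Dict, List


def chunk_for_post(records: List[dict], config: Dict[str, int]) -> List[List[dict]]:
    """Split records into POST-sized chunks using limits from /info/configuration."""
    max_records = config.get("max_post_records", 100)
    max_bytes = config.get("max_post_bytes")
    max_payload = config.get("max_record_payload_bytes")
    chunks: List[List[dict]] = []
    current: List[dict] = []
    current_bytes = 0
    for record in records:
        size = len((record.get("payload") or "").encode("utf-8"))
        if max_payload is not None and size > max_payload:
            raise ValueError(f"payload of {record['id']!r} exceeds max_record_payload_bytes")
        full = len(current) >= max_records or (
            max_bytes is not None and current_bytes + size > max_bytes)
        if current and full:
            # Start a new chunk rather than exceed a per-POST limit.
            chunks.append(current)
            current, current_bytes = [], 0
        current.append(record)
        current_bytes += size
    if current:
        chunks.append(current)
    return chunks
```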

DELETE https://<endpoint-url>/storage

Deletes all records for the user. This URL is provided for backwards compatibility; new clients should use DELETE https://<endpoint-url>.

DELETE https://<endpoint-url>

Deletes all records for the user.

Individual Collection Interaction

APIs in this section provide a mechanism for interacting with a single collection.

GET https://<endpoint-url>/storage/<collection>

Returns a list of the BSOs contained in a collection. For example:

["GXS58IDC_12", "GXS58IDC_13", "GXS58IDC_15"]

By default only the BSO ids are returned, but full objects can be requested using the full parameter. If the collection does not exist, an empty list is returned.

Optional query parameters:

- ids: comma-separated list of ids; only those ids will be returned (max 100).

- newer: timestamp; return only items with modified time strictly greater than this.

- older: timestamp; return only items with modified time strictly smaller than this.

- full: any value; return full BSO objects rather than ids.

- limit: positive integer; return at most this many objects. If more objects match, the response includes an X-Weave-Next-Offset header.

- offset: string token from a previous X-Weave-Next-Offset header.

- sort: ordering of the results:

  - newest: orders by last-modified time, largest first
  - oldest: orders by last-modified time, smallest first
  - index: orders by sortindex, highest weight first

The response may include an X-Weave-Records header indicating the total number of records, if the server can efficiently provide it.

If limit is provided and more items match, the response will include an X-Weave-Next-Offset header. Pass that value back as offset to fetch more items. See "Example: paging through a large set of items" below.

Output formats for multi-record GET requests are selected by the Accept header and prioritized in this order:

- application/json: JSON list of records (ids or full objects).

- application/newlines: each record followed by a newline (id or full object).

Potential HTTP error responses include:

- 400 Bad Request: too many ids were included in the query parameter.
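The query parameters above can be assembled with urllib.parse.urlencode. This sketch rejects the values the text says the server refuses (more than 100 ids, a non-positive limit, an unknown sort order); collection_query is a hypothetical helper, and formatting newer/older with two decimals is an assumption that matches the server's timestamp precision.

```python
from typing import Iterable, List, Optional, Tuple
from urllib.parse import urlencode

SORT_ORDERS = {"newest", "oldest", "index"}
MAX_IDS = 100


def collection_query(ids: Optional[Iterable[str]] = None, newer: Optional[float] = None,
                     older: Optional[float] = None, full: bool = False,
                     limit: Optional[int] = None, offset: Optional[str] = None,
                     sort: Optional[str] = None) -> str:
    """Build the query string for GET /storage/<collection>."""
    params: List[Tuple[str, str]] = []
    if ids is not None:
        id_list = list(ids)
        if len(id_list) > MAX_IDS:
            raise ValueError("at most 100 ids may be requested at once")
        params.append(("ids", ",".join(id_list)))
    if newer is not None:
        params.append(("newer", f"{newer:.2f}"))
    if older is not None:
        params.append(("older", f"{older:.2f}"))
    if full:
        params.append(("full", "1"))  # any value requests full BSOs
    if limit is not None:
        if limit <= 0:
            raise ValueError("limit must be a positive integer")
        params.append(("limit", str(limit)))
    if offset is not None:
        params.append(("offset", offset))  # opaque X-Weave-Next-Offset value, passed back unchanged
    if sort is not None:
        if sort not in SORT_ORDERS:
            raise ValueError(f"sort must be one of {sorted(SORT_ORDERS)}")
        params.append(("sort", sort))
    return urlencode(params)
```

For example, collection_query(newer=1388635807.41, full=True, limit=10, sort="newest") produces newer=1388635807.41&full=1&limit=10&sort=newest.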

GET https://<endpoint-url>/storage/<collection>/<id>

Returns the BSO in the collection corresponding to the requested id.

PUT https://<endpoint-url>/storage/<collection>/<id>

Creates or updates a specific BSO within a collection. The request body must be a JSON object containing new data for the BSO.

If the target BSO already exists, it will be updated with the data from the request body. Fields not provided will not be overwritten, so it is possible to update ttl without re-submitting payload. Fields explicitly set to null will be set to their default value by the server.

If the target BSO does not exist, then fields not provided in the request body will be set to their default value by the server.
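These update rules can be modelled in a few lines. This is a toy model, not the server's implementation: apply_put is hypothetical, FIELD_DEFAULTS mirrors the defaults in the BSO field table with "none" represented as Python None (an assumption), and the server-assigned modified field is left out.

```python
from typing import Optional

# Client-settable fields and the defaults the server applies (see the BSO field table).
FIELD_DEFAULTS = {"payload": "", "sortindex": None, "ttl": None}
_MISSING = object()


def apply_put(bso_id: str, existing: Optional[dict], body: dict) -> dict:
    """Merge a PUT body into the stored BSO (or into defaults for a new BSO)."""
    result = {"id": bso_id, **FIELD_DEFAULTS} if existing is None else dict(existing)
    for field, default in FIELD_DEFAULTS.items():
        value = body.get(field, _MISSING)
        if value is _MISSING:
            continue  # not provided: keep the stored value (or the default for a new BSO)
        result[field] = default if value is None else value  # explicit null resets to default
    return result
```

For example, a body of {"ttl": 60} changes only ttl on an existing record, leaving payload and sortindex untouched.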

This request may include the X-If-Unmodified-Since header to avoid overwriting data if it has changed since the client fetched it.

Successful responses return the new last-modified time for the collection.

Potential HTTP error responses include:

- 400 Bad Request: user has exceeded their storage quota.

- 413 Request Entity Too Large: the object is larger than the server will store.

POST https://<endpoint-url>/storage/<collection>

Takes a list of BSOs in the request body and iterates over them, effectively doing a series of individual PUTs with the same timestamp.

Each BSO must include an id field. The corresponding BSO will be created or updated according to the semantics of a PUT request targeting that record; in particular, fields not provided will not be overwritten on BSOs that already exist.

Input formats for multi-record POST requests are selected by Content-Type:

- application/json: JSON list of BSO objects.

- application/newlines: each BSO is a JSON object followed by a newline.

For backwards-compatibility, text/plain is also treated as JSON.
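An application/newlines request body can be produced with the standard json module. This is a sketch with a hypothetical helper name; it relies on json.dumps escaping any newline inside string values, so each serialized BSO stays on a single line.

```python
import json
from typing import List


def to_newlines(bsos: List[dict]) -> bytes:
    """Serialize BSOs for a POST with Content-Type: application/newlines."""
    # Compact separators keep each line short; "\n" terminates every object.
    return "".join(json.dumps(bso, separators=(",", ":")) + "\n" for bso in bsos).encode("utf-8")
```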

Servers may impose limits on request size and/or the number of BSOs per request. The default limit is 100 BSOs per request.

Successful responses contain a JSON object with:

- modified: new last-modified time for updated items.

- success: list of ids successfully stored.

- failed: object mapping ids to a string describing the failure.

For example:

{

"modified": 1233702554.25,

"success": ["GXS58IDC_12", "GXS58IDC_13", "GXS58IDC_15",

"GXS58IDC_16", "GXS58IDC_18", "GXS58IDC_19"],

"failed": {"GXS58IDC_11": "invalid ttl",

"GXS58IDC_14": "invalid sortindex"}

}

Posted BSOs whose ids do not appear in either success or failed should be treated as failed for an unspecified reason.
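On the client, this rule amounts to a small post-processing step on the response body. classify_post_result is a hypothetical helper, and the failure message it assigns to unreported ids is an arbitrary placeholder.

```python
from typing import Dict, Iterable, Set, Tuple


def classify_post_result(sent_ids: Iterable[str], body: dict) -> Tuple[Set[str], Dict[str, str]]:
    """Split the ids sent in a POST into (succeeded, failed).
    Ids missing from both lists in the response are treated as failed."""
    succeeded = set(body.get("success", []))
    failed = dict(body.get("failed", {}))
    for bso_id in sent_ids:
        if bso_id not in succeeded and bso_id not in failed:
            failed[bso_id] = "unspecified failure"
    return succeeded, failed
```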

Batch uploads

To allow upload of large numbers of items while ensuring that other clients do not sync down inconsistent data, servers may support combining several POST requests into a single “batch” so that all modified BSOs appear to have been submitted at the same time. Batching is controlled via query parameters:

- batch:

  - to begin a new batch: pass the string true
  - to add to an existing batch: pass a previously-obtained batch identifier
  - ignored by servers that do not support batching

- commit:

  - if present, must be true
  - the batch parameter must also be specified

When submitting items for a multi-request batch upload, successful responses will have status 202 Accepted and will include a JSON object containing the batch identifier along with per-item status, e.g.:

{

"batch": "OPAQUEBATCHID",

"success": ["GXS58IDC_12", "GXS58IDC_13", "GXS58IDC_15",

"GXS58IDC_16", "GXS58IDC_18", "GXS58IDC_19"],

"failed": {"GXS58IDC_11": "invalid ttl",

"GXS58IDC_14": "invalid sortindex"}

}

The returned batch value can be passed back in the batch query parameter to add more items. Items in success are guaranteed to become available if and when the batch is successfully committed.

The value of batch may not be safe to include directly in a URL; it must be URL-encoded first (e.g., JavaScript encodeURIComponent, Python urllib.parse.quote, or equivalent).
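A small helper can build the query string for each request of a batch upload, URL-encoding the opaque batch identifier as required. This is a hypothetical client-side sketch; passing safe="" makes urllib.parse.quote encode every reserved character, including "/", which its default would leave alone.

```python
from typing import Optional
from urllib.parse import quote


def batch_query(batch_id: Optional[str] = None, commit: bool = False) -> str:
    """Query string for one POST in a batch upload.
    batch_id=None starts a new batch (batch=true); commit=True adds commit=true."""
    query = "batch=" + ("true" if batch_id is None else quote(batch_id, safe=""))
    if commit:
        query += "&commit=true"  # commit is only valid together with batch
    return query
```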

If the server does not support batching, it will ignore batch and return 200 OK without a batch identifier.

The response when committing a batch is identical to a non-batched request.

The semantics of batch=true&commit=true (start and commit immediately) are identical to those of a non-batched request.

Servers may impose limits on the total payload size and/or number of BSOs in a batch. If a limit is exceeded, the server returns 400 Bad Request with response code 17.

Where possible, clients should use the X-Weave-Total-Records and X-Weave-Total-Bytes headers to signal the expected total upload size, so that oversized batches can be rejected before upload.

Potential HTTP error responses include:

- 400 Bad Request, response code 14: user has exceeded storage quota.

- 400 Bad Request, response code 17: server size or item-count limit exceeded.

- 413 Request Entity Too Large: request contains more data than server will process.

DELETE https://<endpoint-url>/storage/<collection>

Deletes an entire collection.

After executing this request, the collection will not appear in GET /info/collections, and calls to GET /storage/<collection> will return an empty list.

DELETE https://<endpoint-url>/storage/<collection>?ids=<ids>

Deletes multiple BSOs from a collection with a single request.

Selection parameter:

- ids: comma-separated list of ids to delete (max 100).

The collection itself still exists after this request. Even if all BSOs are deleted, it will receive an updated last-modified time, appear in GET /info/collections, and be readable via GET /storage/<collection>.

Successful responses include a JSON body with "modified" giving the new last-modified time for the collection.

Potential HTTP error responses include:

- 400 Bad Request: too many ids were included in the query parameter.
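To delete more than 100 ids without triggering the 400 response, a client can split them across several requests. This sketch uses a hypothetical helper; note that each request is a separate write, so the deletions are not atomic as a group and the collection's last-modified time advances once per request.

```python
from typing import List, Sequence
from urllib.parse import quote

MAX_IDS_PER_DELETE = 100


def delete_id_queries(ids: Sequence[str], max_ids: int = MAX_IDS_PER_DELETE) -> List[str]:
    """Split ids into query strings for DELETE /storage/<collection>?ids=...,
    each carrying at most `max_ids` ids."""
    return [
        "ids=" + quote(",".join(ids[i:i + max_ids]), safe=",")
        for i in range(0, len(ids), max_ids)
    ]
```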

DELETE https://<endpoint-url>/storage/<collection>/<id>

Deletes the BSO at the given location.

Request Headers

X-If-Modified-Since

May be added to any GET request as a decimal timestamp. If the last-modified time of the resource is less than or equal to the given value, the server returns 304 Not Modified.

Similar to HTTP If-Modified-Since, but uses a decimal timestamp rather than an HTTP date.

If the value is not a valid positive decimal, or if X-If-Unmodified-Since is also present, the server returns 400 Bad Request.

X-If-Unmodified-Since

May be added to any request to a collection or item as a decimal timestamp. If the last-modified time of the resource is greater than the given value, the request fails with 412 Precondition Failed.

Similar to HTTP If-Unmodified-Since, but uses a decimal timestamp rather than an HTTP date.

If the value is not a valid positive decimal, or if X-If-Modified-Since is also present, the server returns 400 Bad Request.
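The two precondition headers can be evaluated as follows. This is a server-side sketch, not syncstorage-rs code, under these assumptions: headers arrive as a plain dict using the exact casing shown, last_modified is the resource's last-modified time as a Decimal (0 for a resource that does not exist yet), "valid positive decimal" is read as non-negative so that the X-If-Unmodified-Since: 0 idiom in the examples works, and X-If-Modified-Since is applied only to GET.

```python
from decimal import Decimal, InvalidOperation
from typing import Mapping, Optional


def _parse_timestamp(value: str) -> Optional[Decimal]:
    """Parse a decimal timestamp header; None if it is not a finite, non-negative number."""
    try:
        ts = Decimal(value)
    except InvalidOperation:
        return None
    return ts if ts.is_finite() and ts >= 0 else None


def check_preconditions(method: str, headers: Mapping[str, str],
                        last_modified: Decimal) -> Optional[int]:
    """Return the status code to answer with (304, 400 or 412), or None to proceed."""
    since = headers.get("X-If-Modified-Since")
    unmodified = headers.get("X-If-Unmodified-Since")
    if since is not None and unmodified is not None:
        return 400  # the two headers are mutually exclusive
    if since is not None:
        ts = _parse_timestamp(since)
        if ts is None:
            return 400
        if method == "GET" and last_modified <= ts:
            return 304
    if unmodified is not None:
        ts = _parse_timestamp(unmodified)
        if ts is None:
            return 400
        if last_modified > ts:
            return 412
    return None
```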

X-Weave-Records

May be sent with multi-record uploads to indicate the total number of records included.

If the server would not accept that many records, it returns 400 Bad Request with response code 17.

X-Weave-Bytes

May be sent with multi-record uploads to indicate the combined payload size in bytes.

If the server would not accept that many bytes, it returns 400 Bad Request with response code 17.

X-Weave-Total-Records

May be included with a POST request using batch to indicate the total number of records in the batch.

If the server would not accept that many records, it returns 400 Bad Request with response code 17.

If the value is not a valid positive integer, or the request is not operating on a batch, the server returns 400 Bad Request with response code 1.

X-Weave-Total-Bytes

May be included with a POST request using batch to indicate the total payload size in bytes for the batch.

If the server would not accept that many bytes, it returns 400 Bad Request with response code 17.

If the value is not a valid positive integer, or the request is not operating on a batch, the server returns 400 Bad Request with response code 1.

Response Headers

### Retry-After

- With HTTP 503: the server is undergoing maintenance; the client should not attempt further requests for the specified number of seconds.

- With HTTP 409: indicates the time after which conflicting edits are expected to complete; clients should wait at least this long before retrying.

X-Weave-Backoff

Indicates that the server is under heavy load but still capable of servicing requests.

Unlike Retry-After, it may be included with any response, including 200 OK.

Clients should make only the minimum additional requests required to maintain consistency, then stop for the specified number of seconds.
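A client can combine the two backoff signals into a single pause. This sketch assumes both headers carry an integer number of seconds and takes the longer of the two; backoff_seconds is hypothetical, and finishing the "minimum additional requests" before pausing is left to the caller.

```python
from typing import Mapping


def backoff_seconds(status: int, headers: Mapping[str, str]) -> int:
    """Seconds to pause before further sync requests, from Retry-After
    (meaningful with 409 and 503) and X-Weave-Backoff (any response)."""
    waits = [0]
    if status in (409, 503) and "Retry-After" in headers:
        waits.append(int(headers["Retry-After"]))
    if "X-Weave-Backoff" in headers:
        waits.append(int(headers["X-Weave-Backoff"]))
    return max(waits)
```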

X-Last-Modified

Last-modified time of the target resource during processing. Included in all success responses (200, 201, 204). Similar to HTTP Last-Modified, but uses a decimal timestamp.

For write requests, equals the server's current time and the new last-modified time of created or changed BSOs.

X-Weave-Timestamp

Returned with all responses, indicating the current server timestamp. Similar to HTTP Date, but uses seconds since the epoch with two decimal places.

For write requests, equals the new last-modified time of created or changed BSOs (the same as X-Last-Modified).

For successful read requests, is greater than or equal to both X-Last-Modified and the modified timestamp of any returned BSOs.

Clients must not use X-Weave-Timestamp for coordination or conflict management; use last-modified timestamps as described in Concurrency and Conflict Management.

X-Weave-Records

May be returned with multi-record responses indicating total number of records in the response.

X-Weave-Next-Offset

May be returned with multi-record responses when limit was provided and more records are available.

Value can be passed back as offset to retrieve additional records.

Always a string from the urlsafe-base64 alphabet; clients must treat it as opaque.

X-Weave-Quota-Remaining

May be returned in response to write requests indicating remaining storage space (KB). Not returned if quotas are disabled.

X-Weave-Alert

May be returned in response to any request and contains warning/informational alerts.

If the first character is not {, it is a human-readable string.

If the first character is {, it is a JSON object signalling an impending shutdown, containing:

- code: "soft-eol" or "hard-eol"

- message: human-readable message

- url: URL for more information
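Both alert forms can be normalized into one shape so the client can react uniformly, for example stopping when code is "hard-eol". parse_weave_alert is a hypothetical helper; plain-text alerts get code and url set to None.

```python
import json


def parse_weave_alert(value: str) -> dict:
    """Normalize an X-Weave-Alert header value into {"code", "message", "url"}."""
    if value.startswith("{"):
        alert = json.loads(value)  # end-of-life announcement
        return {"code": alert.get("code"), "message": alert.get("message", ""), "url": alert.get("url")}
    return {"code": None, "message": value, "url": None}  # human-readable string
```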

HTTP Status Codes

Since the protocol is implemented on HTTP, clients should handle any valid HTTP response. This section highlights the explicit protocol response codes.

200 OK

Request processed successfully; response body contains useful information.

304 Not Modified

For requests with X-If-Modified-Since, indicates resource has not been modified; client should use local copy.

400 Bad Request

Request or supplied data is invalid and cannot be processed. Returned for malformed headers or unparsable JSON.

If Content-Type is application/json, the body will be an integer response code as documented in respcodes.

Codes of particular meaning include:

- 6: JSON parse failure
- 8: invalid BSO
- 13: invalid collection (invalid characters in collection name)
- 14: user exceeded storage quota
- 16: client known to be incompatible with server
- 17: server limit exceeded (too many items or too large payload)

401 Unauthorized

Authentication credentials are invalid on this node (node reassignment or an expired/invalid auth token). The client should check with the tokenserver whether the endpoint URL has changed; if so, abort and retry against the new endpoint.

404 Not Found

Resource not found. May be returned for GET/DELETE on non-existent items. Non-existent collections do not trigger 404 for backwards-compatibility reasons.

405 Method Not Allowed

URL does not support the request method (e.g., PUT to /info/quota).

409 Conflict

Write request (PUT, POST, DELETE) rejected due to conflicting changes by another client. Client should retry after accounting for changes from other clients.

May include Retry-After indicating when conflicting edits are expected to complete.

412 Precondition Failed

For requests with X-If-Unmodified-Since, indicates resource has been modified more recently than the given time.

Write is not performed.

413 Request Entity Too Large

Write request body (PUT, POST) larger than server will accept. For multi-record POST, retry with smaller batches.

415 Unsupported Media Type

Content-Type for PUT/POST specifies an unsupported data format.

503 Service Unavailable

Server undergoing maintenance. Includes Retry-After. The client should not attempt another sync for the specified number of seconds.

Response body may contain a JSON string describing status/error.

513 Service Decommissioned

Service has been decommissioned. Includes X-Weave-Alert header with a JSON object:

- code: "hard-eol"

- message: human-readable message

- url: URL for more info

Client should display message to user and cease further attempts to use the service.
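The status codes above can be summarized as a lookup from response to client reaction. This is a sketch, not an exhaustive client: the action strings paraphrase the descriptions in this section, and the fallback for statuses not listed here (treating the sync as failed) is an assumption.

```python
import json

# 400 response codes of particular meaning, as listed above.
RESPONSE_CODES = {
    6: "JSON parse failure",
    8: "invalid BSO",
    13: "invalid collection",
    14: "user exceeded storage quota",
    16: "client known to be incompatible with server",
    17: "server limit exceeded",
}

# Client reactions paraphrased from the status code descriptions above.
ACTIONS = {
    304: "use the local copy",
    401: "ask the tokenserver whether the endpoint changed; if so, retry there",
    404: "item does not exist",
    409: "wait (honouring Retry-After), account for other clients' changes, retry",
    412: "re-fetch, merge and retry the write",
    413: "retry with smaller requests",
    503: "stop syncing for Retry-After seconds",
    513: "show the X-Weave-Alert message and stop using the service",
}


def next_step(status: int, content_type: str = "", body: str = "") -> str:
    """Map a SyncStorage response to a suggested client reaction."""
    if 200 <= status < 300:
        return "success"
    if status == 400:
        if content_type.startswith("application/json"):
            code = json.loads(body)  # the body is a bare integer response code
            return "bad request: " + RESPONSE_CODES.get(code, f"response code {code}")
        return "bad request"
    return ACTIONS.get(status, "unexpected status; treat the sync as failed")
```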

Concurrency and Conflict Management

The SyncStorage service allows multiple clients to synchronize data via a shared server without requiring inter-client coordination or blocking. To achieve proper synchronization without skipping or overwriting data, clients are expected to use timestamp-driven coordination features such as X-Last-Modified and X-If-Unmodified-Since.

The server guarantees a strictly consistent and monotonically-increasing timestamp across the user’s stored data. Any request that alters the contents of a collection will cause the last-modified time to increase. Any BSOs added or modified by such a request will have their modified field set to the updated timestamp.

Conceptually, each write request performs the following operations as an atomic unit:

- Read the current time T and check that it is greater than the overall last-modified time; if not, return 409 Conflict.

- Create new BSOs as specified, setting their modified to T.

- Modify existing BSOs as specified, setting their modified to T.

- Delete specified BSOs.

- Set the collection last-modified time to T.

- Set the overall last-modified time for the user’s data to T.

- Generate 200 OK with X-Last-Modified and X-Weave-Timestamp set to T.
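The write procedure above can be modelled in memory to make the ordering concrete. This is a toy sketch, not how syncstorage-rs stores data: UserStore is hypothetical, a threading.Lock stands in for the atomicity a database transaction would provide, updates simply merge the supplied fields, and the clock is injectable so the 409 case can be exercised.

```python
import threading
import time
from decimal import Decimal
from typing import Callable, Dict, Iterable, Optional, Tuple


class UserStore:
    """In-memory model of one user's data; the lock makes each write atomic."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        self._lock = threading.Lock()
        self.storage_modified = Decimal("0")
        self.collection_modified: Dict[str, Decimal] = {}
        self.bsos: Dict[Tuple[str, str], dict] = {}

    def _now(self) -> Decimal:
        # Server timestamps carry two decimal places.
        return Decimal(str(self._clock())).quantize(Decimal("0.01"))

    def write(self, collection: str, upserts: Iterable[dict] = (),
              deletes: Iterable[str] = ()) -> Tuple[int, Optional[Dict[str, str]]]:
        with self._lock:
            t = self._now()
            if t <= self.storage_modified:
                return 409, None  # clock has not advanced past the last write
            for bso in upserts:
                key = (collection, bso["id"])
                record = dict(self.bsos.get(key, {}))
                record.update(bso)  # create or modify
                record["modified"] = t
                self.bsos[key] = record
            for bso_id in deletes:
                self.bsos.pop((collection, bso_id), None)
            self.collection_modified[collection] = t
            self.storage_modified = t
            ts = str(t)
            return 200, {"X-Last-Modified": ts, "X-Weave-Timestamp": ts}
```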

Writes from different clients may be processed concurrently but appear sequential and atomic to clients.

To avoid retransmitting unchanged data, clients should set X-If-Modified-Since and/or the newer parameter to the last known value of X-Last-Modified on the target resource.

To avoid overwriting changes, clients should set X-If-Unmodified-Since to the last known value of X-Last-Modified on the target resource.

Examples

Example: polling for changes to a BSO

Use GET /storage/<collection>/<id> with X-If-Modified-Since set to the last known X-Last-Modified:

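# `server` is a placeholder HTTP client whose get/post/put methods return a
# response with .status, .headers and .json_body. Header values are strings.
last_modified = "0"

while True:
    headers = {"X-If-Modified-Since": last_modified}
    r = server.get("/collection/id", headers)
    if r.status != 304:
        print("MODIFIED ITEM:", r.json_body)
        last_modified = r.headers["X-Last-Modified"]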

Example: polling for changes to a collection

Use GET /storage/<collection> with newer set to last known X-Last-Modified:

last_modified = "0"

while True:
    # full=1 requests complete BSOs; the response body is a JSON list.
    r = server.get("/collection?full=1&newer=" + last_modified)
    for item in r.json_body:
        print("MODIFIED ITEM:", item)
    last_modified = r.headers["X-Last-Modified"]

Example: safely updating items in a collection

Use POST /storage/<collection> with X-If-Unmodified-Since:

r = server.get("/collection")
last_modified = r.headers["X-Last-Modified"]

bsos = generate_changes_to_the_collection()

headers = {"X-If-Unmodified-Since": last_modified}
r = server.post("/collection", bsos, headers)
if r.status == 412:
    print("WRITE FAILED DUE TO CONCURRENT EDITS")

The client may abort, or merge and retry with the updated X-Last-Modified. A similar technique works for PUT /storage/<collection>/<id>.

Example: creating a BSO only if it does not exist

Use X-If-Unmodified-Since: 0:

headers = {"X-If-Unmodified-Since": "0"}
r = server.put("/collection/item", data, headers)
if r.status == 412:
    print("ITEM ALREADY EXISTS")

Example: paging through a large set of items

Use limit and offset, combining with X-If-Unmodified-Since to guard against concurrent changes:

r = server.get("/collection?limit=100")
print("GOT ITEMS:", r.json_body)

last_modified = r.headers["X-Last-Modified"]
next_offset = r.headers.get("X-Weave-Next-Offset")

while next_offset:
    headers = {"X-If-Unmodified-Since": last_modified}
    r = server.get("/collection?limit=100&offset=" + next_offset, headers)
    if r.status == 412:
        print("COLLECTION WAS MODIFIED WHILE READING ITEMS")
        break
    print("GOT ITEMS:", r.json_body)
    next_offset = r.headers.get("X-Weave-Next-Offset")

Example: uploading a large batch of items

Combine multiple POSTs into a single batch with batch and commit, always using X-If-Unmodified-Since:

import urllib.parse

# Make an initial request to start a batch upload.
# It's possible to send some items here, but not required.
r = server.post("/collection?batch=true", [])

# Note that the batch id is opaque and cannot be safely put in a URL directly.
batch_id = urllib.parse.quote(r.json_body["batch"], safe="")

# Always use X-If-Unmodified-Since to detect conflicts.
last_modified = r.headers["X-Last-Modified"]
headers = {"X-If-Unmodified-Since": last_modified}

for items in split_items_into_smaller_batches():
    # Send the items in several smaller batches.
    r = server.post("/collection?batch=" + batch_id, items, headers)
    if r.status == 412:
        raise Exception("COLLECTION WAS MODIFIED WHILE UPLOADING ITEMS")
    # The collection will not be modified yet.
    assert r.headers["X-Last-Modified"] == last_modified

# Commit the batch once all items are uploaded.
# Again, it's possible to send some final items here, but not required.
r = server.post("/collection?commit=true&batch=" + batch_id, [], headers)
if r.status == 412:
    raise Exception("COLLECTION WAS MODIFIED WHILE COMMITTING ITEMS")

# At this point all the uploaded items become visible,
# and the collection appears modified to other clients.
# Compare numerically: header values are decimal strings.
assert float(r.headers["X-Last-Modified"]) > float(last_modified)

Changes from v1.1

The following is a summary of protocol changes from Storage API v1.1 along with a justification for each change:

| What Changed | Why |
|---|---|
| Authentication is now performed using a BrowserID-based tokenserver flow and HAWK Access Authentication. | Supports authentication via Mozilla accounts and allows iteration of flow details without changing the sync protocol. |
| The structure of the endpoint URL is no longer specified, and should be considered an implementation detail. | Removes unnecessary coupling; clients do not need to configure endpoint components. Needed to support TokenServer-based auth. |
| The datatypes and defaults of BSO fields are more precisely specified. | Reflects current server behavior and is safer to specify explicitly. |
| The BSO fields parentid and predecessorid have been removed along with related query parameters. | Deprecated in 1.1 and not in active use in current Firefox. |
| The application/whoisi output format has been removed. | Not used in current Firefox. |
| The previously-undocumented X-Weave-Quota-Remaining header has been documented. | It is used, so it should be documented. |
| The X-Confirm-Delete header has been removed. | Sent unconditionally by existing client code and therefore useless; safely ignored by the server. |
| The X-Weave-Alert header has grown additional semantics related to service end-of-life announcements. | Already implemented in Firefox; should be documented. |
| GET /storage/<collection> no longer accepts index_above or index_below. | Not used in current Firefox; adds server requirements limiting operational flexibility. |
| DELETE /storage/<collection> no longer accepts query parameters other than ids. | Not used in current Firefox; not all implemented correctly; adds server requirements limiting flexibility. |
| POST /storage/<collection> now accepts application/newlines input in addition to application/json. | Matches application/newlines output; may enable streaming; existing client code need not change. |
| The offset parameter is now an opaque server-generated value; clients must not create their own values. | Existing semantics hard to implement efficiently; enables more efficient pagination in future. |
| The X-Last-Modified header has been added. | Different semantics from X-Weave-Timestamp; enables better conflict management; existing clients need not change. |
| The X-If-Modified-Since header has been added and can be used on all GET requests. | Allows future clients to avoid redundant data transmission. |
| The X-If-Unmodified-Since header can be used on some GET requests. | Allows future clients to detect changes during paginated fetches. |
| Server may reject concurrent writes with 409 Conflict. | Visible to existing clients but can be handled like 503; provides stronger consistency guarantees. |
| Batch uploads are supported across several POST requests. | Backwards-compatible extension for consistent uploads. |
| Size limits can be read from a new /info/configuration endpoint. | Backwards-compatible extension for interoperability with configurable server behavior. |

Storage API v1.1 (Obsolete)

This document describes the legacy Sync Server Storage API, version 1.1. It has been superseded by Sync API v1.5.

The Storage server provides web services that can be used to store and retrieve Weave Basic Objects (WBOs) organized into collections.

Weave Basic Object

A Weave Basic Object (WBO) is the generic JSON wrapper around all items passed into and out of the storage server. Like all JSON, WBOs must be UTF-8 encoded. WBOs have the following fields:

| Parameter | Default | Type / Max | Description |
|---|---|---|---|
| id | required | string (64) | An identifying string. For a user, the id must be unique for a WBO within a collection, though objects in different collections may have the same ID. This should be exactly 12 characters from the base64url alphabet. While not enforced by the server, the Firefox client expects this in most cases. |
| modified | time submitted | float (2 decimals) | The last-modified date, in seconds since 1970-01-01. Set automatically by the server. |
| sortindex | none | integer | Indicates the relative importance of this item in the collection. |
| payload | none | string (256k) | A JSON structure encapsulating the data of the record. Defined separately per WBO type. Parts may be encrypted and include decryption metadata. |
| ttl | none | integer | Number of seconds to keep this record. After expiration, it will not be returned. |
| parentid | none | string (64) | The id of a parent object in the same collection. Used to create hierarchical structures. (Deprecated) |
| predecessorid | none | string (64) | The id of a predecessor in the same collection. Used to create linked-list-like structures. (Deprecated) |

Notes:

- Deprecated fields are likely to be removed in future versions.

- See ECMA-262 for timestamp definition: http://www.ecma-international.org/publications/standards/Ecma-262.htm

Sample

```json
{
  "id": "-F_Szdjg3GzY",
  "modified": 1278109839.96,
  "sortindex": 140,
  "payload": "{\"ciphertext\":\"e2zLWJYX/iTw3WXQqffo00kuuut0Sk3G7erqXD8c65S5QfB85rqolFAU0r72GbbLkS7ZBpcpmAvX6LckEBBhQPyMt7lJzfwCUxIN/uCTpwlf9MvioGX0d4uk3G8h1YZvrEs45hWngKKf7dTqOxaJ6kGp507A6AvCUVuT7jzG70fvTCIFyemV+Rn80rgzHHDlVy4FYti6tDkmhx8t6OMnH9o/ax/3B2cM+6J2Frj6Q83OEW/QBC8Q6/XHgtJJlFi6fKWrG+XtFxS2/AazbkAMWgPfhZvIGVwkM2HeZtiuRLM=\",\"IV\":\"GluQHjEH65G0gPk/d/OGmg==\",\"hmac\":\"c550f20a784cab566f8b2223e546c3abbd52e2709e74e4e9902faad8611aa289\"}"
}
```
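The field constraints in the WBO table can be checked on the client before an upload. The following is a minimal sketch, not the server's own validation: `validate_wbo`, `looks_like_firefox_id` and `is_expired` are hypothetical helpers, the "256k" payload limit is assumed to mean 256 * 1024 bytes of UTF-8, and TTL expiry is assumed to be measured from `modified`.

```python
import re
import time
from typing import List, Optional

# Limits from the WBO table; reading "256k" as 256 * 1024 bytes is an assumption.
MAX_ID_LEN = 64
MAX_PAYLOAD_BYTES = 256 * 1024
# Firefox expects 12 base64url characters (not enforced by the server).
FIREFOX_ID_RE = re.compile(r"[A-Za-z0-9_-]{12}")


def looks_like_firefox_id(wbo_id: str) -> bool:
    """True if the id has the shape the Firefox client expects."""
    return FIREFOX_ID_RE.fullmatch(wbo_id) is not None


def validate_wbo(wbo: dict) -> List[str]:
    """Return a list of problems with a WBO dict; an empty list means none found."""
    problems = []
    wbo_id = wbo.get("id")
    if not isinstance(wbo_id, str) or not wbo_id:
        problems.append("id is required and must be a string")
    elif len(wbo_id) > MAX_ID_LEN:
        problems.append("id longer than 64 characters")
    # sortindex and ttl are optional integers (bool is excluded explicitly).
    for field in ("sortindex", "ttl"):
        value = wbo.get(field)
        if value is not None and (isinstance(value, bool) or not isinstance(value, int)):
            problems.append(f"{field} must be an integer")
    payload = wbo.get("payload")
    if payload is not None:
        if not isinstance(payload, str):
            problems.append("payload must be a string")
        elif len(payload.encode("utf-8")) > MAX_PAYLOAD_BYTES:
            problems.append("payload larger than 256k")
    return problems


def is_expired(wbo: dict, now: Optional[float] = None) -> bool:
    """True if the WBO has a ttl and more than ttl seconds have passed since `modified`."""
    ttl = wbo.get("ttl")
    if ttl is None:
        return False
    if now is None:
        now = time.time()
    return now > wbo["modified"] + ttl
```

The sample record above passes these checks, while a v1.0-style UUID id would be flagged by `looks_like_firefox_id` even though the server accepts it.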

## Collections

Each WBO is assigned to a collection with related WBOs. Collection names may only contain alphanumeric characters, period, underscore, and hyphen.

Default Mozilla collections:

- bookmarks

- history

- forms

- prefs

- tabs

- passwords

Internal-use collections:

- clients

- crypto

- keys

- meta
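The naming rule can be expressed as a single regular expression. This is a sketch under two assumptions not stated above: "alphanumeric" means ASCII letters and digits, and a name must contain at least one character. The helper names are hypothetical.

```python
import re

# Alphanumerics, period, underscore and hyphen; ASCII-only and non-empty are assumptions.
COLLECTION_NAME_RE = re.compile(r"[A-Za-z0-9._-]+")

DEFAULT_COLLECTIONS = {"bookmarks", "history", "forms", "prefs", "tabs", "passwords"}
INTERNAL_COLLECTIONS = {"clients", "crypto", "keys", "meta"}


def is_valid_collection_name(name: str) -> bool:
    """Check a collection name against the allowed character set."""
    # fullmatch (rather than match with "$") also rejects a trailing newline.
    return COLLECTION_NAME_RE.fullmatch(name) is not None


def is_internal_collection(name: str) -> bool:
    """True for collections reserved for internal client use."""
    return name in INTERNAL_COLLECTIONS
```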

## URL Semantics

Storage URLs generally follow REST semantics. Request and response bodies are JSON-encoded.

URL structure:

`https://<server name>/<api pathname>/<version>/<username>/<further instruction>`

| Component | Mozilla Default | Description |
|----------|-----------------|-------------|
| server name | defined by user account | Hostname of the server |
| pathname | none | Prefix associated with the service |
| version | 1.1 | API version |
| username | none | User identifier |
| further instruction | none | Function-specific path |

Certain functions use HTTP Basic Authentication over SSL. If the authentication username does not match the username in the path, an error response is returned.
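A minimal sketch of assembling a request URL from the components in the table and the matching Basic auth header. `storage_url` and `basic_auth_header` are hypothetical helpers; the host `sync.example.com` and the credentials in the usage note are placeholders. Because the server rejects requests whose auth username differs from the path username, the same `username` value should feed both.

```python
import base64
from urllib.parse import quote


def storage_url(server: str, pathname: str, version: str, username: str, instruction: str) -> str:
    """Assemble https://<server>/<pathname>/<version>/<username>/<instruction>.

    An empty pathname (the Mozilla default is "none") is skipped.
    """
    segments = [pathname, version, quote(username, safe=""), instruction]
    parts = [s.strip("/") for s in segments if s.strip("/")]
    return f"https://{server}/" + "/".join(parts)


def basic_auth_header(username: str, password: str) -> str:
    """Value for the Authorization header using HTTP Basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"
```

For example, `storage_url("sync.example.com", "", "1.1", "alice", "info/collections")` yields `https://sync.example.com/1.1/alice/info/collections`, to be sent with `basic_auth_header("alice", password)`.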

## APIs

### GET

`GET /info/collections`

Returns collections and their last-modified timestamps.

`GET /info/collection_usage`

Returns collections and storage usage (KB).

`GET /info/collection_counts`

Returns collections and item counts.

`GET /info/quota`

Returns current usage and quota (KB).
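One common use of `GET /info/collections` is deciding which collections need fetching. This sketch assumes the response is a JSON object mapping collection names to float timestamps and that the client keeps its own record of the last timestamp it synced per collection; `collections_to_sync` is a hypothetical helper and the sample body is invented for illustration.

```python
import json
from typing import Dict, List


def collections_to_sync(info_collections: Dict[str, float], last_synced: Dict[str, float]) -> List[str]:
    """Collections whose server timestamp is newer than the locally recorded one."""
    return sorted(
        name
        for name, modified in info_collections.items()
        if modified > last_synced.get(name, 0.0)  # never-synced collections count as stale
    )


body = '{"bookmarks": 1278109839.96, "tabs": 1278109900.12, "history": 1278100000.5}'
stale = collections_to_sync(json.loads(body), {"bookmarks": 1278109839.96, "tabs": 1278109800.0})
```

Here `stale` is `["history", "tabs"]`: `bookmarks` is unchanged, `tabs` is newer on the server, and `history` has never been synced.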

`GET /storage/<collection>`

Returns WBO ids in a collection. Optional parameters:

- ids

- predecessorid (deprecated)

- parentid (deprecated)

- older

- newer

- full

- index_above

- index_below

- limit

- offset

- sort (oldest, newest, index)
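The optional parameters are plain query-string values. The sketch below builds them with `urllib.parse.urlencode`; the comma-separated `ids` encoding and `full=1` are assumptions (the v1.0 rule that ids must not contain commas suggests comma separation), and the deprecated `predecessorid`/`parentid` filters are deliberately left out. `collection_query` is a hypothetical helper.

```python
from typing import List, Optional
from urllib.parse import urlencode

SORT_ORDERS = {"oldest", "newest", "index"}


def collection_query(ids: Optional[List[str]] = None, older: Optional[float] = None,
                     newer: Optional[float] = None, full: bool = False,
                     index_above: Optional[int] = None, index_below: Optional[int] = None,
                     limit: Optional[int] = None, offset: Optional[int] = None,
                     sort: Optional[str] = None) -> str:
    """Build the query string for GET /storage/<collection>."""
    params = []
    if ids:
        params.append(("ids", ",".join(ids)))  # comma-separated list (assumed)
    for name, value in (("older", older), ("newer", newer),
                        ("index_above", index_above), ("index_below", index_below),
                        ("limit", limit), ("offset", offset)):
        if value is not None:
            params.append((name, str(value)))
    if full:
        params.append(("full", "1"))  # assumed: any value requests full WBOs instead of ids
    if sort is not None:
        if sort not in SORT_ORDERS:
            raise ValueError(f"sort must be one of {sorted(SORT_ORDERS)}")
        params.append(("sort", sort))
    return urlencode(params)
```

`limit` and `offset` can then be advanced together to page through a large collection.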

Alternate output formats via `Accept` header:

- application/whoisi

- application/newlines
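With `application/newlines`, each record is one JSON object per line. A sketch of both directions follows; the helper names are hypothetical. It relies on `json.dumps` escaping newline characters inside strings, so a serialized record never spans two lines, and it splits only on `"\n"` because `str.splitlines()` would also break on characters such as U+2028.

```python
import json
from typing import Iterable, List


def to_newlines(records: Iterable[dict]) -> str:
    """Serialize records as application/newlines: one JSON object per line."""
    # json.dumps escapes newline characters inside strings, so each record stays on one line.
    return "".join(json.dumps(record) + "\n" for record in records)


def parse_newlines(body: str) -> List[dict]:
    """Parse an application/newlines body back into records."""
    # Split on "\n" only; blank lines (e.g. the trailing one) are skipped.
    return [json.loads(line) for line in body.split("\n") if line.strip()]
```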

`GET /storage/<collection>/<id>`

Returns the requested WBO.

### PUT

`PUT /storage/<collection>/<id>`

Adds or updates a WBO. Metadata-only update if no payload is provided.

Returns the modification timestamp.
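A sketch of building (not sending) a single-WBO `PUT` with the standard library's `urllib.request.Request`. `put_wbo_request` and the base URL in the test are hypothetical. Passing fields without `payload` requests a metadata-only update, and the optional `X-If-Unmodified-Since` header (described under Headers) guards against overwriting concurrent changes; formatting it with two decimals mirrors the timestamp format.

```python
import json
from typing import Optional
from urllib.request import Request


def put_wbo_request(base_url: str, collection: str, wbo_id: str, fields: dict,
                    auth_header: str, if_unmodified_since: Optional[float] = None) -> Request:
    """Build (but do not send) a PUT for a single WBO.

    Passing fields without "payload" asks for a metadata-only update.
    """
    headers = {"Content-Type": "application/json", "Authorization": auth_header}
    if if_unmodified_since is not None:
        # Optional concurrency guard; timestamps use two decimal places.
        headers["X-If-Unmodified-Since"] = f"{if_unmodified_since:.2f}"
    return Request(
        f"{base_url}/storage/{collection}/{wbo_id}",
        data=json.dumps(fields).encode("utf-8"),
        headers=headers,
        method="PUT",
    )
```

`urllib.request.urlopen(req)` would send it; the returned body carries the modification timestamp.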

### POST

`POST /storage/<collection>`

Bulk upload of WBOs with a shared timestamp.

Sample response:

```json
{
  "modified": 1233702554.25,
  "success": ["{GXS58IDC}12", "{GXS58IDC}13"],
  "failed": {
    "{GXS58IDC}11": ["invalid parentid"]
  }
}
```
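A client typically splits a bulk-upload response like the sample above into the shared timestamp, the accepted ids, and the per-id failure reasons. This is a sketch with hypothetical helper names; whether a failed record should be retried as-is depends on its reason (an invalid `parentid` will simply fail again).

```python
import json
from typing import Dict, List, Set, Tuple


def parse_post_result(body: str) -> Tuple[float, Set[str], Dict[str, List[str]]]:
    """Split a bulk-upload response into (modified, succeeded ids, failures)."""
    result = json.loads(body)
    return result["modified"], set(result.get("success", [])), dict(result.get("failed", {}))


def ids_to_retry(sent_ids: List[str], succeeded: Set[str]) -> List[str]:
    """Ids that were sent but not confirmed, in their original order."""
    return [wbo_id for wbo_id in sent_ids if wbo_id not in succeeded]
```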

### DELETE

`DELETE /storage/<collection>`

Deletes a collection or selected items.

`DELETE /storage/<collection>/<id>`

Deletes a single WBO.

`DELETE /storage`

Deletes all user records. Requires the `X-Confirm-Delete` header.

All delete operations return a timestamp.
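A sketch of two of the delete requests using `urllib.request.Request`. Both helpers are hypothetical, and two details are assumptions rather than documented behavior: selected items are chosen with the same comma-separated `ids` parameter used by `GET`, and `X-Confirm-Delete` is sent with the value `"1"` (only the header's presence is stated as required).

```python
from typing import List
from urllib.parse import urlencode
from urllib.request import Request


def delete_items_request(base_url: str, collection: str, ids: List[str], auth_header: str) -> Request:
    """DELETE selected items; passing them via an `ids` query parameter is an assumption."""
    query = urlencode({"ids": ",".join(ids)})
    return Request(f"{base_url}/storage/{collection}?{query}",
                   headers={"Authorization": auth_header}, method="DELETE")


def delete_everything_request(base_url: str, auth_header: str) -> Request:
    """DELETE /storage; the header value "1" is assumed, only its presence is documented."""
    return Request(f"{base_url}/storage",
                   headers={"Authorization": auth_header, "X-Confirm-Delete": "1"},
                   method="DELETE")
```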

## Headers

### Retry-After

Used with HTTP 503 to indicate the expected duration of maintenance.

### X-Weave-Backoff

Indicates that the server is overloaded; the client should delay its next sync by the number of seconds given in the header (usually 1800).

### X-If-Unmodified-Since

Fails write requests if the collection was modified since the given timestamp.

### X-Weave-Alert

Human-readable warning or informational messages.

### X-Weave-Timestamp

Current server timestamp; also the modification time for PUT/POST requests.

### X-Weave-Records

If supported, returns the number of records in a multi-record GET response.
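Both `Retry-After` (on a 503) and `X-Weave-Backoff` ask the client to wait. A minimal sketch that honours the longer of the two follows; it assumes `Retry-After` carries a number of seconds rather than an HTTP date, and `seconds_until_next_sync` is a hypothetical helper.

```python
from typing import Mapping


def seconds_until_next_sync(status: int, headers: Mapping[str, str]) -> int:
    """How long a client should wait before its next sync (0 means no delay).

    Assumes Retry-After carries a number of seconds, not an HTTP date.
    """
    # Header names are case-insensitive in HTTP, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    delays = []
    if status == 503 and "retry-after" in lowered:
        delays.append(int(lowered["retry-after"]))
    if "x-weave-backoff" in lowered:
        delays.append(int(lowered["x-weave-backoff"]))
    # Honour the longest requested delay.
    return max(delays, default=0)
```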

## HTTP Status Codes

### 200

Request processed successfully.

### 400

Invalid request or data. Response includes a numeric error code.

### 401

Invalid credentials, possibly due to node reassignment or password change.

### 404

Resource not found. Returned for missing records or empty collections.

### 503

Server maintenance or overload. Used with `Retry-After`.
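A client can map these status codes to actions in one place. The action strings below are hypothetical labels, and `error_code` assumes the 400 body is a bare JSON number, which the text above implies but does not spell out.

```python
import json

# Hypothetical client-side actions for the documented status codes.
ACTIONS = {
    200: "process response",
    400: "report error code",       # body carries a numeric error code
    401: "re-authenticate",         # e.g. node reassignment or password change
    404: "treat as missing or empty",
    503: "back off",                # check Retry-After
}


def action_for_status(status: int) -> str:
    """Look up the client action for a status code."""
    return ACTIONS.get(status, "unexpected status")


def error_code(body: str) -> int:
    """Read the numeric error code from a 400 body, assumed to be a bare JSON number."""
    return int(json.loads(body))
```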

# Storage API v1.0 (Obsolete)

This document describes the legacy Sync Server Storage API, version 1.0. It has been superseded by Sync API v1.5.

## Weave Basic Object (WBO)

A Weave Basic Object is the generic wrapper around all items passed into and out of the Weave server. The Weave Basic Object has the following fields:

| Parameter | Default | Type / Max | Description |
|---|---|---|---|
| id | required | 64 | An identifying string. For a user, the id must be unique for a WBO within a collection, though objects in different collections may have the same ID. Ids should be ASCII and not contain commas. |
| parentid | none | 64 | The id of a parent object in the same collection. This allows for the creation of hierarchical structures (such as folders). |
| predecessorid | none | 64 | The id of a predecessor in the same collection. This allows for the creation of linked-list-esque structures. |
| modified | time submitted | float (2 decimal places) | The last-modified date, in seconds since 1970-01-01 (UNIX epoch time). Set by the server. |
| sortindex | none | integer | Indicates the relative importance of this item in the collection. |
| payload | none | 256K | A string containing a JSON structure encapsulating the data of the record. This structure is defined separately for each WBO type. Parts of the structure may be encrypted, in which case the structure should also specify a record for decryption. |

Reference: http://www.ecma-international.org/publications/standards/Ecma-262.htm

Weave Basic Objects and all data passed into the Weave Server should be UTF-8 encoded.

Sample

```json
{
  "id": "B1549145-55CB-4A6B-9526-70D370821BB5",
  "parentid": "88C3865F-05A6-4E5C-8867-0FAC9AE264FC",
  "modified": "2454725.98",
  "payload": "{\"encryption\":\"http://server/prefix/version/user/crypto-meta/B1549145-55CB-4A6B-9526-70D370821BB5\", \"data\": \"a89sdmawo58aqlva.8vj2w9fmq2af8vamva98fgqamff...\"}"
}
```

## Collections

Each WBO is assigned to a collection with other related WBOs. Collection names may only contain alphanumeric characters, period, underscore and hyphen.

Collections supported at this time are:

- bookmarks

- history

- forms

- prefs

- tabs

- passwords

Additionally, the following collections are supported for internal Weave client use:

- clients

- crypto

- keys

- meta

## URL Semantics

Weave URLs follow, for the most part, REST semantics. Request and response bodies are all JSON-encoded.

The URL for Weave Storage requests is structured as follows:

`https://<server name>/<api pathname>/<version>/<username>/<further instruction>`

| Component | Mozilla Default | Description |
|---|---|---|
| server name | defined by user account node | the hostname of the server |
| pathname | none | the prefix associated with the service on the box |
| version | 1.0 | The API version. May be integer or decimal |
| username | none | The name of the object (user) to be manipulated |
| further instruction | none | The additional function information as defined in the paths below |

Weave uses HTTP basic auth (over SSL). If the auth username does not match the username in the path, the server will issue an error response.

The Weave API has a set of Weave Response Codes to cover errors in the request or on the server side.

## APIs

### GET

#### info/collections

`GET /<version>/<username>/info/collections`

Returns a hash of collections associated with the account, along with the last-modified timestamp for each collection.

#### info/collection_counts

`GET /<version>/<username>/info/collection_counts`

Returns a hash of collections associated with the account, along with the total number of items in each collection.

#### info/quota

`GET /<version>/<username>/info/quota`

Returns a tuple containing the user's current usage (in KB) and quota.

#### storage/collection

`GET /<version>/<username>/storage/<collection>`

Returns a list of the WBO ids contained in a collection.

Optional parameters:

- ids
- predecessorid
- parentid
- older
- newer
- full
- index_above
- index_below
- limit
- offset
- sort (oldest, newest, index)

#### storage/collection/id

`GET /<version>/<username>/storage/<collection>/<id>`

Returns the WBO in the collection corresponding to the requested id.

#### Alternate Output Formats

Triggered by the `Accept` header:

- application/whoisi: each record consists of a 32-bit integer defining the length of the record, followed by the JSON record.
- application/newlines: each record is a separate JSON object on its own line; newlines in the body are replaced by `\u000a`.
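The `application/whoisi` framing (a 32-bit length followed by the JSON record) can be encoded and decoded with Python's `struct` module. This is a sketch under assumptions the text does not state: the length is unsigned, big-endian (network byte order), and counts bytes of the UTF-8 encoded record. The helper names are hypothetical.

```python
import json
import struct
from typing import Iterable, List

# Assumption: unsigned 32-bit, big-endian length counting UTF-8 bytes of the record.
LENGTH_PREFIX = struct.Struct("!I")


def encode_whoisi(records: Iterable[dict]) -> bytes:
    """Frame each record as <length><JSON bytes>."""
    out = bytearray()
    for record in records:
        data = json.dumps(record).encode("utf-8")
        out += LENGTH_PREFIX.pack(len(data))
        out += data
    return bytes(out)


def decode_whoisi(body: bytes) -> List[dict]:
    """Read length-prefixed JSON records until the body is exhausted."""
    records, pos = [], 0
    while pos < len(body):
        if pos + LENGTH_PREFIX.size > len(body):
            raise ValueError("truncated length prefix")
        (length,) = LENGTH_PREFIX.unpack_from(body, pos)
        pos += LENGTH_PREFIX.size
        data = body[pos:pos + length]
        if len(data) != length:
            raise ValueError("truncated record")
        records.append(json.loads(data.decode("utf-8")))
        pos += length
    return records
```

Unlike `application/newlines`, this framing does not depend on how newlines inside records are escaped, at the cost of a binary body.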

### PUT

`PUT /<version>/<username>/storage/<collection>/<id>`

Adds or updates a WBO. Without a payload, only metadata fields are updated.

Returns the modification timestamp.

### POST

`POST /<version>/<username>/storage/<collection>`

Takes an array of WBOs and performs atomic PUTs with a shared timestamp.

Example response:

```json
{
  "modified": 1233702554.25,
  "success": ["{GXS58IDC}12", "{GXS58IDC}13"],
  "failed": {
    "{GXS58IDC}11": ["invalid parentid"]
  }
}
```

### DELETE

`DELETE /<version>/<username>/storage/<collection>`

Deletes the collection or selected items.

`DELETE /<version>/<username>/storage/<collection>/<id>`

Deletes a single WBO.

`DELETE /<version>/<username>/storage`

Deletes all records for the user. Requires the `X-Confirm-Delete` header.

All delete operations return a timestamp.

## General Weave Headers

### X-Weave-Backoff

Indicates server overload. The client should not retry until the specified number of seconds has elapsed.

### X-If-Unmodified-Since

Fails write requests if the collection has changed since the given timestamp.

### X-Weave-Alert

Human-readable warnings or informational messages.

### X-Weave-Timestamp

Server timestamp; also the modification time for PUT/POST requests.

### X-Weave-Records

If supported, returns the number of records in a multi-record GET response.

# Syncstorage Postgres Backend

## Tables Overview

| Table | Description |
|---|---|
| user_collections | Per-user metadata about each collection, including last_modified, record count, and total size |
| bsos | Stores Basic Storage Objects (BSOs) that represent synced records |
| collections | Maps collection names to their stable IDs |
| batches | Temporary staging of BSOs in batch uploads |
| batch_bsos | Stores BSOs that are part of a batch, pending commit |

## User Collection Table

Stores per-user, per-collection metadata.

| Column | Type | Description |
|---|---|---|
| user_id | BIGINT | The user id (assigned by Tokenserver). PK (part 1) |