Note

This article was translated from French by Julien/Sphinx. We thank him a lot for this effort! Note, that at the beginning of this year, he also translated MDN to French! Big up !

tl;dr We have to build a service to track payments, and we're hesitant to go on with our own solution for storage and synchronization.

As we wrote in the previous article (FR), we want to build a solution for generic data storage. We're rebooting Daybed at Mozilla!

Our goal is simple: allow developers, whether they are from Mozilla or from the whole world, to easily synchronize and save data associated to a user.

Here are the aspects of the architecture that seem essential to us:

- The solution must rely on a protocol, not on a particular implementation;

- Self-hosting of the whole solution must be dead simple;

- Authentication must be pluggable or decentralized (OAuth2, FxA, Persona);

- The server should be able to validate records;

- An authorization/permissions system must allow collection protection or fine-grained record sharing;

- Conflict resolution could happen server-side;

- Clients should be "offline-first";

- Clients should be able to easily merge/reconcile data;

- Clients should be usable in the browser and server-side;

- Every component should be simple and easily substitutable.

The first question we were asked was: "Why aren't you just using PouchDB or Remote Storage?"

Remote Storage

Remote Storage is an open standard for user storage. The specification is based on existing and proven standards: Webfinger, OAuth2, CORS and REST.

The API is pretty simple and prestigious projects are using it. There exist several server implementations and there is even a Node skeleton to build a custom server.

The remoteStorage.js client allows us to integrate this solution into Web apps. This client is in charge of the "local store", caching, syncing and providing users a widget so that they can choose the server which will receive the data (using Webfinger).

ludbud, a refined version of remoteStorage.js limits itself to the abstraction of the remote data storage. At the end, it would be possible to have a single library and to store data in either a Remote Storage server, an ownCloud server, or even on the bad guys' like Google Drive or Dropbox.

At first glance, the specification seemed to fit with what we want to do:

- The approach taken by the protocol is sound;

- The ecosystem is well thought out;

- The political vision fits: give back the control of the data to the users (see unhosted);

- Technical choices are compatible with what we've already started (CORS, REST, OAuth2);

However, regarding data manipulation, there are several differences with what we want to do:

- The API seems oriented around "files" (folders and documents) and not "data" (collections and records);

- There is no record validation following some schema (though some implementations of the protocol are actually doing this);

- There is no option to sort/filter records with regards to their attributes;

- The permission system is limited to private/public (and the author is going for a git-like model) [1];

To summarize, it would seem that what we want to achieve with the storage of records is complementary to Remote Storage.

If there are some needs about "file oriented" persistence, it would be dull to reinvent this solution. So there is a great chance that we will integrate Remote Storage some day and that it will become a facet of our solution.

PouchDB

PouchDB is a Javascript library allowing to manipulate records locally and synchronize them to a distant database.

var db = new PouchDB('dbname');

db.put({

_id: 'dave@gmail.com',

name: 'David',

age: 68

});

db.replicate.to('http://example.com/mydb');

The project is seeing some traction and benefits from a lot of contributors. The ecosystem is rich and adoption by projects such as Hoodie confirms the tool's relevance for frontend developers.

PouchDB handles a local "store" whose persistence is built on top of the LevelDown API to persist data in any backend.

Even if PouchDB is mainly done for "offline-first" applications, it can be used inside browsers and on the server side, via Node.

Synchronization

Local data synchronization (or replication) is done on a remote CouchDB.

The PouchDB Server project implements the CouchDB API in NodeJS. Because PouchDB itself is used, we obtain a service which is behaving like a CouchDB, but stores data anywhere (in a Redis or a PostgreSQL database for instance).

The synchronisation is complete. In other words, all records that are on the server will end up being synchronised with the client. It is possible to filter synchronized collections but its purpose is not to secure data access.

In order to do so, it is recommended to create a database per user.

This isn't necessarily a problem since CouchDB can handle hundreds of thousands of databases without any problem. However, depending on use cases, clustering and isolation (by role, application, collection, ...) might not be dealt with easily.

The "Payments" use case

During the next weeks, we will have to setup a prototype that keeps an history of a user's payments and subscriptions.

The requirements are simple:

- The "Payment" application tracks payments and subscriptions of a user for a given application;

- The "Data" application consults the service to check if a user paid or has subscribed;

- The user consults the service to get a list of all payments/subscriptions related to her.

Only the "Payment" application should have the right to create/modify/delete records. The two others can only have read-only access to these records.

A given application cannot access to some other application payments and a given user cannot access to some other user's payments.

With RemoteStorage

The idea of Remote Storage is to separate the application from the data that the user created with the application.

In our use case, the "Payment" app is dealing with the data concerning a user. However, this data does not directly belong to the user. A user should be able to delete some records but he/she cannot create or edit some existing payments!

The concept of permissions, limited to private/public is not suitable here.

With PouchDB

It will be necessary to create a database per user in order to separate the records in a secure way. Only the "Payment" app will be granted full rights on the different databases.

But this won't be enough.

An app must not see payments from another application so it would also be necessary to create a database per application.

When a user will need to access payments, it will be mandatory to join every database of every application for this current user. When the marketing department will want to build stats for all apps, one will have to join hundreds of thousands of databases.

This doesn't seem appropriate: most of the time, there are only few payments/subscriptions for a given user. Should we have hundreds of thousands of databases, each of which will have less than 5 records?

Moreover, the server side of "Payment" is implemented with Python. Using a JavaScript wrapper (as python-pouchdb) would not thrill us.

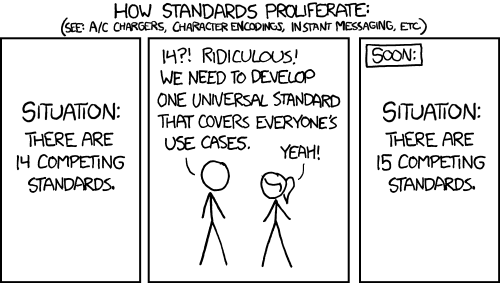

A new ecosystem?

It is obvious that PouchDB and Remote Storage are rich projects with dynamic communities. Therefore, it's reasonable to wonder if one should develop another solution.

When we created the Reading List server, we built it with Cliquet. We had a chance to setup a very simple protocol, strongly inspired by Firefox Sync, to sync records.

The reason clients for Reading List were implemented in few weeks, whether in JavaScript, Java (Android) or ASM (Firefox addon), is that the "offline first" principle of the service is trivial.

Tradeoffs

Of course, we don't intend to compete with CouchDB and are making some concessions:

- By default, record collections are isolated by user;

- There is no history of revisions;

- There is no diff between each revision;

- By default, there is no automatic conflict resolution;

- There is no stream synchronization.

If we are not mistaken, these tradeoffs exclude the possibility of implementing a PouchDB adapter for the HTTP-based synchronisation protocol of Cliquet.

Too bad since it would have been a great opportunity to capitalize on the user experience of PouchDB regarding the synchronisation client.

However, we have some interesting features:

- No map-reduce;

- Partial and/or ordered and/or paginated synchronisation;

- The client can choose, with headers, to delete the data or to accept the server version;

- A single server is deployed for N apps;

- Self hosting is dead simple;

- The client can choose not to use local storage at all;

- The JavaScript client will have its local store management delegated (we're thinking about LocalForage or Dexie.js);

And we are complying with the specs we drew at the beginning of the article!

Philosophical arguments

It's unrealistic to think that we can achieve everything with a single tool.

We have other use cases that seem to fit with PouchDB (no concept of permission or sharing, JavaScript environment, ...). We'll take advantage of it when relevant!

The ecosystem we want to build should address the use cases that are badly handled by PouchDB. It should be:

- Based on our very simple protocol;

- Minimalist and with multiple purposes (like our very French 2CV);

- Naive (no rocket-science);

- Without magic (explicit and easy to reimplement from scratch);

The philosophy and the features of our Python toolkit, Cliquet, will of course be honoured :)

As for Remote Storage, whenever we face the need, we will proud to join this initiative. However, as for now, it seems risky to start by bending the solution to our own needs.

Practical arguments

Before being willingly to deploy a CouchDB solution, Mozilla ops will ask us to precisely prove that it's not doable with stacks we already have running internally (e.g. MySQL, Redis, PostgreSQL).

We will also have to guarantee a minimum 5 years lifetime regarding the data. With Cliquet, using the PostgreSQL backend, our data is persisted in a flat PostgreSQL schema.

This wouldn't be the case with a LevelDown adapter that handles revisions split in a key-value scheme.

If we base our service on Cliquet, like we did with Kinto, all the automation work of deploying (monitoring, RPM builds, Puppet...) that was done for Reading List will be completely reusable.

As said before, if we go with another totally new stack, we will have to start again from scratch, including productionalizing, profiling, optimizing, all of which has already been done during the first quarter of this year for Reading List.

Next steps

It's still time to change our strategy :) And we welcome any feedback! It's always a difficult decision to make... </troll call>

- Twist an existing ecosystem vs build a new custom one;

- Master the whole system or to integrate our solution;

- Contribute vs redo;

- Guide vs follow.

We really seek to join the no-backend initiative. This first step might lead us to converge in the end! Maybe our service will end up being compatible with Remote Storage, maybe PouchDB will become more agnostic regarding the synchronisation protocol...

Using this new ecosystem for the "Payments" project will allow us to setup a suitable permission system (probably built on OAuth scopes). We are also looking forward to capitalizing on our Daybed experience for this project.

We'll also extract some parts of the clients source code that were implemented for Reading List in order to provide a minimalist JavaScript client.

By going this way, we are taking several risks:

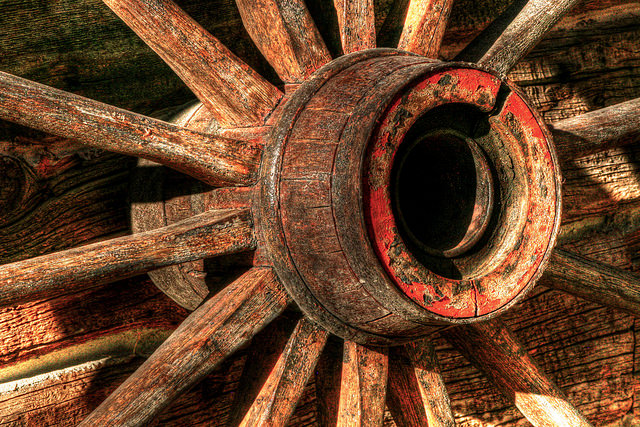

- reinventing a wheel we don't know;

- failing to make the Cliquet ecosystem a community project;

- failing to place Cliquet in the niche for the use cases that are not covered with PouchDB :)

Rolling out your set of webservices, push notifications, or background services might give you more control, but at the same time it will force you to engineer, write, test, and maintain a whole new ecosystem.

And this ecosystem is precisely the one that Mozilla Cloud Services team is in charge of!

| [1] | The Sharesome project allows for some public sharing of one's resources from one's Remote Storage. |